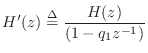

Digital Waveguide Theory

In this appendix, the basic principles of digital waveguide acoustic modeling are derived from a mathematical point of view. For this, the reader is expected to have some background in linear systems and elementary physics. In particular, facility with Laplace transforms [284], Newtonian mechanics [180], and basic differential equations is assumed.

We begin with the partial differential equation (PDE) describing the ideal vibrating string, which we first digitize by converting partial derivatives to finite differences. This yields a discrete-time recursion which approximately simulates the ideal string. Next, we go back and solve the original PDE, obtaining continuous traveling waves as the solution. These traveling waves are then digitized by ordinary sampling, resulting in the digital waveguide model for the ideal string. The digital waveguide simulation is then shown to be equivalent to a particular finite-difference recursion. (This only happens for the lossless ideal vibrating string with a particular choice of sampling intervals, so it is an interesting case.) Next, digital waveguides simulating lossy and dispersive vibrating strings are derived, and alternative choices of wave variables (displacement, velocity, slope, force, power, etc.) are introduced. Finally, an introduction to scattering theory for digital waveguides is presented.

The Ideal Vibrating String

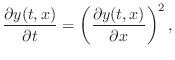

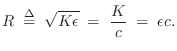

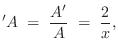

The wave equation for the ideal (lossless, linear, flexible) vibrating string, depicted in Fig.C.1, is given by

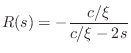

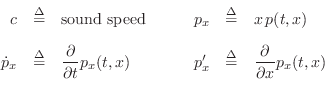

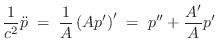

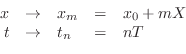

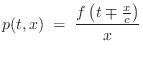

where

|

where ``

The Finite Difference Approximation

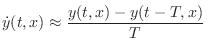

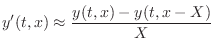

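In the musical acoustics literature, the normal method for creating a computational model from a differential equation is to apply the so-called finite difference approximation (FDA), in which differentiation is replaced by a finite difference (see Appendix D) [481,311]. For example,

$$\dot y(t,x) \approx \frac{y(t,x) - y(t-T,x)}{T}$$

and

$$y'(t,x) \approx \frac{y(t,x) - y(t,x-X)}{X}$$

where $T$ is the time sampling interval to be used in the simulation, and $X$ is a spatial sampling interval. These approximations become exact in the limit as $T$ and $X$ approach zero. To avoid a delay error, the second-order finite differences are defined with a compensating time shift:

$$\ddot y(t,x) \approx \frac{y(t+T,x) - 2y(t,x) + y(t-T,x)}{T^2}$$

$$y''(t,x) \approx \frac{y(t,x+X) - 2y(t,x) + y(t,x-X)}{X^2}$$

The odd-order derivative approximations suffer a half-sample delay error, while all even-order cases can be compensated as above.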
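The half-sample delay of the one-sided first difference, and its absence in the centered second difference, can be checked numerically. The following Python sketch assumes a sinusoidal test signal observed at a single position; the frequency, sampling interval, and evaluation time are arbitrary illustration values.

```python
import math

f = 50.0       # test frequency in Hz (arbitrary)
T = 1e-4       # time sampling interval in seconds (arbitrary)
t = 0.0123     # evaluation instant in seconds (arbitrary)
w = 2 * math.pi * f

def y(t):      # test signal at one fixed position x
    return math.sin(w * t)

def ydot(t):   # exact first time derivative
    return w * math.cos(w * t)

def yddot(t):  # exact second time derivative
    return -w * w * math.sin(w * t)

# First-order backward difference (y(t) - y(t-T)) / T
d1 = (y(t) - y(t - T)) / T
rel_err_at_t = abs(d1 - ydot(t)) / abs(ydot(t))                      # read as derivative at t
rel_err_at_half = abs(d1 - ydot(t - T / 2)) / abs(ydot(t - T / 2))   # read as derivative at t - T/2

# Centered second difference (y(t+T) - 2 y(t) + y(t-T)) / T^2
d2 = (y(t + T) - 2 * y(t) + y(t - T)) / T ** 2
rel_err_2nd = abs(d2 - yddot(t)) / abs(yddot(t))

print(f"backward difference vs derivative at t:       {rel_err_at_t:.1e}")
print(f"backward difference vs derivative at t - T/2: {rel_err_at_half:.1e}")
print(f"centered second difference vs 2nd derivative: {rel_err_2nd:.1e}")
```

The backward difference agrees far more closely with the true derivative at $t - T/2$ than at $t$, which is the half-sample delay error described above.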

FDA of the Ideal String

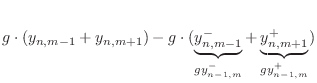

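Substituting the FDA into the wave equation gives

$$K\,\frac{y(t,x+X) - 2y(t,x) + y(t,x-X)}{X^2} = \epsilon\,\frac{y(t+T,x) - 2y(t,x) + y(t-T,x)}{T^2},$$

which can be solved to yield the following recursion for the string displacement:

$$y(t+T,x) = \frac{KT^2}{\epsilon X^2}\left[y(t,x+X) - 2y(t,x) + y(t,x-X)\right] + 2y(t,x) - y(t-T,x).$$

In a practical implementation, it is common to set $X = \sqrt{K/\epsilon}\,T$, which makes $KT^2/(\epsilon X^2) = 1$, and to evaluate on the integers $t = nT \to n$ and $x = mX \to m$, obtaining the difference equation

$$y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m). \qquad \text{(C.6)}$$

Thus, to update the sampled string displacement, past values are needed for each point along the string at time instants $n$ and $n-1$.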

Perhaps surprisingly, it is shown in Appendix E that the above recursion is exact at the sample points in spite of the apparent crudeness of the finite difference approximation [442]. The FDA approach to numerical simulation was used by Pierre Ruiz in his work on vibrating strings [392], and it is still in use today [74,75].
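A minimal Python sketch of the difference equation $y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m)$ for a string with rigid (zero-displacement) ends is shown below. The function name `fda_string`, the triangular pluck, and the choice of the $n=-1$ slice (which makes both traveling-wave components start at half the initial shape, i.e., a release from rest) are illustrative assumptions rather than part of the text.

```python
def fda_string(y0, num_steps):
    """Iterate y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m) with rigid ends.

    y0 holds the displacement at time n = 0; y0[0] and y0[-1] must be zero.
    The n = -1 slice is set so that both traveling-wave components start out
    equal to y0/2 (an assumed "released from rest" initial condition).
    Returns the displacement slices for n = 0, 1, ..., num_steps.
    """
    M = len(y0)
    if y0[0] != 0 or y0[-1] != 0:
        raise ValueError("rigid terminations require zero displacement at both ends")
    y_prev = [0.0] * M                               # y(-1, m)
    for m in range(1, M - 1):
        y_prev[m] = 0.5 * (y0[m + 1] + y0[m - 1])
    y_curr = [float(v) for v in y0]                  # y(0, m)
    history = [y_curr]
    for _ in range(num_steps):
        y_next = [0.0] * M                           # end points stay at zero
        for m in range(1, M - 1):
            y_next[m] = y_curr[m + 1] + y_curr[m - 1] - y_prev[m]
        y_prev, y_curr = y_curr, y_next
        history.append(y_curr)
    return history

# Usage: a string with N = 10 spatial intervals, plucked at m = 3.
N = 10
pluck = [m / 3 if m <= 3 else (N - m) / (N - 3) for m in range(N + 1)]
hist = fda_string(pluck, 2 * N)
print([round(v, 3) for v in hist[N]])   # half a period later
```

With these initial conditions, the displacement after $N$ steps is the inverted mirror image of the pluck, and after $2N$ steps it returns to the pluck, to within rounding error.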

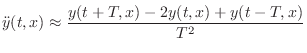

When more terms are added to the wave equation, corresponding to complex

losses and dispersion characteristics, more terms of the form

![]() appear in (C.6). These higher-order terms correspond to

frequency-dependent losses and/or dispersion characteristics in

the FDA. All linear differential equations with constant coefficients give rise to

some linear, time-invariant discrete-time system via the FDA.

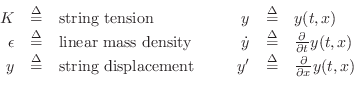

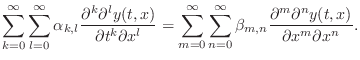

A general subclass of the linear, time-invariant case

giving rise to ``filtered waveguides'' is

appear in (C.6). These higher-order terms correspond to

frequency-dependent losses and/or dispersion characteristics in

the FDA. All linear differential equations with constant coefficients give rise to

some linear, time-invariant discrete-time system via the FDA.

A general subclass of the linear, time-invariant case

giving rise to ``filtered waveguides'' is

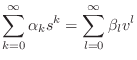

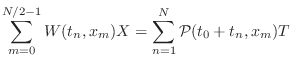

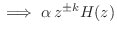

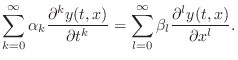

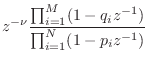

|

(C.7) |

while the fully general linear, time-invariant 2D case is

|

(C.8) |

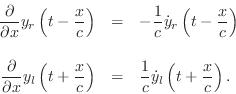

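A nonlinear example is

$$\frac{\partial y(t,x)}{\partial t} = \left(\frac{\partial y(t,x)}{\partial x}\right)^2 \qquad \text{(C.9)}$$

and a time-varying example can be given by

$$\frac{\partial y(t,x)}{\partial t} = t^2\, \frac{\partial y(t,x)}{\partial x} \qquad \text{(C.10)}$$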

Traveling-Wave Solution

It is easily shown that the lossless 1D wave equation $K y'' = \epsilon \ddot y$ is solved by any string shape which travels to the left or right with speed $c \triangleq \sqrt{K/\epsilon}$. Denote right-going traveling waves in general by $y_r(t - x/c)$ and left-going traveling waves by $y_l(t + x/c)$, where $y_r$ and $y_l$ are assumed twice-differentiable. Then a general class of solutions to the lossless, one-dimensional, second-order wave equation can be expressed as

$$y(t,x) = y_r\left(t - \frac{x}{c}\right) + y_l\left(t + \frac{x}{c}\right). \qquad \text{(C.11)}$$

The next section derives the result that $\ddot y_r = c^2 y_r''$ and $\ddot y_l = c^2 y_l''$, establishing that the wave equation is satisfied for all twice-differentiable traveling-wave shapes $y_r$ and $y_l$.

An important point to note about the traveling-wave solution of the 1D wave equation is that a function of two variables $y(t,x)$ has been replaced by two functions of a single variable in time units. This leads to great reductions in computational complexity.

The traveling-wave solution of the wave equation was first published by d'Alembert in 1747 [100]. See Appendix A for more on the history of the wave equation and related topics.

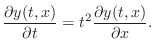

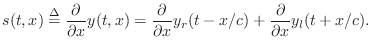

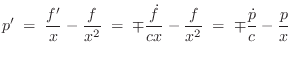

Traveling-Wave Partial Derivatives

Because we have defined our traveling-wave components $y_r(t - x/c)$ and $y_l(t + x/c)$ as having arguments in units of time, the partial derivatives with respect to time $t$ are identical to simple derivatives of these functions. Let $\dot y_r$ and $\dot y_l$ denote the (partial) derivatives with respect to time of $y_r$ and $y_l$, respectively. In contrast, the partial derivatives with respect to $x$ are

$$\frac{\partial}{\partial x}\, y_r\left(t - \frac{x}{c}\right) = -\frac{1}{c}\,\dot y_r\left(t - \frac{x}{c}\right), \qquad \frac{\partial}{\partial x}\, y_l\left(t + \frac{x}{c}\right) = \frac{1}{c}\,\dot y_l\left(t + \frac{x}{c}\right).$$

Denoting the spatial partial derivatives by $y_r'$ and $y_l'$, respectively, we can write more succinctly

$$\begin{aligned}
y_r' &= -\frac{1}{c}\,\dot y_r\\
y_l' &= \frac{1}{c}\,\dot y_l,
\end{aligned}$$

where this argument-free notation assumes the same $t$ and $x$ for all terms in each equation, and the subscript $l$ or $r$ determines whether the omitted argument is $t + x/c$ or $t - x/c$.

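Now we can see that the second partial derivatives in $x$ are

$$y_r'' = -\frac{1}{c}\,\dot y_r' = \frac{1}{c^2}\,\ddot y_r, \qquad y_l'' = \frac{1}{c}\,\dot y_l' = \frac{1}{c^2}\,\ddot y_l.$$

These relations, together with the fact that partial differentiation is a linear operator, establish that

$$y(t,x) = y_r\left(t - \frac{x}{c}\right) + y_l\left(t + \frac{x}{c}\right)$$

obeys the ideal wave equation $\ddot y = c^2 y''$ for all twice-differentiable functions $y_r$ and $y_l$.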
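As a numerical illustration, the Python sketch below evaluates $y(t,x) = y_r(t - x/c) + y_l(t + x/c)$ for two arbitrary smooth shapes and an arbitrary speed $c$ (all assumptions for the example) and estimates both second partial derivatives with centered differences. Independent step sizes in $t$ and $x$ are used so that the check does not rely on the special choice $X = cT$.

```python
import math

c = 200.0  # wave speed in m/s (arbitrary)

def y_r(tau):  # right-going shape: any twice-differentiable function of time
    return math.exp(-((tau - 0.01) / 0.002) ** 2)

def y_l(tau):  # left-going shape: another arbitrary smooth function
    return 0.3 * math.sin(300.0 * tau)

def y(t, x):   # d'Alembert superposition, Eq. (C.11)
    return y_r(t - x / c) + y_l(t + x / c)

def second_diff(f, a, h):  # centered second-difference estimate of f''(a)
    return (f(a + h) - 2.0 * f(a) + f(a - h)) / h ** 2

t0, x0 = 0.012, 0.3
y_tt = second_diff(lambda t: y(t, x0), t0, 1e-5)   # estimate of the second time derivative
y_xx = second_diff(lambda x: y(t0, x), x0, 1e-3)   # estimate of the second space derivative
residual = abs(y_tt - c ** 2 * y_xx) / abs(y_tt)
print(f"relative residual of y_tt = c^2 y_xx: {residual:.1e}")
```

The small residual comes only from the finite-difference estimates, not from the traveling-wave solution itself.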

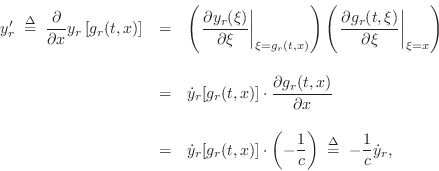

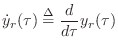

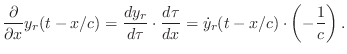

Use of the Chain Rule

These traveling-wave partial-derivative relations may be derived a bit

more formally by means of the chain rule from calculus, which

states that, for the composition of functions ![]() and

and ![]() , i.e.,

, i.e.,

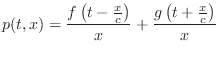

To apply the chain rule to the spatial differentiation of traveling waves, define

$$\begin{aligned}
g_r(t,x) &= t - \frac{x}{c}\\
g_l(t,x) &= t + \frac{x}{c}.
\end{aligned}$$

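Then the traveling-wave components can be written as $y_r[g_r(t,x)]$ and $y_l[g_l(t,x)]$, and their partial derivatives with respect to $x$ become

$$\frac{\partial}{\partial x}\, y_r\left[g_r(t,x)\right] = \dot y_r\left[g_r(t,x)\right] \frac{\partial}{\partial x}\, g_r(t,x) = -\frac{1}{c}\,\dot y_r\left(t - \frac{x}{c}\right)$$

and similarly for $y_l$.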

String Slope from Velocity Waves

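Let's use the above result to derive the slope of the ideal vibrating string. From Eq.(C.11), we have the string displacement given by

$$y(t,x) = y_r\left(t - \frac{x}{c}\right) + y_l\left(t + \frac{x}{c}\right).$$

By linearity of differentiation and the traveling-wave partial derivatives derived above, the string slope is

$$y'(t,x) = -\frac{1}{c}\,\dot y_r\left(t - \frac{x}{c}\right) + \frac{1}{c}\,\dot y_l\left(t + \frac{x}{c}\right) = \frac{1}{c}\left[\dot y_l\left(t + \frac{x}{c}\right) - \dot y_r\left(t - \frac{x}{c}\right)\right],$$

i.e., the slope is $1/c$ times the difference between the left-going and right-going velocity waves.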
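The slope relation $y' = (\dot y_l - \dot y_r)/c$ can be checked against a direct numerical derivative in $x$. The shapes, their derivatives, and the speed $c$ in this Python sketch are arbitrary illustrative choices.

```python
import math

c = 200.0  # wave speed in m/s (arbitrary)

def y_r(tau):       # arbitrary right-going shape
    return math.exp(-((tau - 0.01) / 0.002) ** 2)

def y_r_dot(tau):   # its time derivative (right-going velocity wave)
    u = (tau - 0.01) / 0.002
    return -2.0 * u / 0.002 * math.exp(-u * u)

def y_l(tau):       # arbitrary left-going shape
    return 0.3 * math.sin(300.0 * tau)

def y_l_dot(tau):   # its time derivative (left-going velocity wave)
    return 90.0 * math.cos(300.0 * tau)

def y(t, x):        # string displacement as a sum of traveling waves
    return y_r(t - x / c) + y_l(t + x / c)

t0, x0, dx = 0.012, 0.3, 1e-4
slope_numeric = (y(t0, x0 + dx) - y(t0, x0 - dx)) / (2.0 * dx)        # centered difference in x
slope_from_velocity = (y_l_dot(t0 + x0 / c) - y_r_dot(t0 - x0 / c)) / c
print(slope_numeric, slope_from_velocity)
```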

Wave Velocity

Because $e^{st}$ is an eigenfunction under differentiation (i.e., the exponential function is its own derivative), it is often profitable to replace it with a generalized exponential function, with maximum degrees of freedom in its parametrization, to see if parameters can be found to fulfill the constraints imposed by differential equations.

In the case of the one-dimensional ideal wave equation (Eq.(C.1)), with no boundary conditions, an appropriate choice of eigensolution is

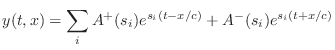

| (C.12) |

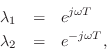

Substituting into the wave equation yields

| (C.13) | |||

|

|

||

Thus

D'Alembert Derived

Setting $s = j\omega$, and extending the summation to an integral, we have, by Fourier's theorem,

$$y(t,x) = y_r\left(t - \frac{x}{c}\right) + y_l\left(t + \frac{x}{c}\right) \qquad \text{(C.14)}$$

for arbitrary continuous functions $y_r(\cdot)$ and $y_l(\cdot)$.

An example of the appearance of the traveling wave components shortly after plucking an infinitely long string at three points is shown in Fig.C.2.

![Fig.C.2: traveling-wave components shortly after plucking an infinitely long string at three points](http://www.dsprelated.com/josimages_new/pasp/img3269.png)

Converting Any String State to Traveling Slope-Wave Components

We verified in §C.3.1 above that traveling-wave components $y_r$ and $y_l$ in Eq.(C.14) satisfy the ideal string wave equation $\ddot y = c^2 y''$. By definition, the physical string displacement is given by the sum of the traveling-wave components, or

$$y(t,x) = y_r\left(t - \frac{x}{c}\right) + y_l\left(t + \frac{x}{c}\right).$$

Thus, given any pair of traveling waves $y_r$ and $y_l$, we can compute a corresponding string displacement $y$.

The state of an ideal string at time $t$ is classically specified by its displacement $y(t,x)$ and velocity $v(t,x) \triangleq \dot y(t,x)$ for all $x$.

![$\displaystyle \left[\begin{array}{c} y(t,x) \\ [2pt] v(t,x) \end{array}\right] ...

...ght]

\left[\begin{array}{c} y_r(t-x/c) \\ [2pt] y_l(t+x/c) \end{array}\right].

$](http://www.dsprelated.com/josimages_new/pasp/img3273.png)

![$\displaystyle \left[\begin{array}{c} y'^{+} \\ [2pt] y'^{-} \end{array}\right] ...

...eft[\begin{array}{c} y'-\frac{v}{c} \\ [2pt] y'+\frac{v}{c} \end{array}\right]

$](http://www.dsprelated.com/josimages_new/pasp/img3274.png)

$$\begin{bmatrix} y^+ \\ y^- \end{bmatrix} = \frac{1}{2}\begin{bmatrix} y - w \\ y + w \end{bmatrix}$$

It will be seen in §C.7.4 that state conversion between physical variables and traveling-wave components is simpler when force and velocity are chosen as the physical state variables (as opposed to displacement and velocity used here).
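Combining $y' = y_r' + y_l'$ with $v = \dot y_r + \dot y_l = -c\,y_r' + c\,y_l'$ (from the traveling-wave partial derivatives derived earlier) gives right- and left-going slope waves $\tfrac{1}{2}(y' - v/c)$ and $\tfrac{1}{2}(y' + v/c)$. The Python sketch below applies this split to a sampled string state manufactured from known velocity waves, so the result can be compared with the expected $-\dot y_r/c$ and $\dot y_l/c$. The function name `to_slope_waves` and the test waves are hypothetical.

```python
import math

c = 200.0  # wave speed in m/s (arbitrary)

# Hypothetical known velocity waves, used only to manufacture a test state.
def yr_dot(tau):
    u = (tau - 0.01) / 0.002
    return -2.0 * u / 0.002 * math.exp(-u * u)

def yl_dot(tau):
    return 90.0 * math.cos(300.0 * tau)

def to_slope_waves(slope, vel, c):
    """Split string slope y' and velocity v into right- and left-going slope waves.

    Follows from y' = y_r' + y_l' and v = -c y_r' + c y_l'.
    """
    right = [0.5 * (s - v / c) for s, v in zip(slope, vel)]
    left = [0.5 * (s + v / c) for s, v in zip(slope, vel)]
    return right, left

t0 = 0.012
xs = [0.05 * k for k in range(21)]                                   # positions in meters
slope = [(yl_dot(t0 + x / c) - yr_dot(t0 - x / c)) / c for x in xs]  # physical slope y'
vel = [yr_dot(t0 - x / c) + yl_dot(t0 + x / c) for x in xs]          # physical velocity v
right, left = to_slope_waves(slope, vel, c)
```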

Sampled Traveling Waves

To carry the traveling-wave solution into the ``digital domain,'' it is necessary to sample the traveling-wave amplitudes at intervals of $T$ seconds, corresponding to a sampling rate $f_s \triangleq 1/T$ samples per second. For CD-quality audio, we have $f_s = 44.1$ kHz. The natural choice of spatial sampling interval $X$ is the distance sound propagates in one temporal sampling interval $T$, or $X \triangleq cT$ meters. In a lossless traveling-wave simulation, the whole wave moves left or right one spatial sample each time sample; hence, lossless simulation requires only digital delay lines. By lumping losses parsimoniously in a real acoustic model, most of the traveling-wave simulation can in fact be lossless even in a practical application.

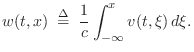

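Formally, sampling is carried out by the change of variables

$$x \to x_m = mX, \qquad t \to t_n = nT.$$

Substituting into the traveling-wave solution of the wave equation, and using $X = cT$, gives

$$y(t_n,x_m) = y_r\left(t_n - \frac{x_m}{c}\right) + y_l\left(t_n + \frac{x_m}{c}\right) = y_r(nT - mT) + y_l(nT + mT) = y_r\left[(n-m)T\right] + y_l\left[(n+m)T\right].$$

Since $T$ multiplies all arguments, let's suppress it by defining

$$y^+(n) \triangleq y_r(nT), \qquad y^-(n) \triangleq y_l(nT).$$

This new notation also introduces a ``$+$'' superscript to denote a traveling-wave component propagating to the right, and a ``$-$'' superscript to denote propagation to the left.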
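For a sense of scale, the sketch below computes $X = cT$ at the CD rate and confirms numerically that sampling the continuous traveling waves at $(t_n, x_m)$ gives $y^+(n-m) + y^-(n+m)$. The speed $c = 343$ m/s (roughly the speed of sound in air) and the two test shapes are assumptions for the example.

```python
import math

fs = 44100.0        # CD sampling rate in Hz
T = 1.0 / fs        # temporal sampling interval in seconds
c = 343.0           # assumed propagation speed in m/s (roughly sound in air)
X = c * T           # spatial sampling interval in meters
print(f"X = {1000 * X:.3f} mm")

def y_r(t):         # arbitrary bandlimited right-going shape (440 Hz < fs/2)
    return math.sin(2 * math.pi * 440.0 * t)

def y_l(t):         # arbitrary bandlimited left-going shape (1 kHz < fs/2)
    return 0.5 * math.cos(2 * math.pi * 1000.0 * t)

def y_continuous(t, x):   # traveling-wave solution evaluated at (t, x)
    return y_r(t - x / c) + y_l(t + x / c)

def y_sampled(n, m):      # y+(n-m) + y-(n+m), with y+(k) = y_r(kT), y-(k) = y_l(kT)
    return y_r((n - m) * T) + y_l((n + m) * T)

max_err = max(abs(y_continuous(n * T, m * X) - y_sampled(n, m))
              for n in range(50) for m in range(20))
```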

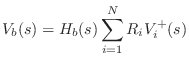

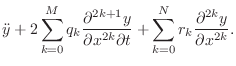

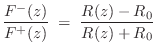

Digital Waveguide Model

![Fig.C.3: digital waveguide model of the ideal string](http://www.dsprelated.com/josimages_new/pasp/img3287.png)

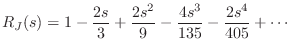

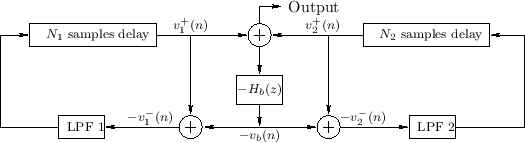

In this section, we interpret the sampled d'Alembert traveling-wave solution of the ideal wave equation as a digital filtering framework. This is an example of what are generally known as digital waveguide models [430,431,433,437,442].

The term $y_r[(n-m)T] = y^+(n-m)$ in Eq.(C.16) can be thought of as the output of an $m$-sample delay line whose input is $y^+(n)$. In general, subtracting a positive number $m$ from a time argument $n$ corresponds to delaying the waveform by $m$ samples. Since $y^+$ is the right-going component, we draw its delay line with input $y^+(n)$ on the left and its output $y^+(n-m)$ on the right. This can be seen as the upper ``rail'' in Fig.C.3.

Similarly, the term $y_l[(n+m)T] = y^-(n+m)$ can be thought of as the input to an $m$-sample delay line whose output is $y^-(n)$. Adding $m$ to the time argument $n$ produces an $m$-sample waveform advance. Since $y^-$ is the left-going component, it makes sense to draw the delay line with its input $y^-(n+m)$ on the right and its output $y^-(n)$ on the left. This can be seen as the lower ``rail'' in Fig.C.3.

Note that the position along the string, $x_m = mX = mcT$ meters, is laid out from left to right in the diagram, giving a physical interpretation to the horizontal direction in the diagram. Finally, the left- and right-going traveling waves must be summed to produce a physical output according to the formula

$$y(t_n,x_m) = y^+(n-m) + y^-(n+m).$$

We may compute the physical string displacement at any spatial sampling point $x_m$ by simply adding the upper and lower rails together at position $m$ along the delay-line pair.
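The two-rail structure can be sketched in a few lines of Python. This is not the C program referenced below; it is an illustrative sketch assuming rigid terminations (reflection with sign inversion), a string of $N$ spatial intervals, and both rails initialized to half of a triangular pluck shape (release from rest). The function name `waveguide_string` is hypothetical.

```python
def waveguide_string(y0, num_steps):
    """Ideal string as a pair of delay-line rails with rigid (sign-inverting) terminations.

    y0 is the initial displacement at positions m = 0..N (ends must be zero).
    Both rails start at y0/2, an assumed "released from rest" initial condition.
    Returns the physical displacement y(n, m) = y+ + y- for n = 0..num_steps.
    """
    N = len(y0) - 1
    upper = [0.5 * v for v in y0]   # right-going samples y+ at m = 0..N (upper rail)
    lower = [0.5 * v for v in y0]   # left-going samples y- at m = 0..N (lower rail)
    out = [[u + l for u, l in zip(upper, lower)]]
    for _ in range(num_steps):
        upper = [0.0] + upper[:-1]  # every right-going sample moves one position right
        lower = lower[1:] + [0.0]   # every left-going sample moves one position left
        upper[0] = -lower[0]        # reflection at m = 0 keeps y(n, 0) = 0
        lower[N] = -upper[N]        # reflection at m = N keeps y(n, N) = 0
        out.append([u + l for u, l in zip(upper, lower)])
    return out

N = 10
pluck = [m / 3 if m <= 3 else (N - m) / (N - 3) for m in range(N + 1)]  # pluck at m = 3
y = waveguide_string(pluck, 2 * N)
print([round(v, 3) for v in y[N]])
```

Because each rail is a pure delay, the displacement is periodic with period $2N$ samples, and halfway through the period it is the inverted mirror image of the initial pluck.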

Any ideal, one-dimensional waveguide can be simulated in this way. It is important to note that the simulation is exact at the sampling instants, to within the numerical precision of the samples themselves. To avoid aliasing associated with sampling, we require all waveshapes traveling along the string to be initially bandlimited to less than half the sampling frequency. In other words, the highest frequencies present in the signals $y_r(t)$ and $y_l(t)$ may not exceed half the temporal sampling frequency $f_s \triangleq 1/T$; equivalently, the highest spatial frequencies in the shapes $y_r(x/c)$ and $y_l(x/c)$ may not exceed half the spatial sampling frequency $1/X$.

A C program implementing a plucked/struck string model in the form of Fig.C.3 is available at http://ccrma.stanford.edu/~jos/pmudw/.

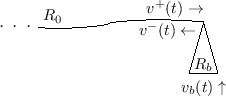

Digital Waveguide Interpolation

A more compact simulation diagram which stands for either sampled or continuous waveguide simulation is shown in Fig.C.4. The figure emphasizes that the ideal, lossless waveguide is simulated by a bidirectional delay line, and that bandlimited spatial interpolation may be used to construct a displacement output for an arbitrary $x$ not a multiple of $cT$, as suggested by the output drawn in Fig.C.4. Similarly, bandlimited interpolation across time serves to evaluate the waveform at an arbitrary time not an integer multiple of $T$ (§4.4).

Ideally, bandlimited interpolation is carried out by convolving a continuous ``sinc function'' $\mathrm{sinc}(t/T) \triangleq \sin(\pi t/T)/(\pi t/T)$ with the signal samples. Specifically, convolving a sampled signal $x(t_n)$ with $\mathrm{sinc}[(t_n - t_0)/T]$ ``evaluates'' the signal at an arbitrary continuous time $t_0$. The sinc function is the impulse response of the ideal lowpass filter which cuts off at half the sampling rate.

In practice, the interpolating sinc function must be windowed to a finite duration. This means the associated lowpass filter must be granted a ``transition band'' in which its frequency response is allowed to ``roll off'' to zero at half the sampling rate. The interpolation quality in the ``pass band'' can always be made perfect to within the resolution of human hearing by choosing a sufficiently large product of window-length times transition-bandwidth. Given ``audibly perfect'' quality in the pass band, increasing the transition bandwidth reduces the computational expense of the interpolation; in fact, the expense is approximately inversely proportional to the transition bandwidth. This is one reason why oversampling at rates higher than twice the highest audio frequency is helpful. For example, at a $44.1$ kHz sampling rate, the transition bandwidth above the nominal audio upper limit of $20$ kHz is only $2.05$ kHz, while at a $48$ kHz sampling rate (used in DAT machines) the guard band is $4$ kHz wide, nearly double. Since the required window length (impulse response duration) varies inversely with the provided transition bandwidth, we see that increasing the sampling rate by less than ten percent reduces the filter expense by almost fifty percent.
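The guard-band arithmetic in this example can be reproduced directly, assuming (as stated above) that filter cost is inversely proportional to transition bandwidth.

```python
audio_limit_khz = 20.0                    # nominal upper limit of hearing

def guard_band_khz(fs_khz):
    """Transition band between the audio limit and half the sampling rate."""
    return fs_khz / 2.0 - audio_limit_khz

guard_cd = guard_band_khz(44.1)           # about 2.05 kHz
guard_dat = guard_band_khz(48.0)          # 4 kHz
rate_increase = 48.0 / 44.1 - 1.0         # fractional increase in sampling rate
# Assuming filter cost is inversely proportional to transition bandwidth:
cost_reduction = 1.0 - guard_cd / guard_dat
print(f"{guard_cd:.2f} kHz vs {guard_dat:.2f} kHz; "
      f"rate +{100 * rate_increase:.1f}%, cost -{100 * cost_reduction:.1f}%")
```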

Windowed-sinc interpolation is described further in §4.4. Many more techniques for digital resampling and delay-line interpolation are reviewed in [267].
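A minimal windowed-sinc interpolator is sketched below. The text does not prescribe a particular window; a Hann window with a half-width of 32 samples is assumed here for brevity, and the function name `windowed_sinc_interp` is hypothetical. At integer times only the center tap is nonzero, so the original sample is returned.

```python
import math

def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x)."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def windowed_sinc_interp(samples, t, half_width=32):
    """Estimate the underlying continuous signal at time t (in samples, may be fractional).

    The ideal sinc kernel is truncated to |t - n| < half_width and tapered
    with a Hann window, which is zero at the truncation points.
    """
    n0 = math.floor(t)
    total = 0.0
    for n in range(n0 - half_width + 1, n0 + half_width + 1):
        if 0 <= n < len(samples):
            d = t - n
            window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))
            total += samples[n] * sinc(d) * window
    return total

freq = 0.1                                   # cycles per sample, well below 0.5
x = [math.sin(2 * math.pi * freq * n) for n in range(200)]
t0 = 100.37                                  # a time between samples
estimate = windowed_sinc_interp(x, t0)
exact = math.sin(2 * math.pi * freq * t0)
print(f"estimate {estimate:.6f}, exact {exact:.6f}")
```

A longer window (larger `half_width`) narrows the transition band at a proportional increase in computation, which is the trade-off described above.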


Relation to the Finite Difference Recursion

In this section we will show that the digital waveguide simulation technique is equivalent to the recursion produced by the finite difference approximation (FDA) applied to the wave equation [442, pp. 430-431]. A more detailed derivation, with examples and exploration of implications, appears in Appendix E. Recall from (C.6) that the time update recursion for the ideal string digitized via the FDA is given by

$$y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m) \qquad \text{(C.18)}$$

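To compare this with the waveguide description, we substitute the traveling-wave decomposition $y(n,m) = y^+(n-m) + y^-(n+m)$ into the right-hand side of the recursion:

$$\begin{aligned}
y(n+1,m) &= y(n,m+1) + y(n,m-1) - y(n-1,m)\\
&= y^+(n-m-1) + y^-(n+m+1) + y^+(n-m+1) + y^-(n+m-1) - y^+(n-m-1) - y^-(n+m-1)\\
&= y^+(n-m+1) + y^-(n+m+1)\\
&= y^+[(n+1)-m] + y^-[(n+1)+m]\\
&= y(n+1,m).
\end{aligned} \qquad \text{(C.19)}$$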

Thus, we obtain the result that the FDA recursion is also exact in the lossless case, because it is equivalent to the digital waveguide method which we know is exact at the sampling points. This is surprising since the FDA introduces artificial damping when applied to lumped, mass-spring systems, as discussed earlier.

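The last identity above can be rewritten as

$$y(n+1,m) = y^+[n-(m-1)] + y^-[n+(m+1)] \qquad \text{(C.20)}$$

which says the displacement at time $n+1$, position $m$, is the superposition of the right-going and left-going traveling-wave components at positions $m-1$ and $m+1$, respectively, from time $n$.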
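The equivalence can also be confirmed numerically. The Python sketch below assumes a periodic (ring-shaped) string so that boundaries play no role, seeds the recursion $y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m)$ with two time slices taken from a digital waveguide with arbitrary random traveling-wave samples, and compares the two for 40 steps; the ring length and step count are arbitrary.

```python
import random

M = 16                                             # ring of M spatial samples (periodic string)
rng = random.Random(0)
f_plus = [rng.uniform(-1.0, 1.0) for _ in range(M)]    # arbitrary right-going samples, period M
f_minus = [rng.uniform(-1.0, 1.0) for _ in range(M)]   # arbitrary left-going samples, period M

def waveguide(n, m):
    """Digital waveguide output y(n, m) = y+(n - m) + y-(n + m) on the ring."""
    return f_plus[(n - m) % M] + f_minus[(n + m) % M]

# Seed the FDA recursion with two consecutive time slices from the waveguide.
y_prev = [waveguide(-1, m) for m in range(M)]
y_curr = [waveguide(0, m) for m in range(M)]
max_err = 0.0
for n in range(40):
    # FDA update with periodic wrap-around: y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m)
    y_next = [y_curr[(m + 1) % M] + y_curr[(m - 1) % M] - y_prev[m] for m in range(M)]
    max_err = max(max_err, max(abs(y_next[m] - waveguide(n + 1, m)) for m in range(M)))
    y_prev, y_curr = y_curr, y_next
print(f"max |FDA - waveguide| over 40 steps: {max_err:.1e}")
```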

This result extends readily to the digital waveguide mesh (§C.14), which is essentially a lattice-work of digital waveguides for simulating membranes and volumes. The equivalence is important in higher dimensions because the finite-difference model requires fewer computations per node than the digital waveguide approach.

Even in one dimension, the digital waveguide and finite-difference methods have unique advantages in particular situations, and as a result they are often combined to form a hybrid traveling-wave/physical-variable simulation [351,352,222,124,123,224,263,223].

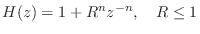

In such hybrid simulations, the traveling-wave variables are called ``W variables'' (where `W' stands for ``Wave''), while the physical variables are called ``K variables'' (where `K' stands for ``Kirchhoff''). Each K variable, such as displacement $y(n,m)$ on a vibrating string, can be regarded as the sum of two traveling-wave components, or W variables:

$$y(n,m) = y^+(n-m) + y^-(n+m).$$

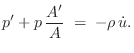

A Lossy 1D Wave Equation

In any real vibrating string, there are energy losses due to yielding terminations, drag by the surrounding air, and internal friction within the string. While losses in solids generally vary in a complicated way with frequency, they can usually be well approximated by a small number of odd-order terms added to the wave equation. In the simplest case, force is directly proportional to transverse string velocity, independent of frequency. If this proportionality constant is $\mu$, we obtain the modified wave equation

$$K y'' = \epsilon \ddot y + \mu \dot y. \qquad \text{(C.21)}$$

Thus, the wave equation has been extended by a ``first-order'' term, i.e., a term proportional to the first derivative of $y$ with respect to time.

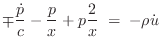

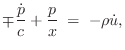

Setting

![]() in the wave equation to find the relationship

between temporal and spatial frequencies in the eigensolution, the wave

equation becomes

in the wave equation to find the relationship

between temporal and spatial frequencies in the eigensolution, the wave

equation becomes

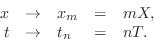

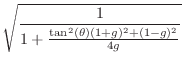

|

|||

|

where

|

(C.22) |

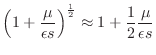

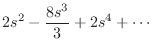

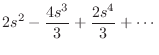

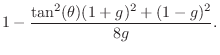

by the binomial theorem. For this small-loss approximation, we obtain the following relationship between temporal and spatial frequency:

| (C.23) |
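The quality of the small-loss approximation can be examined numerically. The parameter values below are hypothetical; `v_exact` solves $Kv^2 = \epsilon s^2 + \mu s$ exactly (one root, principal square-root branch) and `v_small_loss` uses the first-order binomial approximation $v \approx (s + \mu/(2\epsilon))/c$, both evaluated at $s = j\omega$.

```python
import cmath
import math

K, eps, mu = 100.0, 0.01, 0.5       # hypothetical tension (N), mass density (kg/m), damping (kg/(m s))
c = math.sqrt(K / eps)              # lossless wave speed (100 m/s here)

def v_exact(s):
    """One root of K v^2 = eps s^2 + mu s (principal square-root branch)."""
    return (s / c) * cmath.sqrt(1 + mu / (eps * s))

def v_small_loss(s):
    """Matching root of the first-order (binomial) small-loss approximation."""
    return (s + mu / (2 * eps)) / c

def rel_error(omega):
    s = 1j * omega
    return abs(v_exact(s) - v_small_loss(s)) / abs(v_exact(s))

for omega in (10.0, 100.0, 2 * math.pi * 440.0):
    print(f"omega = {omega:8.1f} rad/s: relative error {rel_error(omega):.1e}")

# Real part of v = attenuation in nepers per meter; small-loss value is mu / (2 eps c).
attenuation_440 = v_exact(1j * 2 * math.pi * 440.0).real
```

With these values the approximation is excellent at audio frequencies, where $\mu/(\epsilon\omega) \ll 1$, and poor at very low frequencies.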

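The eigensolution is then

$$e^{st+vx} = \exp\left[st \pm \left(s + \frac{\mu}{2\epsilon}\right)\frac{x}{c}\right]. \qquad \text{(C.24)}$$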

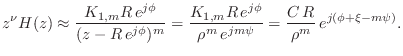

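The right-going part of the eigensolution is

$$e^{-\frac{\mu}{2\epsilon}\frac{x}{c}}\, e^{s(t - x/c)} \qquad \text{(C.25)}$$

which decays exponentially in the direction of propagation, and the left-going solution is

$$e^{\frac{\mu}{2\epsilon}\frac{x}{c}}\, e^{s(t + x/c)} \qquad \text{(C.26)}$$

which also decays exponentially in the direction of travel.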

Setting $s = j\omega$ and using superposition to build up arbitrary traveling wave shapes, we obtain the general class of solutions

$$y(t,x) = e^{-\frac{\mu}{2\epsilon}\frac{x}{c}}\, y_r\left(t - \frac{x}{c}\right) + e^{\frac{\mu}{2\epsilon}\frac{x}{c}}\, y_l\left(t + \frac{x}{c}\right). \qquad \text{(C.27)}$$

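Sampling these exponentially decaying traveling waves at intervals of $T$ seconds (or $X = cT$ meters) gives

$$\begin{aligned}
y(t_n,x_m) &= e^{-\frac{\mu}{2\epsilon}\frac{x_m}{c}}\, y_r\left(t_n - \frac{x_m}{c}\right) + e^{\frac{\mu}{2\epsilon}\frac{x_m}{c}}\, y_l\left(t_n + \frac{x_m}{c}\right)\\
&= e^{-\frac{\mu T}{2\epsilon}m}\, y_r\left[(n-m)T\right] + e^{\frac{\mu T}{2\epsilon}m}\, y_l\left[(n+m)T\right]\\
&= g^{m}\, y^+(n-m) + g^{-m}\, y^-(n+m),
\end{aligned}$$

where $g \triangleq e^{-\mu T/(2\epsilon)}$.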
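A numerical check that sampling Eq.(C.27) gives $g^m y^+(n-m) + g^{-m} y^-(n+m)$ with $g = e^{-\mu T/(2\epsilon)}$ is sketched below. The wave speed, loss parameters, and test shapes are hypothetical.

```python
import math

fs = 44100.0                  # sampling rate in Hz
T = 1.0 / fs
c = 100.0                     # hypothetical lossless wave speed in m/s
X = c * T                     # spatial sampling interval in meters
eps, mu = 0.01, 0.5           # hypothetical mass density (kg/m) and damping coefficient
a = mu / (2.0 * eps)          # decay rate mu / (2 eps) in 1/s
g = math.exp(-a * T)          # loss factor per sampling interval

def y_r(t):                   # arbitrary right-going shape
    return math.sin(2 * math.pi * 300.0 * t)

def y_l(t):                   # arbitrary left-going shape
    return math.cos(2 * math.pi * 700.0 * t)

def y_continuous(t, x):       # decaying traveling-wave solution, Eq. (C.27)
    return math.exp(-a * x / c) * y_r(t - x / c) + math.exp(a * x / c) * y_l(t + x / c)

def y_waveguide(n, m):        # g^m y+(n-m) + g^(-m) y-(n+m)
    return g ** m * y_r((n - m) * T) + g ** (-m) * y_l((n + m) * T)

max_err = max(abs(y_continuous(n * T, m * X) - y_waveguide(n, m))
              for n in range(100) for m in range(50))
print(f"g = {g:.6f}, max error {max_err:.1e}")
```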

The simulation diagram for the lossy digital waveguide is shown in Fig.C.5.

Again the discrete-time simulation of the decaying traveling-wave solution is an exact implementation of the continuous-time solution at the sampling positions and instants, even though losses are admitted in the wave equation. Note also that the losses which are distributed in the continuous solution have been consolidated, or lumped, at discrete intervals of $cT$ meters in the simulation. The loss factor $g = e^{-\mu T/(2\epsilon)}$ summarizes the distributed loss incurred in one sampling interval. The lumping of distributed losses does not introduce an approximation error at the sampling points. Furthermore, bandlimited interpolation can yield arbitrarily accurate reconstruction between samples. The only restriction is again that all initial conditions and excitations be bandlimited to below half the sampling rate.

Loss Consolidation

In many applications, it is possible to realize vast computational savings in digital waveguide models by commuting losses out of unobserved and undriven sections of the medium and consolidating them at a minimum number of points. Because the digital simulation is linear and time invariant (given constant medium parameters $K$, $\epsilon$, $\mu$), and because linear, time-invariant elements commute, the diagram in Fig.C.6 is exactly equivalent (to within numerical precision) to the previous diagram in Fig.C.5.
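For a single rail, the commutation argument can be sketched as follows: $M$ sections, each a one-sample delay followed by a gain $g$, produce the same output as an $M$-sample delay followed by one gain $g^M$ (up to floating-point rounding). The function names, line length, and loss value are hypothetical.

```python
import random

def distributed_loss(x, M, g):
    """M cascaded sections, each a one-sample delay followed by a gain g."""
    state = [0.0] * M                 # state[k] = signal after k+1 delay-and-gain sections
    out = []
    for sample in x:
        out.append(state[-1])         # output of the last section at this tick
        state = [g * sample] + [g * s for s in state[:-1]]   # every value advances one section
    return out

def consolidated_loss(x, M, g):
    """The same M-sample delay line with all M gains lumped into one factor g**M."""
    delay = [0.0] * M
    gain = g ** M
    out = []
    for sample in x:
        out.append(gain * delay[-1])
        delay = [sample] + delay[:-1]
    return out

rng = random.Random(0)
x = [rng.uniform(-1, 1) for _ in range(200)]     # arbitrary excitation
M, g = 25, 0.999                                  # hypothetical line length and per-sample loss
y1 = distributed_loss(x, M, g)
y2 = consolidated_loss(x, M, g)
max_diff = max(abs(a - b) for a, b in zip(y1, y2))
```

The consolidated form costs one multiply per sample instead of $M$, which is the computational saving described above.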

![Lossy digital waveguide figure](http://www.dsprelated.com/josimages_new/pasp/img3343.png)

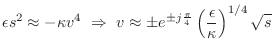

Frequency-Dependent Losses

In nearly all natural wave phenomena, losses increase with frequency. Distributed losses due to air drag and internal bulk losses in the string tend to increase monotonically with frequency. Similarly, air absorption increases with frequency, adding loss for sound waves in acoustic tubes or open air [318].

Perhaps the simplest-looking modification to Eq.(C.21) yielding frequency-dependent damping is to add a third-order time-derivative term [392]:

$$K y'' = \epsilon \ddot y + \mu \dot y + \mu_3 \frac{\partial^3 y}{\partial t^3}. \qquad \text{(C.28)}$$

While this model has been successful in practice [77], it turns out to go unstable at very high sampling rates. The technical term for this problem is that the PDE is ill posed [45].

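A well posed replacement for Eq.(C.28) is given by

$$K y'' = \epsilon \ddot y + \mu \dot y + \mu_3\, \dot y'' \qquad \text{(C.29)}$$

in which the third-order partial derivative with respect to time, $\partial^3 y/\partial t^3$, has been replaced by a third-order mixed partial derivative, twice with respect to $x$ and once with respect to $t$.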

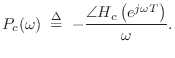

The solution of a lossy wave equation containing higher odd-order

derivatives with respect to time yields traveling waves which

propagate with frequency-dependent attenuation. Instead of scalar

factors ![]() distributed throughout the diagram as in Fig.C.5,

each

distributed throughout the diagram as in Fig.C.5,

each ![]() factor becomes a lowpass filter having some

frequency-response per sample denoted by

factor becomes a lowpass filter having some

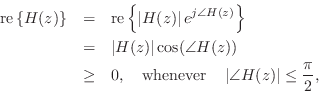

frequency-response per sample denoted by ![]() . Because

propagation is passive, we will always have

. Because

propagation is passive, we will always have

![]() .

.

More specifically, as shown in [392], odd-order partial derivatives with respect to time in the wave equation of the form

In view of the above, we see that we can add odd-order time derivatives to the wave equation to approximate experimentally observed frequency-dependent damping characteristics in vibrating strings [73]. However, we then have the problem that such wave equations are ill posed, leading to possible stability failures at high sampling rates. As a result, it is generally preferable to use mixed derivatives, as in Eq.(C.29), and try to achieve realistic damping using higher-order spatial derivatives instead.

Well Posed PDEs for Modeling Damped Strings

A large class of well posed PDEs is given by [45]

Thus, to the ideal string wave equation Eq.

Digital Filter Models of Damped Strings

In an efficient digital simulation, lumped loss factors of the form $G^k(\omega)$ are approximated by a rational frequency response $\hat G_k(e^{j\omega T})$. In general, the coefficients of the optimal rational loss filter are obtained by minimizing $\left\|G^k(\omega) - \hat G_k(e^{j\omega T})\right\|$ with respect to the filter coefficients or the poles and zeros of the filter. To avoid introducing frequency-dependent delay, the loss filter should be a zero-phase, finite-impulse-response (FIR) filter [362].

Restriction to zero phase requires the impulse response $\hat g_k(n)$ to be finite in length (i.e., an FIR filter) and symmetric about time zero, i.e., $\hat g_k(-n) = \hat g_k(n)$. In most implementations, the zero-phase FIR filter can be converted into a causal, linear-phase filter by reducing an adjacent delay line by half of the impulse-response duration.
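The sketch below illustrates both points with a hypothetical 3-tap symmetric loss filter $[b_1, b_0, b_1]$: its frequency response $b_0 + 2b_1\cos\omega$ is real (zero phase) and at most 1 in magnitude (passive), and shortening the adjacent delay line by one sample (half the 3-tap duration) lets a causal version of the filter produce the same output as the ideal noncausal one. The tap values and function names are illustrative assumptions.

```python
import cmath
import math

b0, b1 = 0.5, 0.24                       # hypothetical taps; impulse response symmetric about n = 0
h = {-1: b1, 0: b0, 1: b1}               # zero-phase FIR loss filter, indexed by time

def G(w):
    """Frequency response at w radians per sample; equals b0 + 2 b1 cos(w) here."""
    return sum(coef * cmath.exp(-1j * w * n) for n, coef in h.items())

freqs = [math.pi * k / 64 for k in range(65)]
max_imag = max(abs(G(w).imag) for w in freqs)    # ~0: no phase shift at any frequency
max_mag = max(abs(G(w)) for w in freqs)          # <= 1: passive (0.98 at dc here)

def tap(x, n):                                    # x[n], taken as zero outside the signal
    return x[n] if 0 <= n < len(x) else 0.0

def delay_then_zero_phase(x, N):
    """Reference: N-sample delay line followed by the noncausal zero-phase filter."""
    return [sum(coef * tap(x, n - N - k) for k, coef in h.items()) for n in range(len(x))]

def shortened_delay_then_causal(x, N):
    """Practical form: delay shortened by one sample, then the causal taps [b1, b0, b1]."""
    causal = [b1, b0, b1]
    return [sum(coef * tap(x, n - (N - 1) - k) for k, coef in enumerate(causal))
            for n in range(len(x))]

x = [1.0] + [0.0] * 19 + [0.5, -0.25] + [0.0] * 10    # arbitrary test input
out_ref = delay_then_zero_phase(x, 8)
out_causal = shortened_delay_then_causal(x, 8)
max_diff = max(abs(a - b) for a, b in zip(out_ref, out_causal))
```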

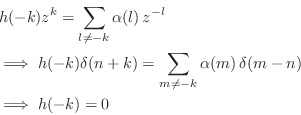

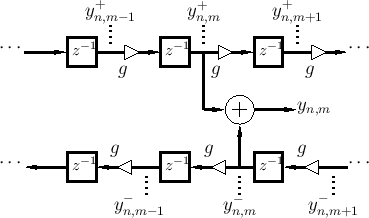

Lossy Finite Difference Recursion

We will now derive a finite-difference model in terms of string displacement samples which correspond to the lossy digital waveguide model of Fig.C.5. This derivation generalizes the lossless case considered in §C.4.3.

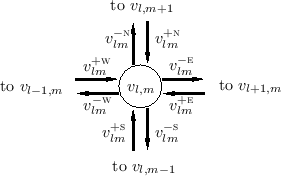

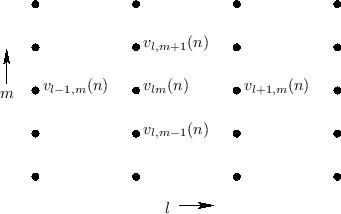

Figure C.7 depicts a digital waveguide section once again in ``physical canonical form,'' as shown earlier in Fig.C.5, and introduces a doubly indexed notation for greater clarity in the derivation below [442,222,124,123].

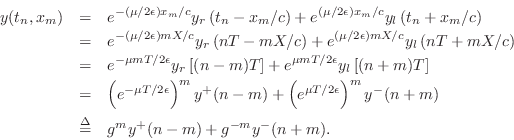

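Referring to Fig.C.7, we have the following time-update relations:

$$\begin{aligned}
y^+_{n+1,m} &= g\, y^+_{n,m-1} = g\left(y_{n,m-1} - y^-_{n,m-1}\right)\\
y^-_{n+1,m} &= g\, y^-_{n,m+1} = g\left(y_{n,m+1} - y^+_{n,m+1}\right)
\end{aligned}$$

Adding these equations gives

$$\begin{aligned}
y_{n+1,m} &= g\left(y_{n,m-1} + y_{n,m+1}\right) - g\left(y^-_{n,m-1} + y^+_{n,m+1}\right)\\
&= g\left(y_{n,m-1} + y_{n,m+1}\right) - g^2\, y_{n-1,m},
\end{aligned}$$

where the last step uses $y^-_{n,m-1} = g\, y^-_{n-1,m}$ and $y^+_{n,m+1} = g\, y^+_{n-1,m}$.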

This is now in the form of the finite-difference time-domain (FDTD) scheme analyzed in [222]:
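The lossy update $y_{n+1,m} = g(y_{n,m-1} + y_{n,m+1}) - g^2 y_{n-1,m}$, obtained by adding the two time-update relations for Fig.C.7, can be checked against a lossy digital waveguide. This Python sketch assumes a periodic (ring) string, arbitrary random initial rail contents, and a hypothetical loss factor $g = 0.97$.

```python
import random

M, g, steps = 16, 0.97, 40
rng = random.Random(1)
plus = [rng.uniform(-1, 1) for _ in range(M)]    # y+ at time n = -1 (arbitrary)
minus = [rng.uniform(-1, 1) for _ in range(M)]   # y- at time n = -1 (arbitrary)

def wg_step(plus, minus):
    """One lossy waveguide step on a ring: y+_{n+1,m} = g y+_{n,m-1}, y-_{n+1,m} = g y-_{n,m+1}."""
    new_plus = [g * plus[(m - 1) % M] for m in range(M)]
    new_minus = [g * minus[(m + 1) % M] for m in range(M)]
    return new_plus, new_minus

y_prev = [p + q for p, q in zip(plus, minus)]    # y_{-1,m}
plus, minus = wg_step(plus, minus)
y_curr = [p + q for p, q in zip(plus, minus)]    # y_{0,m}
max_err = 0.0
for _ in range(steps):
    # lossy FDTD update: y_{n+1,m} = g (y_{n,m-1} + y_{n,m+1}) - g^2 y_{n-1,m}
    y_next = [g * (y_curr[(m - 1) % M] + y_curr[(m + 1) % M]) - g * g * y_prev[m]
              for m in range(M)]
    plus, minus = wg_step(plus, minus)
    y_wg = [p + q for p, q in zip(plus, minus)]
    max_err = max(max_err, max(abs(a - b) for a, b in zip(y_next, y_wg)))
    y_prev, y_curr = y_curr, y_next
print(f"max |FDTD - waveguide| over {steps} steps: {max_err:.1e}")
```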

Frequency-Dependent Losses

The preceding derivation generalizes immediately to frequency-dependent losses. First imagine each $g$ in Fig.C.7 to be replaced by a filter $G(z)$ with impulse response $g$, where for passivity we require $\left|G(e^{j\omega T})\right| \le 1$. Following the same derivation as above, we obtain

![\begin{eqnarray*}

y^{+}_{n+1,m}&=& g\ast y^{+}_{n,m-1}\;=\; g\ast (y_{n,m-1}- y^...

...

&=& g\ast \left[(y_{n,m-1}+y_{n,m+1}) - g\ast y_{n-1,m}\right]

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img3373.png)

where $\ast$ denotes convolution (in the time dimension only). Define filtered node variables by

$$y^f_{n,m} \triangleq g \ast y_{n,m}.$$

Then the frequency-dependent FDTD scheme is simply

$$y_{n+1,m} = y^f_{n,m-1} + y^f_{n,m+1} - g \ast y^f_{n-1,m}.$$

The frequency-dependent generalization of the FDTD scheme described in this section extends readily to the digital waveguide mesh. See §C.14.5 for the outline of the derivation.

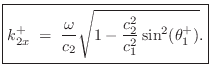

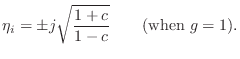

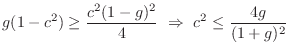

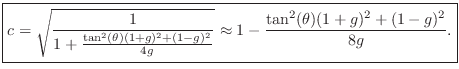

The Dispersive 1D Wave Equation

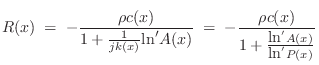

In the ideal vibrating string, the only restoring force for transverse displacement comes from the string tension (§C.1 above); specifically, the transverse restoring force is equal to the net transverse component of the axial string tension. Consider in place of the ideal string a bundle of ideal strings, such as a stranded cable. When the cable is bent, there is now a new restoring force arising from some of the fibers being compressed and others being stretched by the bending. This force sums with that due to string tension. Thus, stiffness in a vibrating string introduces a new restoring force proportional to bending angle. It is important to note that string stiffness is a linear phenomenon resulting from the finite diameter of the string.

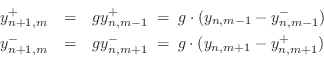

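In typical treatments, bending stiffness adds a new term to the wave equation that is proportional to the fourth spatial derivative of string displacement:

$$ \epsilon\,\ddot y = K\,y'' - \kappa\,y'''' \qquad \text{(C.32)} $$

where $K$ is the string tension, $\epsilon$ is the linear mass density, and $\kappa$ is the moment constant, which sets the strength of the bending stiffness.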

To solve the stiff wave equation Eq.(C.32), we may set $y(t,x)=e^{st+vx}$ to get

$$ \epsilon\,s^2 = K\,v^2 - \kappa\,v^4. $$

At very high frequencies, or when the tension $K$ is negligible relative to $\kappa v^2$, we obtain the ideal bar (or rod) approximation:

$$ \epsilon\,\ddot y \approx -\kappa\,y''''. $$

At intermediate frequencies, between the ideal string and the ideal bar,

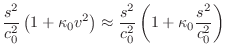

the stiffness contribution can be treated as a correction term

[95]. This is the region of most practical interest because

it is the principal operating region for strings, such as piano strings,

whose stiffness has audible consequences (an inharmonic, stretched overtone

series). Assuming

![]() ,

,

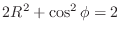

|

|||

|

|||

|

Substituting for

![$\displaystyle e^{st+vx} = \exp{\left\{{s\left[t\pm \frac{x}{c_0}\left(

1+\frac{1}{2}\kappa_0 \frac{s^2}{c_0^2} \right)\right]}\right\}}.

$](http://www.dsprelated.com/josimages_new/pasp/img3398.png)

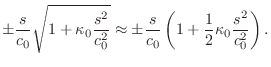

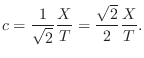

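Since the temporal and spatial sampling intervals are related by $X = cT$, this must generalize to

$$ T \;\to\; T_0\left(1+\frac{1}{2}\kappa_0\frac{s^2}{c_0^2}\right), $$

where $T_0 \triangleq X/c_0$ is the size of a unit delay in the absence of stiffness. Thus, a unit delay $z^{-1}=e^{-sT_0}$ may be replaced by

$$ z^{-1} \;\to\; \exp\left\{-sT_0\left(1+\frac{1}{2}\kappa_0\frac{s^2}{c_0^2}\right)\right\}. $$

With $s=j\omega$, the exponent becomes $-j\omega T_0\left(1-\frac{1}{2}\kappa_0\omega^2/c_0^2\right)$: a pure phase shift whose delay decreases as frequency increases, so each unit delay becomes an allpass filter.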
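To see the size of the stiffness correction, the sketch below evaluates, for hypothetical string parameters, the exact string-like root of $\epsilon s^2 = K v^2 - \kappa v^4$ at $s=j\omega$ and the first-order approximation. Both are expressed as the delay of one spatial sample in units of $T_0$. The exact root is computed in an algebraically equivalent form that avoids cancellation when $\kappa$ is small.

```python
import math

# Illustrative (hypothetical) string parameters, not taken from the text.
K = 600.0       # tension [N]
EPS = 0.006     # linear mass density [kg/m]
KAPPA = 2e-4    # moment constant [N m^2]
C0 = math.sqrt(K / EPS)   # wave velocity without stiffness [m/s]
KAPPA0 = KAPPA / K


def delay_exact(f):
    """Delay of one spatial sample relative to T0 = X/c0 at frequency f [Hz].

    With s = j*w, eps*s^2 = K*v^2 - kappa*v^4 has the string-like root v = j*beta,
    beta^2 = 2*eps*w^2 / (sqrt(K^2 + 4*kappa*eps*w^2) + K)   (cancellation-free form).
    The delay of X = c0*T0 metres is beta*X/w seconds, i.e. beta*c0/w in units of T0.
    """
    w = 2.0 * math.pi * f
    beta = w * math.sqrt(2.0 * EPS / (math.sqrt(K * K + 4.0 * KAPPA * EPS * w * w) + K))
    return beta * C0 / w


def delay_approx(f):
    """First-order stiffness correction: 1 - (1/2) kappa0 w^2 / c0^2."""
    w = 2.0 * math.pi * f
    return 1.0 - 0.5 * KAPPA0 * w * w / (C0 * C0)


for f in (100.0, 1000.0, 5000.0, 10000.0):
    print(f"{f:7.0f} Hz  exact {delay_exact(f):.6f}  approx {delay_approx(f):.6f}")
```

The delay falls below one sample as frequency rises (high frequencies travel faster), and the first-order formula tracks the exact root closely until the correction itself becomes appreciable.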

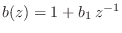

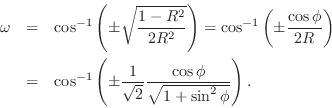

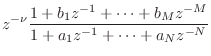

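The general order-$N$ allpass filter is given by [449]

$$ H_a(z) = \frac{\overline{a_N} + \overline{a_{N-1}}\,z^{-1} + \cdots + \overline{a_1}\,z^{-(N-1)} + z^{-N}}{1 + a_1 z^{-1} + \cdots + a_{N-1} z^{-(N-1)} + a_N z^{-N}}. $$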
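The allpass property can be checked numerically. This sketch assumes real coefficients, so conjugating the reversed coefficients has no effect, and uses hypothetical values $a_1=-0.5$, $a_2=0.2$. It evaluates $H_a(e^{j\omega})$ and prints its magnitude, which should be 1 at every frequency.

```python
import cmath


def allpass_response(a, w):
    """H_a(e^{jw}) for the order-N allpass with real coefficients a = [a1, ..., aN]:
    denominator 1 + a1 z^-1 + ... + aN z^-N, numerator aN + ... + a1 z^-(N-1) + z^-N."""
    den = [1.0] + list(a)
    num = den[::-1]                      # reversed (and, for real a, conjugated) coefficients
    zinv = cmath.exp(-1j * w)
    numerator = sum(c * zinv ** k for k, c in enumerate(num))
    denominator = sum(c * zinv ** k for k, c in enumerate(den))
    return numerator / denominator


a = [-0.5, 0.2]                          # hypothetical stable 2nd-order coefficients
for w in (0.1, 1.0, 2.5):
    print(f"w={w}: |H| = {abs(allpass_response(a, w)):.12f}")
```

Setting the last coefficient $a_N$ to zero, e.g. `a = [0.3, 0.0]`, makes the leading numerator tap zero, so $H_a(\infty)=0$ and a pure unit delay can be factored out of the filter.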

For computability of the string simulation in the presence of scattering junctions, there must be at least one sample of pure delay along each uniform section of string. This means that for at least one allpass filter in Fig.C.8, we must have $H_a(\infty)=0$, which implies that $H_a(z)$ can be factored as $z^{-1}H'_a(z)$. In a systolic VLSI implementation, it is desirable to have at least one real delay from the input to the output of every allpass filter, in order to be able to pipeline the computation of all of the allpass filters in parallel. Computability can be arranged in practice by deciding on a minimum delay (e.g., corresponding to the wave velocity at a maximum frequency) and using an allpass filter to provide excess delay beyond the minimum.

Because allpass filters are linear and time invariant, they commute like gain factors with other linear, time-invariant components. Fig.C.9 shows a diagram equivalent to Fig.C.8 in which the allpass filters have been commuted and consolidated at two points. For computability in all possible contexts (e.g., when looped on itself), a single sample of delay is pulled out along each rail. The remaining transfer function in the example of Fig.C.9 can be approximated using any allpass filter design technique [1,2,267,272,551].

Alternatively, both gain and dispersion for a stretch of waveguide can be provided by a single filter that can be designed using any general-purpose filter design method that is sensitive to frequency-response phase as well as magnitude; examples include equation-error methods (such as that used in the Matlab invfreqz function, §8.6.4) and Hankel norm methods [177,428,36].

![\includegraphics[scale=0.9]{eps/flstiffstring}](http://www.dsprelated.com/josimages_new/pasp/img3413.png)

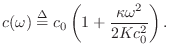

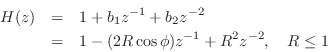

In the case of a lossless, stiff string, if $H_c(z)$ denotes the consolidated allpass transfer function, it can be argued that the filter design technique used should minimize the phase-delay error, where phase delay is defined by [362]

$$ P_c(\omega) \triangleq -\frac{\angle H_c(e^{j\omega T})}{\omega}. \qquad \text{(Phase Delay)} $$

Alternatively, a lumped allpass filter can be designed by minimizing group delay,

$$ D_c(\omega) \triangleq -\frac{d}{d\omega}\angle H_c(e^{j\omega T}). \qquad \text{(Group Delay)} $$

See §9.4.1 for designing allpass filters with a prescribed delay versus frequency. To model stiff strings, the allpass filter must supply a phase delay which decreases as frequency increases. A good approximation may require a fairly high-order filter, adding significantly to the cost of simulation. (For low-pitched piano strings, order 8 allpass filters work well perceptually [1].) To a large extent, the allpass order required for a given error tolerance increases as the number of lumped frequency-dependent delays is increased. Therefore, increased dispersion consolidation is accompanied by larger required allpass filters, unlike the case of resistive losses.
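As a small illustration of the delay definitions above (not the design method of §9.4.1, nor the Faust function mentioned below), the sketch computes the phase delay and group delay, in samples, of a first-order allpass section $H(z) = (a + z^{-1})/(1 + a z^{-1})$. With a hypothetical negative coefficient $a$, the phase delay starts at $(1-a)/(1+a) > 1$ sample at DC and falls toward 1 sample at half the sampling rate, i.e., it decreases with frequency in the way a stiff-string model requires.

```python
import cmath
import math


def response(a, w):
    """Complex frequency response of H(z) = (a + z^-1) / (1 + a z^-1) at z = e^{jw}."""
    zinv = cmath.exp(-1j * w)
    return (a + zinv) / (1 + a * zinv)


def phase(a, w):
    """Unwrapped phase of H(e^{jw}) for |a| < 1: -w + 2*atan2(a sin w, 1 + a cos w)."""
    return -w + 2.0 * math.atan2(a * math.sin(w), 1.0 + a * math.cos(w))


def phase_delay(a, w):
    """Phase delay -phase/w, in samples."""
    return -phase(a, w) / w


def group_delay(a, w, dw=1e-6):
    """Group delay -d(phase)/dw, in samples, by a central difference."""
    return -(phase(a, w + dw) - phase(a, w - dw)) / (2.0 * dw)


a = -0.3   # hypothetical coefficient; a < 0 gives phase delay decreasing with frequency
for w in (0.05, 0.5, 1.5, 3.0):
    print(f"w={w:4.2f}  |H|={abs(response(a, w)):.3f}  "
          f"phase delay={phase_delay(a, w):.4f}  group delay={group_delay(a, w):.4f}")
```

Minimizing error in one of these two curves is the design criterion discussed above; a single first-order section only offers one degree of freedom, which is why higher orders are needed for accurate dispersion.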

The function piano_dispersion_filter in the Faust distribution (in effect.lib) designs and implements an allpass filter modeling the dispersion due to stiffness in a piano string [154,170,368].

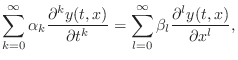

Higher Order Terms

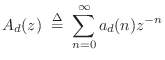

The complete, linear, time-invariant generalization of the lossy, stiff string is described by the differential equation

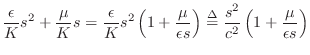

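which, on setting $y(t,x)=e^{st+vx}$, reduces to an algebraic (characteristic) equation relating $s$ and $v$, Eq.(C.34). Solving Eq.(C.34) for $v$ in terms of $s$ gives, as in the stiff-string case, a pair of traveling-wave solutions, Eq.(C.35), whose attenuation and propagation speed may both depend on frequency.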

We see that a large class of wave equations with constant coefficients, of any order, admits a decaying, dispersive, traveling-wave type solution. Even-order time derivatives give rise to frequency-dependent dispersion and odd-order time derivatives correspond to frequency-dependent losses. The corresponding digital simulation of an arbitrarily long (undriven and unobserved) section of medium can be simplified via commutativity to at most two pure delays and at most two linear, time-invariant filters.

Every linear, time-invariant filter can be expressed as a zero-phase filter in series with an allpass filter. The zero-phase part can be interpreted as implementing a frequency-dependent gain (damping in a digital waveguide), and the allpass part can be seen as frequency-dependent delay (dispersion in a digital waveguide).

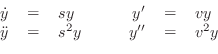

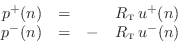

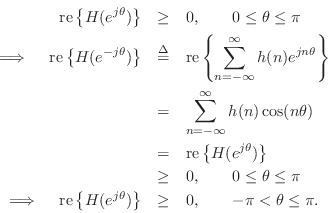

Alternative Wave Variables

We have thus far considered discrete-time simulation of transverse

displacement ![]() in the ideal string. It is equally valid to

choose velocity

in the ideal string. It is equally valid to

choose velocity

![]() , acceleration

, acceleration

![]() , slope

, slope ![]() , or perhaps some other derivative

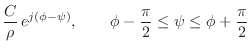

or integral of displacement with respect to time or position.

Conversion between various time derivatives can be carried out by

means integrators and differentiators, as depicted in

Fig.C.10. Since integration and

differentiation are linear operators, and since the traveling

wave arguments are in units of time, the conversion formulas relating

, or perhaps some other derivative

or integral of displacement with respect to time or position.

Conversion between various time derivatives can be carried out by

means integrators and differentiators, as depicted in

Fig.C.10. Since integration and

differentiation are linear operators, and since the traveling

wave arguments are in units of time, the conversion formulas relating

![]() ,

, ![]() , and

, and ![]() hold also for the traveling wave components

hold also for the traveling wave components

![]() .

.

![\includegraphics[scale=0.9]{eps/fwaveconversions}](http://www.dsprelated.com/josimages_new/pasp/img3445.png)

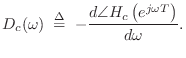

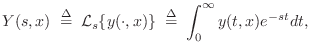

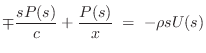

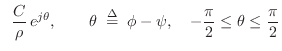

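Differentiation and integration have a simple form in the frequency domain. Denoting the Laplace transform of $y(t,x)$ with respect to time by $Y(s,x)$, and that of $v(t,x)$ by $V(s,x)$, we have, assuming zero initial conditions,

$$ V(s,x) = s\,Y(s,x), \qquad \text{(C.36)} $$

where $s$ is the Laplace-transform (complex frequency) variable. Likewise, denoting the Laplace transform of acceleration $a(t,x)$ by $A(s,x)$,

$$ A(s,x) = s\,V(s,x) = s^2\,Y(s,x). \qquad \text{(C.37)} $$

Similarly, integration with respect to time corresponds to division by $s$; for example, $Y(s,x) = V(s,x)/s$.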

In discrete time, integration and differentiation can be accomplished using digital filters [362]. Commonly used first-order approximations are shown in Fig.C.12.

![\includegraphics[width=\twidth]{eps/fdigitaldiffint}](http://www.dsprelated.com/josimages_new/pasp/img3450.png)

If discrete-time acceleration $a_d(n)$ is defined as the sampled version of continuous-time acceleration, i.e., $a_d(n) \triangleq a(nT,x)$ (for some fixed continuous position $x$, which we suppress for simplicity of notation), then the frequency-domain form is given by the $z$ transform [485]:

$$ A_d(z) \triangleq \sum_{n=-\infty}^{\infty} a_d(n)\,z^{-n}. \qquad \text{(C.38)} $$

In the frequency domain for discrete-time systems, the first-order approximate conversions appear as shown in Fig.C.13.

![\includegraphics[scale=0.6]{eps/ffddigitaldiffint}](http://www.dsprelated.com/josimages_new/pasp/img3454.png)

The $z$ transform plays the role of the Laplace transform for discrete-time systems. Setting $z=e^{sT}$, it can be seen as a sampled Laplace transform (divided by $T$), where the sampling is carried out by halting the limit of the rectangle width at $T$ in the definition of a Riemann integral for the Laplace transform. An important difference between the two is that the frequency axis in the Laplace transform is the imaginary axis (the ``$j\omega$ axis''), while the frequency axis in the $z$ plane is on the unit circle $z=e^{j\omega T}$. As one would expect, the frequency axis for discrete-time systems has unique information only between frequencies $-\pi/T$ and $\pi/T$, while the continuous-time frequency axis extends to plus and minus infinity.

These first-order approximations are accurate (though scaled by $T$) at low frequencies relative to half the sampling rate, but they are not ``best'' approximations in any sense other than being most like the definitions of integration and differentiation in continuous time. Much better approximations can be obtained by approaching the problem from a digital filter design viewpoint, as discussed in §8.6.
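The accuracy statement above can be quantified with a few lines of Python. Assuming the backward-difference forms $1-z^{-1}$ (differentiator) and $1/(1-z^{-1})$ (integrator), with the factor $T$ omitted (Fig.C.12 may use a different but similar first-order form), the sketch compares each with its ideal counterpart $sT$ or $1/(sT)$ on the unit circle $z=e^{j\omega T}$.

```python
import cmath
import math


def relative_error_difference(w_T):
    """Relative error of the backward difference 1 - z^-1 versus the ideal
    differentiator s*T = j*w*T, both evaluated at z = e^{j w T}."""
    approx = 1.0 - cmath.exp(-1j * w_T)
    ideal = 1j * w_T
    return abs(approx - ideal) / abs(ideal)


def relative_error_integrator(w_T):
    """Same comparison for the integrator 1/(1 - z^-1) versus 1/(s*T)."""
    approx = 1.0 / (1.0 - cmath.exp(-1j * w_T))
    ideal = 1.0 / (1j * w_T)
    return abs(approx - ideal) / abs(ideal)


for frac in (0.01, 0.1, 0.5, 0.9):      # frequency as a fraction of half the sampling rate
    w_T = frac * math.pi
    print(f"f/(fs/2)={frac:4.2f}  diff err={relative_error_difference(w_T):.3f}"
          f"  int err={relative_error_integrator(w_T):.3f}")
```

The error grows roughly in proportion to frequency at first (about $\omega T/2$) and becomes large near half the sampling rate, which is why higher-order designs are preferred when wideband accuracy matters.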

Spatial Derivatives

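In addition to time derivatives, we may apply any number of spatial derivatives to obtain yet more wave variables to choose from. Since $y(t,x)=y_r(t-x/c)+y_l(t+x/c)$, with $v_r=\dot y_r$ and $v_l=\dot y_l$, the first spatial derivative of string displacement yields slope waves

$$ y'(t,x) \triangleq \frac{\partial}{\partial x}y(t,x) = -\frac{1}{c}\,v_r\!\left(t-\frac{x}{c}\right) + \frac{1}{c}\,v_l\!\left(t+\frac{x}{c}\right), \qquad \text{(C.39)} $$

or, in discrete time,

$$ y'(t_n,x_m) = -\frac{1}{c}\,v^{+}(n-m) + \frac{1}{c}\,v^{-}(n+m). $$

From this we may conclude that

$$ v^{+} = -c\,y'^{+}, \qquad v^{-} = c\,y'^{-}. $$

By the wave equation, curvature waves, $y'' = \ddot y/c^2$, are simply a scaling of acceleration waves, in the case of ideal strings.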

In the field of acoustics, the state of a vibrating string at any instant of time $t_0$ is often specified by the displacement $y(t_0,x)$ and velocity $\dot y(t_0,x)$ for all $x$ [317]. Since velocity is the sum of the traveling velocity waves and displacement is determined by the difference of the traveling velocity waves, viz., from Eq.(C.39),

$$ y(t,x) = \int_0^{x} y'(t,\xi)\,d\xi = -\frac{1}{c}\int_0^{x}\left[v_r(t-\xi/c) - v_l(t+\xi/c)\right]d\xi $$

(taking $y(t,0)=0$, e.g., a rigid termination at $x=0$), the string state can be specified equally well by its left- and right-going traveling velocity waves.

In summary, all traveling-wave variables can be computed from any one, as long as both the left- and right-going component waves are available. Alternatively, any two linearly independent physical variables, such as displacement and velocity, can be used to compute all other wave variables. Wave variable conversions requiring differentiation or integration are relatively expensive since a large-order digital filter is necessary to do it right (§8.6.1). Slope and velocity waves can be computed from each other by simple scaling, and curvature waves are identical to acceleration waves to within a scale factor.

In the absence of factors dictating a specific choice, velocity waves are a good overall choice because (1) it is numerically easier to perform digital integration to get displacement than it is to differentiate displacement to get velocity, and (2) slope waves are immediately computable from velocity waves. Slope waves are important because they are a simple scaling of force waves.

Force Waves

Referring to Fig.C.14, at an arbitrary point $x$ along the string, the vertical force applied at time $t$ to the portion of string to the left of position $x$ by the portion of string to the right of position $x$ is given by

$$ f_l(t,x) = K\sin(\theta) \approx K\tan(\theta) = K\,y'(t,x), \qquad \text{(C.41)} $$

assuming $|y'(t,x)| \ll 1$, where $\theta$ is the angle of the string relative to the horizontal at $x$. Similarly, the vertical force applied by the portion of string to the left of position $x$ to the portion to the right is

$$ f_r(t,x) = -K\sin(\theta) \approx -K\,y'(t,x). \qquad \text{(C.42)} $$

These forces must cancel since a nonzero net force on a massless point would produce infinite acceleration. I.e., we must have $f_l(t,x) + f_r(t,x) \equiv 0$ for all $t$ and $x$.

Vertical force waves propagate along the string like any other transverse wave variable (since they are just slope waves multiplied by tension $K$). We may choose either $f_l$ or $f_r$ as the string force wave variable, one being the negative of the other. It turns out that to make the description for vibrating strings look the same as that for air columns, we have to pick $f_r$, the one that acts to the right. This makes sense intuitively when one considers longitudinal pressure waves in an acoustic tube: a compression wave traveling to the right in the tube pushes the air in front of it and thus acts to the right. We therefore define the force wave variable to be

$$ f(t,x) \triangleq f_r(t,x) = -K\,y'(t,x). \qquad \text{(C.43)} $$

Note that a negative slope pulls up on the segment to the right. At this point, we have not yet considered a traveling-wave decomposition.

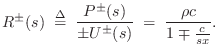

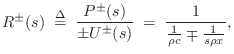

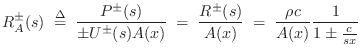

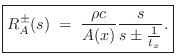

Wave Impedance

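Using the above identities, we have that the force distribution along the string is given in terms of velocity waves by

$$ f(t,x) = -K\,y'(t,x) = \frac{K}{c}\left[v_r(t-x/c) - v_l(t+x/c)\right] = R\left[v_r(t-x/c) - v_l(t+x/c)\right], $$

where

$$ R \triangleq \frac{K}{c} = \sqrt{K\epsilon} = \epsilon c \qquad \text{(C.45)} $$

is the wave impedance of the string.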

The wave impedance can be seen as the geometric mean of the two resistances to displacement: tension (spring force) and mass (inertial force).

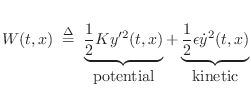

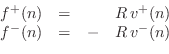

The digitized traveling force-wave components become

$$ f^{+}(n) = R\,v^{+}(n), \qquad f^{-}(n) = -R\,v^{-}(n), \qquad \text{(C.46)} $$

which gives us that the right-going force wave equals the wave impedance times the right-going velocity wave, and the left-going force wave equals minus the wave impedance times the left-going velocity wave. Thus, in a traveling wave, force is always in phase with velocity (considering the minus sign in the left-going case to be associated with the direction of travel rather than a $180$-degree phase shift between force and velocity).
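The chain from velocity waves to slope waves to force waves can be sketched numerically. Using hypothetical string parameters, the code below checks that the three expressions for $R$ in Eq.(C.45) agree, and that scaling the slope waves $y'^{+}=-v^{+}/c$, $y'^{-}=v^{-}/c$ by $-K$ yields $f^{+}=Rv^{+}$ and $f^{-}=-Rv^{-}$.

```python
import math

K = 100.0       # hypothetical tension [N]
EPS = 0.0036    # hypothetical linear mass density [kg/m]
c = math.sqrt(K / EPS)     # wave speed [m/s]
R = K / c                  # wave impedance, Eq.(C.45)


def force_waves_from_velocity(v_plus, v_minus):
    """Velocity waves -> slope waves -> force waves for an ideal string.

    slope: y'^+ = -v^+/c and y'^- = v^-/c;  force: f = -K y' for each component.
    """
    slope_plus, slope_minus = -v_plus / c, v_minus / c
    return -K * slope_plus, -K * slope_minus


v_plus, v_minus = 0.2, -0.05                   # hypothetical velocity waves [m/s]
f_plus, f_minus = force_waves_from_velocity(v_plus, v_minus)
print(R, math.sqrt(K * EPS), EPS * c)          # three equal forms of R
print(f_plus, R * v_plus, f_minus, -R * v_minus)
```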

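In the case of the acoustic tube [317,297], we have the analogous relations

$$ p^{+}(n) = R_{\text{tube}}\,u^{+}(n), \qquad p^{-}(n) = -R_{\text{tube}}\,u^{-}(n), \qquad \text{(C.47)} $$

where $p^{\pm}$ are traveling pressure waves, $u^{\pm}$ are traveling volume-velocity waves, and

$$ R_{\text{tube}} \triangleq \frac{\rho c}{A} \qquad \text{(C.48)} $$

is the wave impedance of the tube, with $\rho$ the mass density of air, $c$ the speed of sound, and $A$ the cross-sectional area of the tube.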

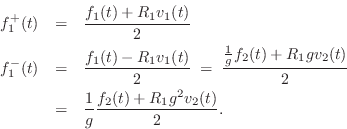

State Conversions

In §C.3.6, an arbitrary string state was converted to traveling displacement-wave components to show that the traveling-wave representation is complete, i.e., that any physical string state can be expressed as a pair of traveling-wave components. In this section, we revisit this topic using force and velocity waves.

By definition of the traveling-wave decomposition, we have

$$ f = f^{+} + f^{-}, \qquad v = v^{+} + v^{-}. $$

Using Eq.(C.46), we can eliminate $v^{+}$ and $v^{-}$, giving, in matrix form,

$$ \begin{bmatrix} f \\ v \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1/R & -1/R \end{bmatrix} \begin{bmatrix} f^{+} \\ f^{-} \end{bmatrix}. $$

Similarly, eliminating $f^{+}$ and $f^{-}$ gives

$$ \begin{bmatrix} f \\ v \end{bmatrix} = \begin{bmatrix} R & -R \\ 1 & 1 \end{bmatrix} \begin{bmatrix} v^{+} \\ v^{-} \end{bmatrix}. $$

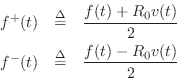

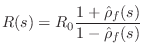

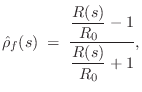

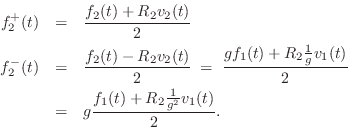

Carrying out the inversion to obtain force waves $(f^{+},f^{-})$ from $(f,v)$ yields

$$ \begin{bmatrix} f^{+} \\ f^{-} \end{bmatrix} = \begin{bmatrix} 1/2 & R/2 \\ 1/2 & -R/2 \end{bmatrix} \begin{bmatrix} f \\ v \end{bmatrix} = \begin{bmatrix} \frac{f+Rv}{2} \\ \frac{f-Rv}{2} \end{bmatrix}. $$

Similarly, the velocity waves are obtained as

$$ \begin{bmatrix} v^{+} \\ v^{-} \end{bmatrix} = \begin{bmatrix} 1/(2R) & 1/2 \\ -1/(2R) & 1/2 \end{bmatrix} \begin{bmatrix} f \\ v \end{bmatrix} = \begin{bmatrix} \frac{v+f/R}{2} \\ \frac{v-f/R}{2} \end{bmatrix}. $$
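The conversions above amount to a few multiply-adds per sample. A minimal sketch with a hypothetical wave impedance and physical state: it converts an $(f,v)$ pair to traveling components, rebuilds $(f,v)$ from either set of components, and checks the Ohm's-law relations $f^{+}=Rv^{+}$, $f^{-}=-Rv^{-}$.

```python
def traveling_from_physical(f, v, R):
    """(f, v) -> force waves (f+, f-) and velocity waves (v+, v-)."""
    f_plus, f_minus = (f + R * v) / 2.0, (f - R * v) / 2.0
    v_plus, v_minus = (v + f / R) / 2.0, (v - f / R) / 2.0
    return f_plus, f_minus, v_plus, v_minus


def physical_from_force_waves(f_plus, f_minus, R):
    """Matrix [[1, 1], [1/R, -1/R]] applied to (f+, f-)."""
    return f_plus + f_minus, (f_plus - f_minus) / R


def physical_from_velocity_waves(v_plus, v_minus, R):
    """Matrix [[R, -R], [1, 1]] applied to (v+, v-)."""
    return R * (v_plus - v_minus), v_plus + v_minus


R = 0.6                       # hypothetical wave impedance
f, v = 1.5, -0.4              # hypothetical physical force and velocity at one point
fp, fm, vp, vm = traveling_from_physical(f, v, R)
print(physical_from_force_waves(fp, fm, R))      # recovers (f, v)
print(physical_from_velocity_waves(vp, vm, R))   # recovers (f, v)
print(fp - R * vp, fm + R * vm)                  # Ohm's law: both (numerically) zero
```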

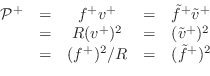

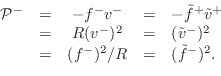

Power Waves

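Basic courses in physics teach us that power is work per unit time, and work is a measure of energy which is typically defined as force times distance. Therefore, power is in physical units of force times distance per unit time, or force times velocity. It therefore should come as no surprise that traveling power waves are defined for strings as follows:

$$ \mathcal{P}^{+}(n) \triangleq f^{+}(n)\,v^{+}(n), \qquad \mathcal{P}^{-}(n) \triangleq -f^{-}(n)\,v^{-}(n). $$

From the Ohm's-law relations $f^{+}=Rv^{+}$ and $f^{-}=-Rv^{-}$, we also have

$$ \mathcal{P}^{+}(n) = R\,[v^{+}(n)]^2 = \frac{[f^{+}(n)]^2}{R}, \qquad \mathcal{P}^{-}(n) = R\,[v^{-}(n)]^2 = \frac{[f^{-}(n)]^2}{R}. $$

Thus, both the left- and right-going components are nonnegative. The sum of the traveling powers at a point gives the total power at that point in the waveguide:

$$ \mathcal{P}(t_n,x_m) \triangleq \mathcal{P}^{+}(n-m) + \mathcal{P}^{-}(n+m). \qquad \text{(C.49)} $$

If we had left out the minus sign in the definition of left-going power waves, the sum would instead be a net power flow.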
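A quick numerical check of these definitions, assuming a hypothetical wave impedance: both traveling powers come out nonnegative, and the product $f\,v$ of physical force and velocity equals the net power flow $\mathcal{P}^{+}-\mathcal{P}^{-}$ rather than the total power $\mathcal{P}^{+}+\mathcal{P}^{-}$.

```python
import random


def power_waves(v_plus, v_minus, R):
    """Return (P+, P-, f*v) for traveling velocity waves on a string of impedance R."""
    f_plus, f_minus = R * v_plus, -R * v_minus     # Ohm's-law relations
    P_plus = f_plus * v_plus                       # = R (v+)^2 >= 0
    P_minus = -f_minus * v_minus                   # = R (v-)^2 >= 0
    f, v = f_plus + f_minus, v_plus + v_minus      # physical force and velocity
    return P_plus, P_minus, f * v


R = 0.6                                            # hypothetical wave impedance
rng = random.Random(2)
for _ in range(3):
    P_plus, P_minus, net = power_waves(rng.uniform(-1, 1), rng.uniform(-1, 1), R)
    print(f"P+={P_plus:.4f}  P-={P_minus:.4f}  total={P_plus + P_minus:.4f}  "
          f"f*v={net:.4f}  P+ - P-={P_plus - P_minus:.4f}")
```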

Power waves are important because they correspond to the actual ability of the wave to do work on the outside world, such as on a violin bridge at the end of a string. Because energy is conserved in closed systems, power waves sometimes give a simpler, more fundamental view of wave phenomena, such as in conical acoustic tubes. Also, implementing nonlinear operations such as rounding and saturation so that they never increase signal power suppresses limit cycles and overflow oscillations [432], as discussed in the section on signal scattering.

For example, consider a waveguide having a wave impedance which increases smoothly to the right. A converging cone provides a practical example in the acoustic tube realm. Then since the energy in a traveling wave must be in the wave unless it has been transduced elsewhere, we expect $\mathcal{P}^{+} = f^{+}v^{+}$ to propagate unchanged along the waveguide. However, since the wave impedance is increasing, it must be true that force is increasing and velocity is decreasing according to $\mathcal{P}^{+} = (f^{+})^2/R = R\,(v^{+})^2$. Looking only at force or velocity might give us the mistaken impression that the wave is getting stronger (looking at force) or weaker (looking at velocity), when really it was simply sailing along as a fixed amount of energy. This is an example of a transformer action in which force is converted into velocity or vice versa. It is well known that a conical tube acts as if it's open on both ends even though we can plainly see that it is closed on one end. A tempting explanation is that the cone acts as a transformer which exchanges pressure and velocity between the endpoints of the tube, so that a closed end on one side is equivalent to an open end on the other. However, this view is oversimplified because, while spherical pressure waves travel nondispersively in cones, velocity propagation is dispersive [22,50].

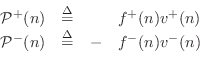

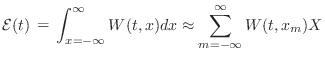

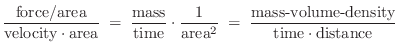

Energy Density Waves

The vibrational energy per unit length along the string, or wave energy density [317] is given by the sum of potential and kinetic energy densities:

$$ W(t,x) \triangleq \frac{1}{2}K\,[y'(t,x)]^2 + \frac{1}{2}\epsilon\,[\dot y(t,x)]^2. \qquad \text{(C.50)} $$

Sampling across time and space, and substituting traveling wave components, one can show in a few lines of algebra that the sampled wave energy density is given by

$$ W(t_n,x_m) = W^{+}(n-m) + W^{-}(n+m), \qquad \text{(C.51)} $$

where

$$
\begin{aligned}
W^{+}(n) &= \frac{\mathcal{P}^{+}(n)}{c} = \epsilon\,[v^{+}(n)]^2 = \frac{[f^{+}(n)]^2}{K},\\
W^{-}(n) &= \frac{\mathcal{P}^{-}(n)}{c} = \epsilon\,[v^{-}(n)]^2 = \frac{[f^{-}(n)]^2}{K}.
\end{aligned}
$$

Thus, traveling power waves (energy per unit time)

can be converted to energy density waves (energy per unit length) by

simply dividing by ![]() , the speed of propagation. Quite naturally, the

total wave energy in the string

is given by the integral along the string of the energy density:

, the speed of propagation. Quite naturally, the

total wave energy in the string

is given by the integral along the string of the energy density:

|

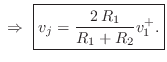

(C.52) |

In practice, of course, the string length is finite, and the limits of integration are from the
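The ``few lines of algebra'' behind the traveling energy densities can be spot-checked numerically. Assuming hypothetical values of $K$ and $\epsilon$ (so that $c=\sqrt{K/\epsilon}$ and $R=K/c$), the sketch evaluates $W=\frac{1}{2}K y'^2 + \frac{1}{2}\epsilon\dot y^2$ from random traveling velocity waves, using $y'=-v^{+}/c+v^{-}/c$ and $\dot y = v^{+}+v^{-}$, and compares it with $W^{+}+W^{-}$, where $W^{\pm}=\mathcal{P}^{\pm}/c$.

```python
import math
import random

K, EPS = 100.0, 0.0036        # hypothetical tension [N] and linear mass density [kg/m]
c = math.sqrt(K / EPS)        # wave speed
R = K / c                     # wave impedance


def energy_density(v_plus, v_minus):
    """W = (1/2) K y'^2 + (1/2) eps ydot^2, evaluated from traveling velocity waves."""
    slope = -v_plus / c + v_minus / c        # y' = y'^+ + y'^-
    velocity = v_plus + v_minus              # ydot = v^+ + v^-
    return 0.5 * K * slope ** 2 + 0.5 * EPS * velocity ** 2


def traveling_energy_densities(v_plus, v_minus):
    """W^+ = P^+/c and W^- = P^-/c, with P^+ = R (v^+)^2 and P^- = R (v^-)^2."""
    return R * v_plus ** 2 / c, R * v_minus ** 2 / c


rng = random.Random(5)
for _ in range(3):
    vp, vm = rng.uniform(-1, 1), rng.uniform(-1, 1)
    Wp, Wm = traveling_energy_densities(vp, vm)
    print(f"W={energy_density(vp, vm):.6e}  W+ + W-={Wp + Wm:.6e}")
```

The cross terms $v^{+}v^{-}$ cancel between the potential and kinetic parts because $K/c^2=\epsilon$, which is why the energy density splits cleanly into right- and left-going parts.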

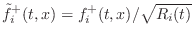

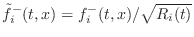

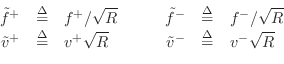

Root-Power Waves

It is sometimes helpful to normalize the wave variables so that signal power is uniformly distributed numerically. This can be especially helpful in fixed-point implementations.

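From (C.49), it is clear that power normalization is given by

$$ \tilde f^{+} \triangleq \frac{f^{+}}{\sqrt{R}},\qquad \tilde v^{+} \triangleq v^{+}\sqrt{R},\qquad \tilde f^{-} \triangleq \frac{f^{-}}{\sqrt{R}},\qquad \tilde v^{-} \triangleq v^{-}\sqrt{R}, $$

where we have dropped the common time argument `$(n)$' for simplicity. As a result, we obtain

$$ \mathcal{P}^{+} = f^{+}v^{+} = \tilde f^{+}\tilde v^{+} = (\tilde f^{+})^2 = (\tilde v^{+})^2 $$

and

$$ \mathcal{P}^{-} = -f^{-}v^{-} = -\tilde f^{-}\tilde v^{-} = (\tilde f^{-})^2 = (\tilde v^{-})^2. $$

The normalized wave variables $\tilde f^{\pm}$ and $\tilde v^{\pm}$ behave physically like force and velocity waves, respectively, but they are scaled so that either one can be squared to obtain instantaneous signal power.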
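The normalization is just one scaling per component. In this sketch, with a hypothetical wave impedance and velocity waves, the normalized force and velocity waves coincide (up to sign for the left-going pair), and either one squared gives the traveling power. Both normalized variables therefore occupy the same numerical range for a given power, which is the property that helps in fixed-point arithmetic.

```python
import math


def root_power_waves(f_plus, v_plus, f_minus, v_minus, R):
    """Normalize traveling waves: f~ = f / sqrt(R), v~ = v * sqrt(R)."""
    s = math.sqrt(R)
    return f_plus / s, v_plus * s, f_minus / s, v_minus * s


R = 0.6                                      # hypothetical wave impedance
v_plus, v_minus = 0.7, -0.2                  # hypothetical velocity waves
f_plus, f_minus = R * v_plus, -R * v_minus   # Ohm's-law relations
fp, vp, fm, vm = root_power_waves(f_plus, v_plus, f_minus, v_minus, R)
print(fp, vp, fp ** 2, f_plus * v_plus)       # f~+ = v~+, and its square is P+
print(fm, -vm, fm ** 2, -f_minus * v_minus)   # f~- = -v~-, and its square is P-
```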

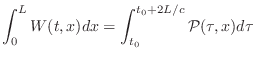

Total Energy in a Rigidly Terminated String

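The total energy $\mathcal{E}$ in a length $L$, rigidly terminated, freely vibrating string can be computed as

$$
\begin{aligned}
\mathcal{E}(t) &\triangleq \int_0^{L} W(t,x)\,dx && \text{(C.54)}\\
&= \int_{t_0}^{t_0+2L/c} \mathcal{P}^{+}(\tau,x)\,d\tau && \text{(C.55)}
\end{aligned}
$$

for any $t_0$ and any fixed position $x$ along the string, where $\mathcal{P}^{+}(\tau,x)$ denotes the right-going power at position $x$ and time $\tau$. (With rigid terminations, free vibration is periodic with period $2L/c$, and every part of the circulating wave passes any given point once per period.)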
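A discrete-time version of this identity is easy to check. The sketch below assumes a digital waveguide of $N$ samples per rail with inverting (rigid) reflections of velocity waves at both ends, a unit sampling interval, and energy measured as $\sum R\,v^2$ over all stored samples (power times one sample). The stored energy stays constant, and the right-going power summed at one point over one period of $2N$ samples equals it, since every stored sample passes that point exactly once per period.

```python
import random


def rigid_step(right, left):
    """Advance a waveguide with rigid (velocity-inverting) terminations by one sample.

    right[m]: right-going velocity wave at position m (moves toward larger m)
    left[m]:  left-going velocity wave at position m (moves toward smaller m)
    """
    new_right = [-left[0]] + right[:-1]     # reflection at the left end
    new_left = left[1:] + [-right[-1]]      # reflection at the right end
    return new_right, new_left


def energy_check(N=8, R=1.0, m0=3, seed=3):
    """Return (stored energy, right-going energy passing m0 in one period, final energy)."""
    rng = random.Random(seed)
    right = [rng.uniform(-1.0, 1.0) for _ in range(N)]
    left = [rng.uniform(-1.0, 1.0) for _ in range(N)]
    stored = R * sum(x * x for x in right + left)   # sum of R v^2 over all samples
    passed = 0.0
    for _ in range(2 * N):                          # one period is 2N samples
        passed += R * right[m0] ** 2                # right-going power at m0 (T = 1)
        right, left = rigid_step(right, left)
    final = R * sum(x * x for x in right + left)
    return stored, passed, final


print(energy_check())
```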

Scattering at Impedance Changes

When a traveling wave encounters a change in wave impedance, scattering occurs, i.e., a traveling wave impinging on an impedance discontinuity will partially reflect and partially transmit at the junction in such a way that energy is conserved. This is a classical topic in transmission line theory [295], and it is well covered for acoustic tubes in a variety of references [297,363]. However, for completeness, we derive the basic scattering relations below for plane waves in air, and for longitudinal stress waves in rods.

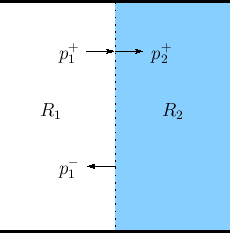

Plane-Wave Scattering

Consider a plane wave with peak pressure amplitude $p^{+}_1$ propagating from wave impedance $R_1$ into a new wave impedance $R_2$, as shown in Fig.C.15. (Assume $R_1$ and $R_2$ are real and positive.) The physical constraints on the wave are that

- pressure must be continuous everywhere, and

- velocity in must equal velocity out (the junction has no state).

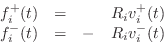

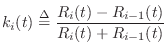

As derived in §C.7.3, we also have the Ohm's law relations:

$$ p^{+}_i = R_i\,v^{+}_i, \qquad p^{-}_i = -R_i\,v^{-}_i, \qquad i=1,2. $$

To obey the physical constraints at the impedance discontinuity, the incident plane wave must split into a reflected plane wave $p^{-}_1$ and a transmitted plane wave $p^{+}_2$ such that pressure is continuous and signal power is conserved. The physical pressure on the left of the junction is $p_1 = p^{+}_1 + p^{-}_1$, and the physical pressure on the right of the junction is $p_2 = p^{+}_2 + p^{-}_2 = p^{+}_2$, since $p^{-}_2 = 0$ according to our set-up.

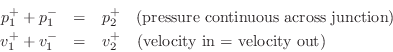

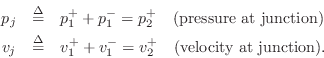

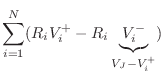

Scattering Solution

Define the junction pressure $p_j$ and junction velocity $v_j$ by

$$ p_j \triangleq p^{+}_1 + p^{-}_1 = p^{+}_2, \qquad v_j \triangleq v^{+}_1 + v^{-}_1 = v^{+}_2. $$

Then we can write

$$
\begin{aligned}
p^{+}_1 + p^{-}_1 &= p^{+}_2 = p_j\\
\Rightarrow\; R_1 v^{+}_1 - R_1 v^{-}_1 &= R_2 v^{+}_2 = R_2 v_j\\
\Rightarrow\; R_1 v^{+}_1 - R_1\,(v_j - v^{+}_1) &= R_2 v_j\\
\Rightarrow\; 2R_1 v^{+}_1 - R_1 v_j &= R_2 v_j,
\end{aligned}
$$

so that the transmitted velocity wave is

$$ v^{+}_2 = v_j = \frac{2R_1}{R_1+R_2}\,v^{+}_1, $$

and the reflected velocity wave is

$$ v^{-}_1 = v_j - v^{+}_1 = \left[\frac{2R_1}{R_1+R_2} - 1\right]v^{+}_1 = \frac{R_1-R_2}{R_1+R_2}\,v^{+}_1. $$

Using the Ohm's law relations, the pressure waves follow easily:

$$
\begin{aligned}
p^{+}_2 &= R_2 v^{+}_2 = R_2 v_j = \frac{2R_2}{R_1+R_2}\,p^{+}_1\\
p^{-}_1 &= -R_1 v^{-}_1 = \frac{R_2-R_1}{R_1+R_2}\,p^{+}_1
\end{aligned}
$$

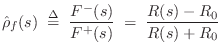

Reflection Coefficient

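Define the reflection coefficient of the scattering junction as

$$ \rho \triangleq \frac{R_2-R_1}{R_2+R_1}. $$

Then we have the following scattering relations for pressure:

$$
\begin{aligned}
p^{+}_2 &= (1+\rho)\,p^{+}_1\\
p^{-}_1 &= \rho\,p^{+}_1
\end{aligned}
$$

and, for velocity, $v^{+}_2 = (1-\rho)\,v^{+}_1$ and $v^{-}_1 = -\rho\,v^{+}_1$.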

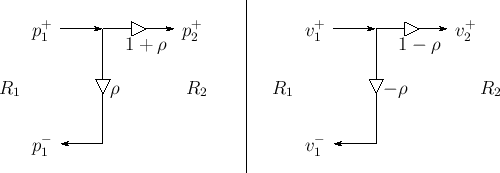

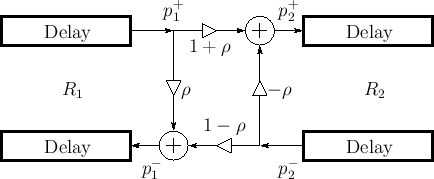

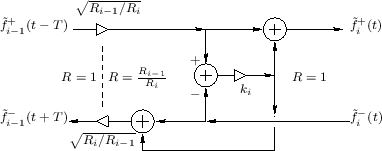

Signal flow graphs for pressure and velocity are given in Fig.C.16.

It is a simple exercise to verify that signal power is conserved by checking that $p^{+}_1 v^{+}_1 - (-p^{-}_1 v^{-}_1) = p^{+}_2 v^{+}_2$. (Left-going power is negated to account for its opposite direction of travel.)

So far we have only considered a plane wave incident on the left of the junction. Consider now a plane wave incident from the right. For that wave, the impedance steps from $R_2$ to $R_1$, so the reflection coefficient it ``sees'' is $-\rho$. By superposition, the signal flow graph for plane waves incident from either side is given by Fig.C.17. Note that the transmission coefficient is one plus the reflection coefficient in either direction. This signal flow graph is often called the ``Kelly-Lochbaum'' scattering junction [297].
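A Kelly-Lochbaum junction for pressure waves takes only a few lines. The sketch below uses reflection coefficient $\rho=(R_2-R_1)/(R_2+R_1)$ for waves arriving from the left, $-\rho$ for waves arriving from the right, and transmission coefficients $1+\rho$ and $1-\rho$, respectively. It also checks power conservation using $\mathcal{P}=p^2/R$ for each traveling wave. Function names and impedance values are illustrative.

```python
def kelly_lochbaum(p1_plus, p2_minus, R1, R2):
    """Pressure-wave scattering junction between impedance R1 (left) and R2 (right).

    p1_plus:  wave arriving from the left (right-going in section 1)
    p2_minus: wave arriving from the right (left-going in section 2)
    Returns (p1_minus, p2_plus), the waves leaving the junction.
    """
    rho = (R2 - R1) / (R2 + R1)                          # reflection coefficient seen from the left
    p1_minus = rho * p1_plus + (1.0 - rho) * p2_minus    # reflected + transmitted from the right
    p2_plus = (1.0 + rho) * p1_plus - rho * p2_minus     # transmitted from the left + reflected
    return p1_minus, p2_plus


def power_balance(p1_plus, p2_minus, R1, R2):
    """Incoming minus outgoing power, using P = p^2 / R for each traveling wave."""
    p1_minus, p2_plus = kelly_lochbaum(p1_plus, p2_minus, R1, R2)
    incoming = p1_plus ** 2 / R1 + p2_minus ** 2 / R2
    outgoing = p1_minus ** 2 / R1 + p2_plus ** 2 / R2
    return incoming - outgoing


print(kelly_lochbaum(1.0, 0.0, 1.0, 3.0))    # (0.5, 1.5): rho = 0.5
print(power_balance(1.0, 0.0, 1.0, 3.0))     # zero up to rounding
```

Both outgoing waves share the junction pressure $p_j$, so a single multiply by $\rho$ applied to $p^{+}_1 - p^{-}_2$ is enough in an optimized version; the four-multiply form above simply mirrors the signal flow graph.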


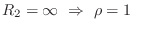

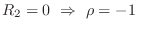

There are some simple special cases:

- $R_2=\infty \;\Rightarrow\; \rho=1$ (e.g., rigid wall reflection)

- $R_2=0 \;\Rightarrow\; \rho=-1$ (e.g., open-ended tube)

- $R_2=R_1 \;\Rightarrow\; \rho=0$ (no reflection)

Plane-Wave Scattering at an Angle

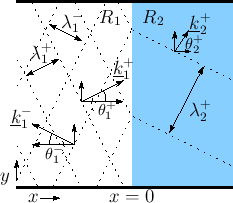

Figure C.18 shows the more general situation (as compared to Fig.C.15) of a sinusoidal traveling plane wave encountering an impedance discontinuity at some arbitrary angle of incidence, as indicated by the vector wavenumber $\underline{k}_1$. The mathematical details of general sinusoidal plane waves in air and vector wavenumber are reviewed in §B.8.1.

At the boundary between impedance $R_1$ and $R_2$, we have, by continuity of pressure,

$$ k_1\sin(\theta_1) = k_1\sin(\theta_1^{-}) = k_2\sin(\theta_2), \qquad \text{(C.56)} $$

as we will now derive.

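Let the impedance change be in the $x=0$ plane. Thus, the impedance is $R_1$ for $x<0$ and $R_2$ for $x>0$. There are three plane waves to consider:

- The incident plane wave with wave vector $\underline{k}_1$

- The reflected plane wave with wave vector $\underline{k}_1^{-}$

- The transmitted plane wave with wave vector $\underline{k}_2$

where $k_1 \triangleq |\underline{k}_1| = |\underline{k}_1^{-}|$ and $k_2 \triangleq |\underline{k}_2|$ are the wavenumbers in the two media, and $\theta_1$, $\theta_1^{-}$, and $\theta_2$ denote the angles the three wave vectors make with the normal to the boundary (the $x$ axis), taking all three waves to lie in the $x$-$y$ plane. Since pressure must be continuous across the whole plane $x=0$ at all times, all three waves must have the same wavenumber component $k_y$ along the boundary, i.e., $k_y = k_1\sin(\theta_1) = k_1\sin(\theta_1^{-}) = k_2\sin(\theta_2)$.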

Reflection and Refraction

The first equality in Eq.(C.56) implies that the angle of incidence equals the angle of reflection: $\theta_1^{-} = \theta_1$.

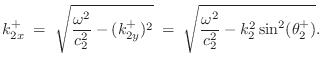

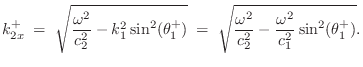

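We now wish to find the wavenumber in medium 2. Let $c_i$ denote the phase velocity in the medium with wave impedance $R_i$:

$$c_i = \frac{\omega}{k_i}, \qquad i=1,2.$$

Equating the tangential components $k_1\sin(\theta_1)$ and $k_2\sin(\theta_2)$ then gives Snell's law,

$$\sin(\theta_2) = \frac{k_1}{k_2}\sin(\theta_1) = \frac{c_2}{c_1}\sin(\theta_1),$$

and the horizontal ($x$) component of the transmitted wavenumber is

$$k_{2x} = \sqrt{k_2^2 - k_1^2\sin^2(\theta_1)} = k_2\sqrt{1-\left(\frac{c_2}{c_1}\right)^2\sin^2(\theta_1)}.$$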
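The refraction computation can be sketched in Python as follows. The sketch assumes that $\theta_1$ is measured from the boundary normal (the $x$ axis), that the tangential wavenumber component is continuous across the boundary, and that $k_i = \omega/c_i$. The function name `transmitted_wavevector` and the air/water sound speeds are illustrative choices, not from the text.

```python
import math

def transmitted_wavevector(omega, c1, c2, theta1):
    """Return (k2x, k2y) for the plane wave transmitted into medium 2.

    theta1 is the angle of incidence measured from the boundary normal (x axis).
    k2x is returned as a complex number; it is purely imaginary (evanescent
    case) when (c2/c1)*sin(theta1) > 1.
    """
    k1 = omega / c1                 # wavenumber magnitude in medium 1
    k2 = omega / c2                 # wavenumber magnitude in medium 2
    k2y = k1 * math.sin(theta1)     # tangential component matches across the boundary
    d = k2 * k2 - k2y * k2y
    if d >= 0.0:
        k2x = complex(math.sqrt(d), 0.0)   # ordinary refraction
    else:
        # Evanescent case: choose k2x = -j*kx (kx > 0) so that the field
        # Re{exp(j(omega t - k2x x - k2y y))} decays as x increases.
        k2x = complex(0.0, -math.sqrt(-d))
    return k2x, k2y

omega = 2.0 * math.pi * 1000.0      # 1 kHz
c_air, c_water = 343.0, 1480.0      # approximate sound speeds in m/s
for deg in (10.0, 30.0):
    k2x, _ = transmitted_wavevector(omega, c_air, c_water, math.radians(deg))
    print(deg, "evanescent" if k2x.real == 0.0 else "propagating")
# prints "10.0 propagating", then "30.0 evanescent"
```

The square-root branch is chosen by hand in the evanescent case. The principal branch of a complex square root would give $+j$ times a positive number, which would make the field grow with $x$ under the $e^{j(\omega t - \underline{k}^T\underline{x})}$ convention.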

Evanescent Wave due to Total Internal Reflection

Note that if $(c_2/c_1)\sin(\theta_1) > 1$, the horizontal component of the wavenumber in medium 2 becomes imaginary. In this case, the wave in medium 2 is said to be evanescent, and the wave in medium 1 undergoes total internal reflection (no power travels from medium 1 to medium 2). The evanescent-wave amplitude decays exponentially to the right and oscillates ``in place'' (like a standing wave). ``Tunneling'' is possible given a medium 3 beyond medium 2 in which wave propagation resumes.

To show explicitly the exponential decay and in-place oscillation in an evanescent wave, express the imaginary horizontal wavenumber as $k_{2x} = -jk_x$ with $k_x>0$, and write $k_y$ for the real vertical component, so that $\underline{k}_2 = (-jk_x,\,k_y)$. Writing the plane wave as the real part of a complex exponential, we then have

$$\begin{aligned}
p(t,\underline{x}) &= \mathrm{re}\left\{e^{j(\omega t - \underline{k}_2^T\underline{x})}\right\}
= \mathrm{re}\left\{e^{j(\omega t - k_y y)}\,e^{-k_x x}\right\}\\
&= e^{-k_x x}\cos(\omega t - k_y y).
\end{aligned}$$

Thus, an imaginary wavenumber corresponds to an exponentially decaying evanescent wave. Note that the time dependence (cosine term) applies to all points to the right of the boundary. Since evanescent waves do not really ``propagate,'' it is perhaps better to speak of an ``evanescent acoustic field'' or ``evanescent standing wave'' instead of ``evanescent waves''.
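The following Python sketch numerically checks the identity above. It evaluates the real part of the complex plane wave with an imaginary horizontal wavenumber $-jk_x$ and compares it with the closed form $e^{-k_x x}\cos(\omega t - k_y y)$. The helper names and parameter values are arbitrary illustrations.

```python
import cmath
import math

def plane_wave(t, x, y, omega, kx, ky):
    """Re{exp(j(omega t - k^T x))} for a (possibly complex) 2D wave vector (kx, ky)."""
    return cmath.exp(1j * (omega * t - kx * x - ky * y)).real

def evanescent(t, x, y, omega, kx, ky):
    """Closed form e^{-kx x} cos(omega t - ky y), with kx > 0 the decay rate."""
    return math.exp(-kx * x) * math.cos(omega * t - ky * y)

omega, kx, ky = 2.0, 1.5, 0.7       # arbitrary test values
for t, x, y in [(0.0, 0.0, 0.0), (0.3, 1.0, 2.0), (1.1, 2.5, -0.4)]:
    a = plane_wave(t, x, y, omega, -1j * kx, ky)  # imaginary horizontal wavenumber
    b = evanescent(t, x, y, omega, kx, ky)
    print(abs(a - b) < 1e-12)       # True: both expressions agree
```

At every point, the time dependence is the same cosine in $\omega t - k_y y$. Only its amplitude $e^{-k_x x}$ changes with distance from the boundary.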

For more on the physics of evanescent waves and tunneling, see [295].

Longitudinal Waves in Rods

In this section, elementary scattering relations will be derived for the case of longitudinal force and velocity waves in an ideal string or rod. In solids, force-density waves are referred to as stress waves [169,261]. Longitudinal stress waves in strings and rods have units of (compressive) force per unit area and are analogous to longitudinal pressure waves in acoustic tubes.

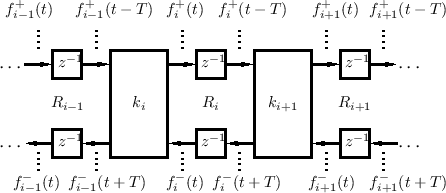

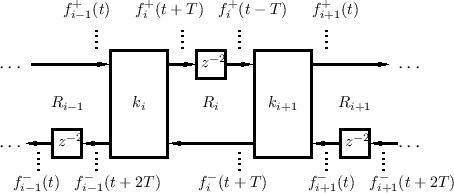

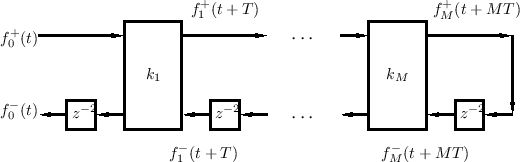

![Waveguide section between two partial sections](http://www.dsprelated.com/josimages_new/pasp/img3577.png)

A single waveguide section between two partial sections is shown in Fig.C.19. The sections are numbered 0 through 2 from left to right, and their wave impedances are $R_0$, $R_1$, and $R_2$, respectively. Such a rod might be constructed, for example, using three different materials having three different densities. In the $i$th section, there are two stress traveling waves: $f_i^+$ traveling to the right at speed $c$, and $f_i^-$ traveling to the left at speed $c$. To minimize the numerical dynamic range, velocity waves may be chosen instead when $R_i > 1$.

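As in the case of transverse waves (see the derivation of (C.46)), the traveling longitudinal plane waves in each section satisfy [169,261]

$$f_i^+ = R_i v_i^+, \qquad f_i^- = -R_i v_i^-$$

where the wave impedance is now

$$R_i = \sqrt{E_i\rho_i},$$

with $\rho_i$ the mass density and $E_i$ the Young's modulus of the material in section $i$.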

If the wave impedance $R_i$ is constant, the shape of a traveling wave is not altered as it propagates from one end of a rod section to the other. In this case we need only consider $f_i^+$ and $f_i^-$ at one end of each section as a function of time. As shown in Fig.C.19, we define $f_i^\pm(t)$ as the force-wave component at the extreme left of section $i$. Therefore, at the extreme right of section $i$, we have the traveling waves $f_i^+(t-T)$ and $f_i^-(t+T)$, where $T$ is the travel time from one end of a section to the other.

For generality, we may allow the wave impedances $R_i$ to vary with time. A number of possibilities exist which satisfy (C.57) in the time-varying case. For the moment, we will assume the traveling waves at the extreme right of section $i$ are still given by $f_i^+(t-T)$ and $f_i^-(t+T)$. This definition, however, implies the velocity varies inversely with the wave impedance. As a result, signal energy, being the product of force times velocity, is ``pumped'' into or out of the waveguide by a changing wave impedance. Use of normalized waves $\tilde{f}_i^\pm = f_i^\pm/\sqrt{R_i}$ avoids this. However, normalization increases the required number of multiplications, as we will see in §C.8.6 below.
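The following Python sketch shows why a changing impedance pumps energy and how normalization avoids it. The power carried by a traveling force wave on impedance $R$ is $f^+v^+ = (f^+)^2/R$. If $f^+$ is held fixed while $R$ changes, that power changes. The squared normalized wave always equals the power. The sketch assumes the normalization $\tilde f^\pm = f^\pm/\sqrt{R}$; the function names are hypothetical.

```python
import math

def wave_powers(f_plus, f_minus, R):
    """Power carried by each traveling force wave on impedance R.

    Uses v+ = f+/R and v- = -f-/R, so the right-going power is f+ v+ = f+^2/R
    and the left-going power is -f- v- = f-^2/R.
    """
    v_plus, v_minus = f_plus / R, -f_minus / R
    return f_plus * v_plus, -f_minus * v_minus

def normalize(f_plus, f_minus, R):
    """Normalized force waves f~ = f / sqrt(R) (the normalization assumed here)."""
    s = math.sqrt(R)
    return f_plus / s, f_minus / s

# Holding the force waves fixed while R changes alters the power they carry;
# the squared normalized waves track that power exactly.
f_plus, f_minus = 1.0, 0.5
for R in (1.0, 2.0, 4.0):
    p_right, p_left = wave_powers(f_plus, f_minus, R)
    nf_plus, nf_minus = normalize(f_plus, f_minus, R)
    print(R, p_right, nf_plus ** 2, p_left, nf_minus ** 2)
```

Each printed row pairs a power with the squared normalized wave on the same side. The two agree up to rounding, which is why propagating normalized waves through a time-varying impedance does not inject or remove energy.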

As before, the physical force density (stress) and velocity at the left end of section $i$ are obtained by summing the left- and right-going traveling wave components:

$$f_i(t) = f_i^+(t) + f_i^-(t), \qquad v_i(t) = v_i^+(t) + v_i^-(t).$$
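Combined with $f^+ = Rv^+$ and $f^- = -Rv^-$, this summation can be inverted to split a physical (force, velocity) pair back into traveling components. The following Python sketch shows both directions; the function names are hypothetical.

```python
def physical_from_waves(f_plus, f_minus, R):
    """Stress and velocity at a point from its traveling-wave components.

    Assumes f+ = R v+ and f- = -R v-, so v = v+ + v- = (f+ - f-)/R.
    """
    force = f_plus + f_minus
    velocity = (f_plus - f_minus) / R
    return force, velocity

def waves_from_physical(force, velocity, R):
    """Inverse mapping: split (force, velocity) into (f+, f-)."""
    f_plus = 0.5 * (force + R * velocity)
    f_minus = 0.5 * (force - R * velocity)
    return f_plus, f_minus

print(physical_from_waves(3.0, 1.0, 2.0))   # (4.0, 1.0)
print(waves_from_physical(4.0, 1.0, 2.0))   # (3.0, 1.0)
```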

Let

Kelly-Lochbaum Scattering Junctions

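Conservation of energy and mass dictate that, at the impedance discontinuity, force and velocity variables must be continuous:

$$\begin{aligned}
f_{i-1}^+(t-T) + f_{i-1}^-(t+T) &= f_i^+(t) + f_i^-(t)\\
v_{i-1}^+(t-T) + v_{i-1}^-(t+T) &= v_i^+(t) + v_i^-(t)
\end{aligned}$$

where velocity is defined as positive to the right on both sides of the junction. Force (or stress or pressure) is a scalar, while velocity is a vector with both a magnitude and a direction (in this case only left or right). Equations (C.57), (C.58), and (C.59) imply the following scattering equations (a derivation is given in the next section for the more general case of $N$ waveguides meeting at a junction):

$$\begin{aligned}
f_i^+(t) &= [1+k_i(t)]\,f_{i-1}^+(t-T) - k_i(t)\,f_i^-(t)\\
f_{i-1}^-(t+T) &= k_i(t)\,f_{i-1}^+(t-T) + [1-k_i(t)]\,f_i^-(t)
\end{aligned}$$

where

$$k_i(t) = \frac{R_i(t) - R_{i-1}(t)}{R_i(t) + R_{i-1}(t)}$$

is called the $i$th reflection coefficient.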

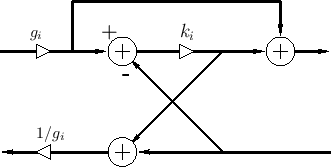

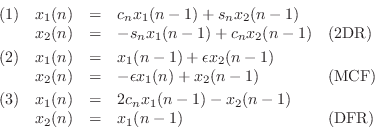

The scattering equations are illustrated in Figs. C.19b and C.20. In linear predictive coding of speech [482], this structure is called the Kelly-Lochbaum scattering junction, and it is one of several types of scattering junction used to implement lattice and ladder digital filter structures (§C.9.4,[297]).
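The following minimal Python sketch implements the force-wave Kelly-Lochbaum junction, assuming $k_i = (R_i - R_{i-1})/(R_i + R_{i-1})$. It then checks that the outgoing waves satisfy continuity of force and velocity at the junction. The function names and test values are illustrative.

```python
import math

def reflection_coefficient(R_left, R_right):
    """k = (R_i - R_{i-1}) / (R_i + R_{i-1}) for force waves."""
    return (R_right - R_left) / (R_right + R_left)

def kelly_lochbaum(a, b, k):
    """Kelly-Lochbaum junction for force waves.

    a: wave arriving from the left,  f+_{i-1}(t-T)
    b: wave arriving from the right, f-_i(t)
    Returns (f+_i(t), f-_{i-1}(t+T)).
    """
    out_right = (1.0 + k) * a - k * b
    out_left = k * a + (1.0 - k) * b
    return out_right, out_left

# Check continuity of force and velocity at the junction.
R_left, R_right = 1.0, 3.0
k = reflection_coefficient(R_left, R_right)      # 0.5
a, b = 1.0, 0.2
x, y = kelly_lochbaum(a, b, k)                    # approximately (1.4, 0.6)
force_ok = math.isclose(a + y, x + b)
velocity_ok = math.isclose((a - y) / R_left, (x - b) / R_right)
print(force_ok, velocity_ok)                      # True True
```

Velocities are recovered as $(f^+ - f^-)/R$ on each side, consistent with $f^- = -Rv^-$.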

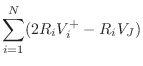

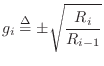

One-Multiply Scattering Junctions

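By factoring out $k_i(t)$ in each equation of (C.60), we can write

$$\begin{aligned}
f_i^+(t) &= f_{i-1}^+(t-T) + f_\Delta(t)\\
f_{i-1}^-(t+T) &= f_i^-(t) + f_\Delta(t)
\end{aligned}$$

where

$$f_\Delta(t) = k_i(t)\left[f_{i-1}^+(t-T) - f_i^-(t)\right].$$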

Thus, only one multiplication is actually necessary to compute the transmitted and reflected waves from the incoming waves in the Kelly-Lochbaum junction. This computation is shown in Fig.C.21, and it is known as the one-multiply scattering junction [297].
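The following Python sketch compares the one-multiply organization against the four-multiply Kelly-Lochbaum form on random inputs. It assumes the same argument convention as the junction equations: `a` is the wave arriving from the left and `b` the wave arriving from the right. The function names are hypothetical.

```python
import random

def one_multiply(a, b, k):
    """One-multiply junction: the single product f_delta feeds both outputs.

    a = f+_{i-1}(t-T), b = f-_i(t); returns (f+_i(t), f-_{i-1}(t+T)).
    """
    f_delta = k * (a - b)            # 1 multiply, 1 add
    return a + f_delta, b + f_delta  # 2 more adds

def kelly_lochbaum(a, b, k):
    """Four-multiply reference form, for comparison only."""
    return (1.0 + k) * a - k * b, k * a + (1.0 - k) * b

random.seed(0)
for _ in range(5):
    a, b, k = (random.uniform(-1.0, 1.0) for _ in range(3))
    x1, y1 = one_multiply(a, b, k)
    x2, y2 = kelly_lochbaum(a, b, k)
    print(abs(x1 - x2) < 1e-12 and abs(y1 - y2) < 1e-12)   # True
```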

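Another one-multiply form is obtained by organizing (C.60) as

$$\begin{aligned}
f_i^+(t) &= f_J(t) - f_i^-(t)\\
f_{i-1}^-(t+T) &= f_J(t) - f_{i-1}^+(t-T)
\end{aligned}$$

where

$$f_J(t) = [1+k_i(t)]\,f_{i-1}^+(t-T) + [1-k_i(t)]\,f_i^-(t)$$

is the physical force at the junction. Since the two coefficients sum to 2, $f_J(t) = 2f_i^-(t) + [1+k_i(t)]\left[f_{i-1}^+(t-T) - f_i^-(t)\right]$, which requires only one multiply.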

As in the previous case, only one multiplication and three additions are required per junction. This one-multiply form generalizes more readily to junctions of more than two waveguides, as we'll see in a later section.
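The following Python sketch implements the junction-force organization for a two-port. It assumes the weights $1+k_i = 2R_i/(R_{i-1}+R_i)$ and $1-k_i$ on the incoming waves. Each outgoing wave is the junction force minus the incoming wave on that side. The function name is hypothetical, and the N-port generalization mentioned above is not shown.

```python
import math

def junction_force_form(a, b, R_left, R_right):
    """Two-port junction organized around the junction force f_J.

    a = f+_{i-1}(t-T) (from the left), b = f-_i(t) (from the right).
    The weights 1 + k = 2 R_right/(R_left + R_right) and 1 - k sum to 2,
    so f_J = 2 b + (1 + k)(a - b) needs a single multiply
    (doubling is a shift in fixed point).
    Returns (f+_i(t), f-_{i-1}(t+T), f_J).
    """
    alpha = 2.0 * R_right / (R_left + R_right)   # 1 + k, precomputed per junction
    f_J = 2.0 * b + alpha * (a - b)
    # Each outgoing wave is the junction force minus the incoming wave on that side.
    return f_J - b, f_J - a, f_J

x, y, f_J = junction_force_form(1.0, 0.2, 1.0, 3.0)
print(math.isclose(x, 1.4), math.isclose(y, 0.6), math.isclose(f_J, 1.6))  # True True True
```

The computed $f_J$ equals incoming plus outgoing force on either side, here $1.0 + 0.6 = 1.4 + 0.2 = 1.6$, which is the continuous physical force at the junction.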

A scattering junction well known in the LPC speech literature but not described here is the so-called two-multiply junction [297] (requiring also two additions). It is omitted because the two-multiply junction is not valid as a general, local, physical modeling building block: its derivation is tied to the reflectively terminated, cascade waveguide chain. In cases where it applies, however, it can be the implementation of choice; for example, in DSP chips having a fast multiply-add instruction, it may be possible to implement the inner loop of the two-multiply, two-add scattering junction using only two instructions.

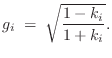

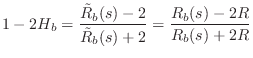

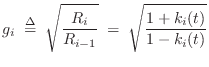

Normalized Scattering Junctions

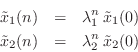

Using (C.53) to convert to normalized waves $\tilde{f}_i^\pm = f_i^\pm/\sqrt{R_i}$, the Kelly-Lochbaum junction (C.60) becomes

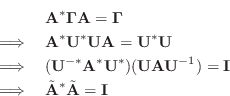

as diagrammed in Fig.C.22. This is called the normalized scattering junction [297], although a more precise term would be the ``normalized-wave scattering junction.''

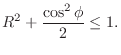

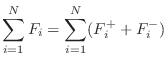

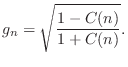

It is interesting to define $k_i = \sin(\theta_i)$, always possible for passive junctions since $-1 \le k_i \le 1$, and note that the normalized scattering junction is equivalent to a 2D rotation:

$$\begin{bmatrix}\tilde{f}_i^+(t)\\ \tilde{f}_{i-1}^-(t+T)\end{bmatrix} = \begin{bmatrix}\cos(\theta_i) & -\sin(\theta_i)\\ \sin(\theta_i) & \cos(\theta_i)\end{bmatrix}\begin{bmatrix}\tilde{f}_{i-1}^+(t-T)\\ \tilde{f}_i^-(t)\end{bmatrix}$$

where, for conciseness of notation, the time-invariant case is written.
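The following Python sketch applies the rotation form of the normalized-wave junction and checks it against the force-wave junction. It assumes $\tilde f = f/\sqrt{R}$ and an example with $R_{i-1}=1$, $R_i=3$ (so $k_i=0.5$). For that example, the force-wave junction equations scatter the incoming force waves $(1.0, 0.2)$ to $(1.4, 0.6)$. The function name is hypothetical.

```python
import math

def normalized_junction(a, b, k):
    """Normalized-wave junction written as a rotation by theta = asin(k).

    a = f~+_{i-1}(t-T), b = f~-_i(t); requires -1 <= k <= 1.
    Returns (f~+_i(t), f~-_{i-1}(t+T)).
    """
    theta = math.asin(k)
    c, s = math.cos(theta), math.sin(theta)
    return c * a - s * b, s * a + c * b

R_left, R_right = 1.0, 3.0
k = (R_right - R_left) / (R_right + R_left)
a_n = 1.0 / math.sqrt(R_left)         # normalize the incoming force waves
b_n = 0.2 / math.sqrt(R_right)
x_n, y_n = normalized_junction(a_n, b_n, k)
# Matches the normalized force-wave result (1.4/sqrt(R_i), 0.6/sqrt(R_{i-1})).
print(math.isclose(x_n, 1.4 / math.sqrt(R_right)), math.isclose(y_n, 0.6 / math.sqrt(R_left)))
# A rotation preserves the sum of squares, i.e., total normalized power.
print(math.isclose(x_n ** 2 + y_n ** 2, a_n ** 2 + b_n ** 2))
```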

While it appears that scattering of normalized waves at a two-port junction requires four multiplies and two additions, it is possible to convert this to three multiplies and three additions using a two-multiply ``transformer'' to power-normalize an ordinary one-multiply junction [432].

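The transformer is a lossless two-port defined by [136]

$$f_i^+(t) = g_i\,f_{i-1}^+(t), \qquad f_{i-1}^-(t) = \frac{1}{g_i}\,f_i^-(t).$$

The transformer can be thought of as a device which steps the wave impedance to a new value without scattering; instead, the traveling signal power is redistributed among the force and velocity wave variables to satisfy the fundamental relations

$$f_{i-1}^\pm = \pm R_{i-1} v_{i-1}^\pm, \qquad f_i^\pm = \pm R_i v_i^\pm.$$

The transformer coefficient is

$$g_i = \sqrt{\frac{R_i}{R_{i-1}}},$$

as can be quickly derived by requiring the power carried by each traveling wave to be unchanged, e.g., $(f_{i-1}^+)^2/R_{i-1} = (f_i^+)^2/R_i$.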
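The following Python sketch implements an ideal transformer. It assumes the coefficient $g = \sqrt{R_{\text{to}}/R_{\text{from}}}$, applied as $g$ to the right-going force wave and $1/g$ to the left-going one. It then checks that the power $f^2/R$ of each traveling wave is unchanged. The function names are hypothetical.

```python
import math

def transformer(f_plus_in, f_minus_in, R_from, R_to):
    """Ideal transformer stepping the wave impedance from R_from to R_to.

    Assumes coefficient g = sqrt(R_to / R_from): the right-going force wave
    is multiplied by g and the left-going force wave by 1/g (no scattering).
    f_plus_in arrives on the R_from side; f_minus_in arrives on the R_to side.
    """
    g = math.sqrt(R_to / R_from)
    return g * f_plus_in, f_minus_in / g

def power(f, R):
    """Power carried by a traveling force wave f on wave impedance R (f^2 / R)."""
    return f * f / R

f_plus_out, f_minus_out = transformer(2.0, 3.0, 1.0, 4.0)   # g = 2
print(f_plus_out, f_minus_out)                               # 4.0 1.5
print(power(2.0, 1.0), power(f_plus_out, 4.0))               # 4.0 4.0
print(power(3.0, 4.0), power(f_minus_out, 1.0))              # 2.25 2.25
```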

Figure C.23 illustrates a three-multiply normalized-wave scattering junction [432]. The impedance of all waveguides (bidirectional delay lines) may be taken to be $1$. Scattering junctions may then be implemented as a denormalizing transformer (stepping the impedance from $1$ to $R_{i-1}$), a one-multiply scattering junction, and a renormalizing transformer (stepping the impedance from $R_i$ back to $1$). Either transformer may be commuted with the junction and combined with the other transformer to give a three-multiply normalized-wave scattering junction. (The transformers are combined on the left in Fig.C.23.)

In slightly more detail, a transformer with coefficient $\sqrt{R_{i-1}}$ steps the wave impedance (left to right) from $1$ to $R_{i-1}$. Equivalently, the normalized force wave $\tilde{f}_{i-1}^+$ is converted to unnormalized form $f_{i-1}^+ = \sqrt{R_{i-1}}\,\tilde{f}_{i-1}^+$. Next there is a physical scattering from impedance $R_{i-1}$ to $R_i$ (reflection coefficient $k_i = (R_i - R_{i-1})/(R_i + R_{i-1})$). The outgoing wave to the right is then normalized by a transformer with coefficient $1/\sqrt{R_i}$ to return the wave impedance back to $1$ for wave propagation within a normalized-wave delay line to the right. Finally, the right transformer is commuted left and combined with the left transformer to reduce the total computational complexity to three multiplies and three adds.
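The following Python sketch shows one way to arrange the three-multiply junction consistent with this description; the details in [432] may differ. The key observation is that scaling both sides of a one-multiply junction by the same factor leaves $k_i$ unchanged. The right-side renormalization by $1/\sqrt{R_i}$ can therefore be pushed to the left. This leaves a single left-side transformer with gain $\sqrt{R_{i-1}/R_i}$ for the incoming wave and its inverse for the outgoing wave. The function names are hypothetical, and the result is checked against the rotation form.

```python
import math

def three_multiply_normalized(a_n, b_n, R_left, R_right):
    """Normalized-wave two-port: combined transformer on the left plus a
    one-multiply junction (three multiplies, three adds per sample).

    a_n: normalized wave arriving from the left; b_n: from the right.
    Returns (normalized wave leaving to the right, leaving to the left).
    """
    # Per-junction constants (computed once, not per sample).
    k = (R_right - R_left) / (R_right + R_left)   # reflection coefficient
    g = math.sqrt(R_left / R_right)               # combined left-side transformer
    g_inv = 1.0 / g
    a = g * a_n                         # multiply 1
    f_delta = k * (a - b_n)             # multiply 2, add 1
    out_right = a + f_delta             # add 2
    out_left = g_inv * (b_n + f_delta)  # add 3, multiply 3
    return out_right, out_left

def rotation_form(a_n, b_n, R_left, R_right):
    """Reference: the normalized junction as a 2D rotation (four multiplies)."""
    k = (R_right - R_left) / (R_right + R_left)
    c = math.sqrt(1.0 - k * k)
    return c * a_n - k * b_n, k * a_n + c * b_n

for R_left, R_right in [(1.0, 3.0), (5.0, 0.5), (2.0, 2.0)]:
    p = three_multiply_normalized(0.7, -0.3, R_left, R_right)
    q = rotation_form(0.7, -0.3, R_left, R_right)
    print(all(math.isclose(u, v, abs_tol=1e-12) for u, v in zip(p, q)))  # True
```

Algebraically, $(1+k_i)\sqrt{R_{i-1}/R_i} = (1-k_i)\sqrt{R_i/R_{i-1}} = 2\sqrt{R_{i-1}R_i}/(R_{i-1}+R_i) = \sqrt{1-k_i^2}$, which is why the two forms agree.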

It is important to notice that transformer-normalized junctions may have a large dynamic range in practice. For example, if ![]() , then Eq.(C.69) shows that the transformer coefficients may become as large as

![]() . If