Uniform Distribution

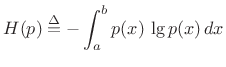

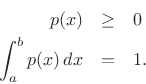

Among probability distributions $p(x)$ which are nonzero over a finite range of values $x\in[a,b]$, the maximum-entropy distribution is the uniform distribution. To show this, we must maximize the entropy,

$$H(p) \triangleq -\int_a^b p(x)\,\ln p(x)\,dx \tag{D.33}$$

with respect to $p(x)$, subject to the constraints $p(x)\geq 0$ and $\int_a^b p(x)\,dx = 1$.

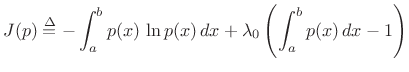

Using the method of Lagrange multipliers for optimization in the presence of constraints [86], we may form the objective function

|

(D.34) |

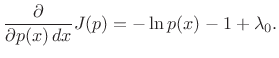

and differentiate with respect to

|

(D.35) |

Setting this to zero and solving for

| (D.36) |

(Setting the partial derivative with respect to

Choosing ![]() to satisfy the constraint gives

to satisfy the constraint gives

![]() , yielding

, yielding

![$\displaystyle p(x) = \left\{\begin{array}{ll} \frac{1}{b-a}, & a\leq x \leq b \\ [5pt] 0, & \hbox{otherwise}. \\ \end{array} \right.$](http://www.dsprelated.com/josimages_new/sasp2/img2812.png) |

(D.37) |
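As a quick check on the result, the uniform density can be substituted back into the entropy integral $H(p) = -\int_a^b p(x)\,\ln p(x)\,dx$. The natural logarithm is assumed here, so the value is in nats:

$$H(p) = -\int_a^b \frac{1}{b-a}\,\ln\!\left(\frac{1}{b-a}\right)dx = \ln(b-a).$$

The maximum entropy therefore depends only on the width $b-a$ of the support. It is positive for $b-a>1$, zero for $b-a=1$, and negative for $b-a<1$.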

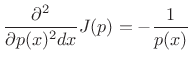

That this solution is a maximum rather than a minimum or inflection point can be verified by ensuring that the second partial derivative of the objective is negative for all $x$:

$$\frac{\partial^2}{\partial p(x)^2\,dx} J(p) = -\frac{1}{p(x)} \tag{D.38}$$

Since the solution spontaneously satisfied $p(x)>0$, it is a maximum.
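The maximality claim can also be checked numerically. The following is a minimal sketch, not part of the original derivation: it discretizes $[a,b]$ into bins, approximates the entropy integral by a Riemann sum, and compares the uniform density against a few alternatives supported on the same interval. The endpoints `a = 2`, `b = 5`, the bin count, and the comparison densities (triangular, Gaussian-shaped bump, randomly perturbed uniform) are all illustrative assumptions.

```python
import math
import random


def differential_entropy(p, dx):
    """Riemann-sum approximation of -∫ p(x) ln p(x) dx, with 0*ln(0) taken as 0."""
    return -sum(v * math.log(v) for v in p if v > 0) * dx


def normalize(p, dx):
    """Scale sampled values so that the Riemann sum of the density equals 1."""
    total = sum(p) * dx
    return [v / total for v in p]


# Illustrative interval and grid (assumed values, not from the text).
a, b = 2.0, 5.0
n = 10_000
dx = (b - a) / n
mid = (a + b) / 2
x = [a + (i + 0.5) * dx for i in range(n)]  # bin midpoints

# Candidate densities, all supported on [a, b].
uniform = [1.0 / (b - a)] * n
triangular = normalize([1.0 - abs(xi - mid) / ((b - a) / 2) for xi in x], dx)
bump = normalize([math.exp(-0.5 * ((xi - mid) / 0.5) ** 2) for xi in x], dx)

# Uniform density with a small zero-mean multiplicative perturbation;
# it stays positive and still integrates to 1 (up to rounding).
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(n)]
mean = sum(noise) / n
perturbed = [u * (1.0 + 0.1 * (e - mean)) for u, e in zip(uniform, noise)]

entropies = {
    "uniform": differential_entropy(uniform, dx),
    "triangular": differential_entropy(triangular, dx),
    "bump": differential_entropy(bump, dx),
    "perturbed": differential_entropy(perturbed, dx),
}

for name, h in entropies.items():
    print(f"{name:>10s}: H = {h:.6f} nats")
print(f"ln(b - a) = {math.log(b - a):.6f} nats")
```

In this sketch the uniform density attains $\ln(b-a)$ and every other candidate falls below it, including the slightly perturbed uniform density. That is the behavior one expects at a maximum.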
