Transfer Function Models

For linear time-invariant systems, rather than build an explicit discrete-time model as in §7.3 for each mass, spring, and dashpot, (or inductor, capacitor, and resistor for virtual analog models), we may instead choose to model only the transfer function between selected inputs and outputs of the physical system. It should be emphasized that this is an option only when the relevant portion of the system is linear and time invariant (LTI), or at least sufficiently close to LTI. Transfer-function modeling can be considered a kind of ``large-scale'' or ``macroscopic'' modeling in which an entire physical subsystem, such as a guitar body, is modeled by a single transfer function relating specific inputs and outputs. (A transfer function can also of course be a matrix relating a vector of inputs to a vector of outputs [220].) Such models are used extensively in the field of control system design [150].9.1

Transfer-function modeling is often the most cost-effective way to incorporate LTI lumped elements (Ch. 7) in an otherwise physical computational model. For wave-propagating distributed systems, on the other hand, such as vibrating strings and acoustic tubes, digital waveguides models (Ch. 6) are more efficient than transfer-function models, in addition to having a precise physical interpretation that transfer-function coefficients lack. In models containing lumped elements, or distributed components that are not characterized by wave propagation, maximum computational efficiency is typically obtained by deciding which LTI portions of the model can be ``frozen'' as ``black boxes'' characterized only by their transfer functions. In return for increased computational efficiency, we sacrifice the ability to access the interior of the black box in a physically meaningful way.

An example where such ``macroscopic'' transfer-function modeling is normally applied is the trumpet bell (§9.7.2). A fine-grained model might use a piecewise cylindrical or piecewise conical approximation to the flaring bell [71]. However, there is normally no need for an explicit bell model in a practical virtual instrument when the bell is assumed to be LTI and spatial directivity variations are neglected. In such cases, the transmittance and reflectance of the bell can be accurately summarized by digital filters having frequency responses that are optimal approximations to the measured (or theoretical) bell responses (§9.7). However, it is then not so easy to insert a moveable virtual ``mute'' into the bell reflectance/transmittance filters. This is an example of the general trade-off between physical extensibilty and computational efficiency/parsimony.

Outline

In this chapter, we will look at a variety of ways to digitize

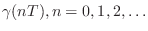

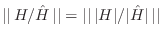

macroscopic point-to-point transfer functions ![]() corresponding to a desired impulse response

corresponding to a desired impulse response ![]() :

:

- Sampling

to get

to get

- Pole mappings (such as

followed by Prony's method)

followed by Prony's method)

- Modal expansion

- Frequency-response matching using digital filter design methods

Next, we'll look at the more specialized technique known as commuted synthesis, in which computational efficiency may be greatly increased by interchanging (``commuting'') the series order of component transfer functions. Commuted synthesis delivers large gains in efficiency for systems with a short excitation and high-order resonators, such plucked and struck strings. In Chapter 9, commuted synthesis is applied to piano modeling.

Extracting the least-damped modes of a transfer function for separate parametric implementation is often used in commuted synthesis. We look at a number of ways to accomplish this goal toward the end of this chapter.

We close the chapter with a simple example of transfer-function modeling applied to the digital phase shifter audio effect. This example classifies as virtual analog modeling, in which a valued analog device is converted to digital form in a way that preserves all valued features of the original. Further examples of transfer-function models appear in Chapter 9.

Sampling the Impulse Response

Sampling the impulse response of a system is of course quite elementary. The main thing to watch out for is aliasing, and the main disadvantage is high computational complexity when the impulse-response is long.

Since we have defined (in §7.2) the driving-point

admittance ![]() as the nominal transfer function of a system

port, corresponding to defining the input as driving force and the

output as resulting velocity (see Fig.7.3), we have that

as the nominal transfer function of a system

port, corresponding to defining the input as driving force and the

output as resulting velocity (see Fig.7.3), we have that

![]() is defined as the system impulse response. Note, however,

that the driving force and observed velocity need not be at the same

physical point, and in general we may freely define any physical input

and output points. Nevertheless, if the outputs are in velocity units

and the inputs are in force units, then the transfer-function matrix

will have units of admittance, and we will assume this for simplicity.

is defined as the system impulse response. Note, however,

that the driving force and observed velocity need not be at the same

physical point, and in general we may freely define any physical input

and output points. Nevertheless, if the outputs are in velocity units

and the inputs are in force units, then the transfer-function matrix

will have units of admittance, and we will assume this for simplicity.

Sampling the impulse response can be expressed mathematically as

![]() .9.2 In practice, we can only record a finite number of

impulse-response samples. Usually a graceful taper (e.g., using the

right half of an FFT window, such as the Hann window) yields better

results than simple truncation. The system model is then implemented

as a Finite Impulse Response (FIR) digital filter (§2.5.4). The

next section describes the related impulse-invariant method for

digital filter design which derives an infinite impulse

response (IIR) digital filter that matches the analog filter impulse

response exactly at the sampling times.

.9.2 In practice, we can only record a finite number of

impulse-response samples. Usually a graceful taper (e.g., using the

right half of an FFT window, such as the Hann window) yields better

results than simple truncation. The system model is then implemented

as a Finite Impulse Response (FIR) digital filter (§2.5.4). The

next section describes the related impulse-invariant method for

digital filter design which derives an infinite impulse

response (IIR) digital filter that matches the analog filter impulse

response exactly at the sampling times.

Sampling the impulse response has the advantage of preserving resonant frequencies (see next section), but its big disadvantage is aliasing of the frequency response. No ``system'' is truly bandlimited. For example, even a simple mass and dashpot with a nonzero initial condition produces a continuous decaying exponential response that is not bandlimited.

Before a continuous impulse response is sampled, a lowpass filter should be considered for eliminating all frequency components at half the sampling rate and above. In other words, the system itself should be ``lowpassed'' to avoid aliasing in many applications. (On the other hand, there are also many applications in which the frequency-response aliasing is not objectional to the ear.) If the system is linear and time invariant, and if we excite the system with input signals and initial conditions that are similarly bandlimited to less than half the sampling rate, no signal inside the system or appearing at the outputs will be aliased. In other words, these conditions yield an ideal bandlimited system simulation that remains exact (for the bandlimited signals) at the sampling instants.

Note, however, that time variation or nonlinearity (both common in practical instruments), together with feedback, will ``pump'' the signal spectrum higher and higher until aliasing is ultimately encountered (see §6.13). For this reason, feedback loops in the digital system may need additional lowpass filtering to attenuate newly generated high frequencies.

A sampled impulse response is an example of a nonparametric representation of a linear, time-invariant system. It is not usually regarded as a physical model, even when the impulse-response samples have a physical interpretation (such as when no anti-aliasing filter is used).

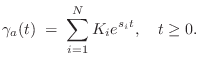

Impulse Invariant Method

The impulse-invariant method converts analog filter transfer

functions to digital filter transfer functions in such a way that the

impulse response is the same (invariant) at the sampling

instants [343], [362, pp.

216-219]. Thus, if ![]() denotes the

impulse-response of an analog (continuous-time) filter, then the

digital (discrete-time) filter given by the impulse-invariant method

will have impulse response

denotes the

impulse-response of an analog (continuous-time) filter, then the

digital (discrete-time) filter given by the impulse-invariant method

will have impulse response

![]() , where

, where ![]() denotes the

sampling interval in seconds. Moreover, the order of the filter is

preserved, and IIR analog filters map to IIR digital filters.

However, the digital filter's frequency response is an aliased

version of the analog filter's frequency

response.9.3

denotes the

sampling interval in seconds. Moreover, the order of the filter is

preserved, and IIR analog filters map to IIR digital filters.

However, the digital filter's frequency response is an aliased

version of the analog filter's frequency

response.9.3

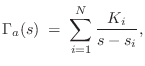

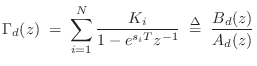

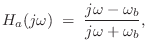

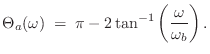

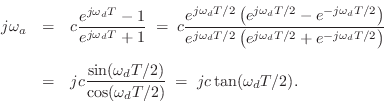

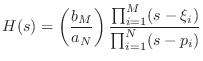

To derive the impulse-invariant method, we begin with the analog transfer function

and perform a partial fraction expansion (PFE) down to first-order terms [449]:9.4

and the residues have remained unchanged. Clearly we must have

Note that the series combination of two digital filters designed by the impulse-invariant method is not impulse invariant. In other terms, the convolution of two sampled analog signals is not the same as the sampled convolution of those analog signals. This is easy to see when aliasing is considered. For example, let one signal be the impulse response of an ideal lowpass filter cutting off below half the sampling rate. Then this signal will not alias when sampled, and its convolution with any second signal will similarly not alias when sampled. However, if the second signal does alias upon sampling, then this aliasing is gone when the convolution precedes the sampling, and the results cannot be the same in the two cases.

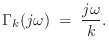

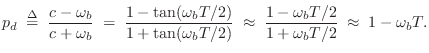

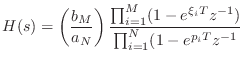

Matched Z Transformation

The matched z transformation uses the same pole-mapping

Eq.![]() (8.2) as in the impulse-invariant method, but the zeros

are handled differently. Instead of only mapping the poles of the

partial fraction expansion and letting the zeros fall where they may,

the matched z transformation maps both the poles and zeros in the

factored form of the transfer function [362, pp.

224-226].

(8.2) as in the impulse-invariant method, but the zeros

are handled differently. Instead of only mapping the poles of the

partial fraction expansion and letting the zeros fall where they may,

the matched z transformation maps both the poles and zeros in the

factored form of the transfer function [362, pp.

224-226].

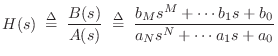

The factored form [449] of a transfer function

can be written as

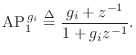

The matched z transformation is carried out by replacing each first-order term of the form

to get

Thus, the matched z transformation normally yields different digital zeros than the impulse-invariant method. The impulse-invariant method is generally considered superior to the matched z transformation [343].

Relation to Finite Difference Approximation

The Finite Difference Approximation (FDA) (§7.3.1) is a

special case of the matched ![]() transformation applied to the point

transformation applied to the point

![]() . To see this, simply set

. To see this, simply set ![]() in Eq.

in Eq.![]() (8.5) to obtain

(8.5) to obtain

which is the FDA definition in the frequency domain given in Eq.

Since the FDA equals the match z transformation for the point ![]() , it maps

analog dc (

, it maps

analog dc (![]() ) to digital dc (

) to digital dc (![]() ) exactly. However, that is the

only point on the frequency axis that is perfectly mapped, as shown in

Fig.7.15.

) exactly. However, that is the

only point on the frequency axis that is perfectly mapped, as shown in

Fig.7.15.

Pole Mapping with Optimal Zeros

We saw in the preceding sections that both the impulse-invariant and

the matched-![]() transformations map poles from the left-half

transformations map poles from the left-half ![]() plane

to the interior of the unit circle in the

plane

to the interior of the unit circle in the ![]() plane via

plane via

where

Therefore, an obvious generalization is to map the poles according to

Eq.![]() (8.8), but compute the zeros in some optimal way, such as by

Prony's method [449, p. 393],[273,297].

(8.8), but compute the zeros in some optimal way, such as by

Prony's method [449, p. 393],[273,297].

It is hard to do better Eq.![]() (8.8) as a pole mapping from

(8.8) as a pole mapping from ![]() to

to

![]() , when aliasing is avoided, because it preserves both the resonance

frequency and bandwidth for a complex pole [449]. Therefore,

good practical modeling results can be obtained by optimizing the

zeros (residues) to achieve audio criteria given these fixed poles.

Alternatively, only the least-damped poles need be constrained in this

way, e.g., to fix and preserve the most important resonances of a

stringed-instrument body or acoustic space.

, when aliasing is avoided, because it preserves both the resonance

frequency and bandwidth for a complex pole [449]. Therefore,

good practical modeling results can be obtained by optimizing the

zeros (residues) to achieve audio criteria given these fixed poles.

Alternatively, only the least-damped poles need be constrained in this

way, e.g., to fix and preserve the most important resonances of a

stringed-instrument body or acoustic space.

Modal Expansion

A well known approach to transfer-function modeling is called modal synthesis, introduced in §1.3.9 [5,299,6,145,381,30]. Modal synthesis may be defined as constructing a source-filter synthesis model in which the filter transfer function is implemented as a sum of first- and/or second-order filter sections (i.e., as a parallel filter bank in which each filter is at most second-order--this was reviewed in §1.3.9). In other words, the physical system is represented as a superposition of individual modes driven by some external excitation (such as a pluck or strike).

In acoustics, the term mode of vibration, or normal mode, normally refers to a single-frequency spatial eigensolution of the governing wave equation. For example, the modes of an ideal vibrating string are the harmonically related sinusoidal string-shapes having an integer number of uniformly spaced zero-crossings (or nodes) along the string, including its endpoints. As first noted by Daniel Bernoulli (§A.2), acoustic vibration can be expressed as a superposition of component sinusoidal vibrations, i.e., as a superposition of modes.9.6

When a single mode is excited by a sinusoidal driving force, all points of the physical object vibrate at the same temporal frequency (cycles per second), and the mode shape becomes proportional to the spatial amplitude envelope of the vibration. The sound emitted from the top plate of a guitar, for example, can be represented as a weighted sum of the radiation patterns of the respective modes of the top plate, where the weighting function is constructed according to how much each mode is excited (typically by the guitar bridge) [143,390,109,205,209].

The impulse-invariant method (§8.2), can be considered a

special case of modal synthesis in which a continuous-time ![]() -plane

transfer function is given as a starting point. More typically, modal

synthesis starts with a measured frequency response, and a

second-order parallel filter bank is fit to that in some way. In

particular, any filter-design technique may be used (§8.6),

followed by a conversion to second-order parallel form.

-plane

transfer function is given as a starting point. More typically, modal

synthesis starts with a measured frequency response, and a

second-order parallel filter bank is fit to that in some way. In

particular, any filter-design technique may be used (§8.6),

followed by a conversion to second-order parallel form.

Modal expansions find extensive application in industry for determining parametric frequency responses (superpositions of second-order modes) from measured vibration data [299]. Each mode is typically parametrized in terms of its resonant frequency, bandwidth (or damping), and gain (most generally complex gain, to include phase).

Modal synthesis can also be seen as a special case of source-filter synthesis, which may be defined as any signal model based on factoring a sound-generating process into a filter driven by some (usually relatively simple) excitation signal. An early example of source-filter synthesis is the vocoder (§A.6.1). Whenever the filter in a source-filter model is implemented as a parallel second-order filter bank, we can call it modal synthesis (although, strictly speaking, each filter section should correspond to a resonant mode of the modeled resonant system).

Also related to modal synthesis is so-called formant synthesis, used extensively in speech synthesis [219,255,389,40,81,491,490,313,363,297]. A formant is simply a filter resonance having some center-frequency, bandwidth, and (sometimes specified) gain. Thus, a formant corresponds to a single mode of vibration in the vocal tract. Many text-to-speech systems in use today are based on the Klatt formant synthesizer for speech [255].

Since the importance of spectral formants in sound synthesis has more to do with the way we hear than with the physical parameters of a system, formant synthesis is probably best viewed as a spectral modeling synthesis method [456], as opposed to a physical modeling technique [435]. An exception to this rule may occur when the system consists physically of a parallel bank of second-order resonators, such as an array of tuning forks or Helmholtz resonators. In such a case, the mode parameters correspond to physically independent objects; this is of course rare in practice.

In the linear, time-invariant case, only modes in the range of human hearing need to be retained in the model. Also, any ``uncontrollable'' or ``unobservable'' modes should obviously be left out as well. With these simplificiation, the modal representation is generally more efficient than an explicit mass-spring-dashpot digitization (§7.3). On the other hand, since the modal representation is usually not directly physical, nonlinear extensions may be difficult and/or behave unnaturally.

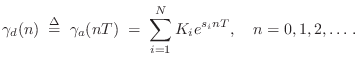

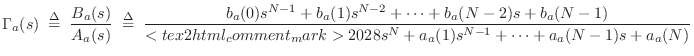

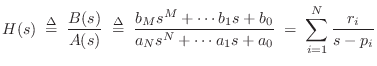

As in the impulse-invariant method (§8.2), starting with an

order ![]() transfer function

transfer function ![]() describing the input-output

behavior of a physical system, a modal description can be obtained

immediately from the partial fraction expansion (PFE):

describing the input-output

behavior of a physical system, a modal description can be obtained

immediately from the partial fraction expansion (PFE):

where

|

(9.10) |

Let

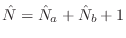

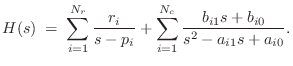

The real poles, if any, can be paired off into a sum of second-order sections as well, with one first-order section left over when

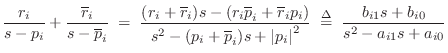

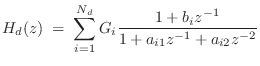

A typical practical implementation of each mode in the sum is by means of second-order filter, or biquad section [449]. A biquad section is easily converted from continuous- to discrete-time form, such as by the impulse-invariant method of §8.2. The final discrete-time model then consist of a parallel bank of second-order digital filters

where

Expressing complex poles of ![]() in polar form as

in polar form as

![]() , (where now we assume

, (where now we assume ![]() denotes a pole in the

denotes a pole in the ![]() plane), we obtain the classical relations [449]

plane), we obtain the classical relations [449]

These parameters are in turn related to the modal parameters resonance frequency

Often ![]() and

and ![]() are estimated from a measured log-magnitude

frequency-response (see §8.8 for a discussion of various methods).

are estimated from a measured log-magnitude

frequency-response (see §8.8 for a discussion of various methods).

It remains to determine the overall section gain ![]() in

Eq.

in

Eq.![]() (8.12), and the coefficient

(8.12), and the coefficient ![]() determining the zero location.

If

determining the zero location.

If ![]() (as is common in practice, since the human ear is not very

sensitive to spectral nulls), then

(as is common in practice, since the human ear is not very

sensitive to spectral nulls), then ![]() can be chosen to match the

measured peak height. A particularly simple automatic method is to

use least-squares minimization to compute

can be chosen to match the

measured peak height. A particularly simple automatic method is to

use least-squares minimization to compute ![]() using Eq.

using Eq.![]() (8.12)

with

(8.12)

with ![]() and

and ![]() fixed (and

fixed (and ![]() ). Generally speaking,

least-squares minimization is always immediate when the error to be

minimized is linear in the unknown parameter (in this case

). Generally speaking,

least-squares minimization is always immediate when the error to be

minimized is linear in the unknown parameter (in this case ![]() ).

).

To estimate ![]() in Eq.

in Eq.![]() (8.12), an effective method is to work

initially with a complex, first-order partial fraction

expansion, and use least squares to determine complex gain

coefficients

(8.12), an effective method is to work

initially with a complex, first-order partial fraction

expansion, and use least squares to determine complex gain

coefficients ![]() for first-order (complex) terms of the form

for first-order (complex) terms of the form

Sections implementing two real poles are not conveniently specified in

terms of resonant frequency and bandwidth. In such cases, it is more

common to work with the filter coefficients ![]() and

and ![]() directly,

as they are often computed by system-identification software

[288,428] or general purpose filter design

utilities, such as in Matlab or Octave [443].

directly,

as they are often computed by system-identification software

[288,428] or general purpose filter design

utilities, such as in Matlab or Octave [443].

To summarize, numerous techniques exist for estimating modal parameters from measured frequency-response data, and some of these are discussed in §8.8 below. Specifically, resonant modes can be estimated from the magnitude, phase, width, and location of peaks in the Fourier transform of a recorded (or estimated) impulse response Another well known technique is Prony's method [449, p. 393], [297]. There is also a large literature on other methods for estimating the parameters of exponentially decaying sinusoids; examples include the matrix-pencil method [274], and parametric spectrum analysis in general [237].

State Space Approach to Modal Expansions

The preceding discussion of modal synthesis was based primarily on fitting a sum of biquads to measured frequency-response peaks. A more general way of arriving at a modal representation is to first form a state space model of the system [449], and then convert to the modal representation by diagonalizing the state-space model. This approach has the advantage of preserving system behavior between the given inputs and outputs. Specifically, the similarity transform used to diagonalize the system provides new input and output gain vectors which properly excite and observe the system modes precisely as in the original system. This procedure is especially more convenient than the transfer-function based approach above when there are multiple inputs and outputs. For some mathematical details, see [449]9.7For a related worked example, see §C.17.6.

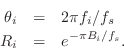

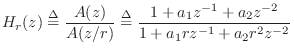

Delay Loop Expansion

When a subset of the resonating modes are nearly harmonically tuned, it can be much more computationally efficient to use a filtered delay loop (see §2.6.5) to generate an entire quasi-harmonic series of modes rather than using a biquad for each modal peak [439]. In this case, the resonator model becomes

Note that when ![]() is close to

is close to ![]() instead of

instead of ![]() , primarily

only odd harmonic resonances are produced, as has been used in

modeling the clarinet [431].

, primarily

only odd harmonic resonances are produced, as has been used in

modeling the clarinet [431].

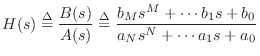

Frequency-Response Matching Using

Digital Filter Design Methods

Given force inputs and velocity outputs, the frequency response

of an ideal mass was given in Eq.![]() (7.1.2) as

(7.1.2) as

where we assume

Ideal Differentiator (Spring Admittance)

Figure 8.1 shows a graph of the frequency response of the

ideal differentiator (spring admittance). In principle, a

digital differentiator is a filter whose frequency response

![]() optimally approximates

optimally approximates ![]() for

for ![]() between

between ![]() and

and ![]() . Similarly, a digital integrator must

match

. Similarly, a digital integrator must

match ![]() along the unit circle in the

along the unit circle in the ![]() plane. The reason

an exact match is not possible is that the ideal frequency responses

plane. The reason

an exact match is not possible is that the ideal frequency responses

![]() and

and ![]() , when wrapped along the unit circle in the

, when wrapped along the unit circle in the

![]() plane, are not ``smooth'' functions any more (see

Fig.8.1). As a result, there is no filter with a

rational transfer function (i.e., finite order) that can match the

desired frequency response exactly.

plane, are not ``smooth'' functions any more (see

Fig.8.1). As a result, there is no filter with a

rational transfer function (i.e., finite order) that can match the

desired frequency response exactly.

![\includegraphics[scale=0.9]{eps/f_ideal_diff_fr_cropped}](http://www.dsprelated.com/josimages_new/pasp/img1805.png) |

The discontinuity at ![]() alone is enough to ensure that no

finite-order digital transfer function exists with the desired

frequency response. As with bandlimited interpolation (§4.4),

it is good practice to reserve a ``guard band'' between the highest

needed frequency

alone is enough to ensure that no

finite-order digital transfer function exists with the desired

frequency response. As with bandlimited interpolation (§4.4),

it is good practice to reserve a ``guard band'' between the highest

needed frequency

![]() (such as the limit of human hearing) and half

the sampling rate

(such as the limit of human hearing) and half

the sampling rate ![]() . In the guard band

. In the guard band

![]() , digital

filters are free to smoothly vary in whatever way gives the best

performance across frequencies in the audible band

, digital

filters are free to smoothly vary in whatever way gives the best

performance across frequencies in the audible band

![]() at the

lowest cost. Figure 8.2 shows an example.

Note that, as with filters used for bandlimited

interpolation, a small increment in oversampling factor yields a much

larger decrease in filter cost (when the sampling rate is near

at the

lowest cost. Figure 8.2 shows an example.

Note that, as with filters used for bandlimited

interpolation, a small increment in oversampling factor yields a much

larger decrease in filter cost (when the sampling rate is near

![]() ).

).

In the general case of Eq.![]() (8.14) with

(8.14) with ![]() , digital filters

can be designed to implement arbitrarily accurate admittance transfer

functions by finding an optimal rational approximation to the complex

function of a single real variable

, digital filters

can be designed to implement arbitrarily accurate admittance transfer

functions by finding an optimal rational approximation to the complex

function of a single real variable ![]()

Digital Filter Design Overview

This section (adapted from [428]), summarizes some of the more commonly used methods for digital filter design aimed at matching a nonparametric frequency response, such as typically obtained from input/output measurements. This problem should be distinguished from more classical problems with their own specialized methods, such as designing lowpass, highpass, and bandpass filters [343,362], or peak/shelf equalizers [559,449], and other utility filters designed from a priori mathematical specifications.

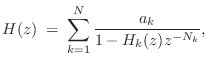

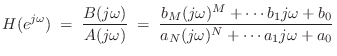

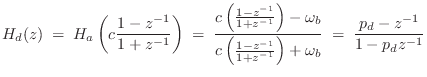

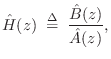

The problem of fitting a digital filter to a prescribed frequency

response may be formulated as follows. To simplify, we set ![]() .

.

Given a continuous complex function

![]() ,

corresponding to a causal desired frequency response,9.8 find a stable digital filter of the form

,

corresponding to a causal desired frequency response,9.8 find a stable digital filter of the form

| (9.15) | |||

| (9.16) |

with

![]() given, such that some norm of the error

given, such that some norm of the error

is minimum with respect to the filter coefficients

The approximate filter ![]() is typically constrained to be

stable, and since

is typically constrained to be

stable, and since

![]() is causal (no positive powers of

is causal (no positive powers of ![]() ),

stability implies causality. Consequently, the impulse response of the

model

),

stability implies causality. Consequently, the impulse response of the

model

![]() is zero for

is zero for ![]() .

.

The filter-design problem is then to find a (strictly) stable

![]() -pole,

-pole,

![]() -zero digital filter which minimizes some norm of

the error in the frequency-response. This is fundamentally

rational approximation of a complex function of a real

(frequency) variable, with constraints on the poles.

-zero digital filter which minimizes some norm of

the error in the frequency-response. This is fundamentally

rational approximation of a complex function of a real

(frequency) variable, with constraints on the poles.

While the filter-design problem has been formulated quite naturally,

it is difficult to solve in practice. The strict stability assumption

yields a compact space of filter coefficients

![]() , leading to the

conclusion that a best approximation

, leading to the

conclusion that a best approximation

![]() exists over this

domain.9.9Unfortunately, the norm of the error

exists over this

domain.9.9Unfortunately, the norm of the error

![]() typically is

not a convex9.10function of the filter coefficients on

typically is

not a convex9.10function of the filter coefficients on

![]() . This

means that algorithms based on gradient descent may fail to find an

optimum filter due to their premature termination at a suboptimal

local minimum of

. This

means that algorithms based on gradient descent may fail to find an

optimum filter due to their premature termination at a suboptimal

local minimum of

![]() .

.

Fortunately, there is at least one norm whose global minimization may be accomplished in a straightforward fashion without need for initial guesses or ad hoc modifications of the complex (phase-sensitive) IIR filter-design problem--the Hankel norm [155,428,177,36]. Hankel norm methods for digital filter design deliver a spontaneously stable filter of any desired order without imposing coefficient constraints in the algorithm.

An alternative to Hankel-norm approximation is to reformulate the

problem, replacing Eq.![]() (8.17) with a modified error criterion so that

the resulting problem can be solved by linear least-squares or

convex optimization techniques. Examples include

(8.17) with a modified error criterion so that

the resulting problem can be solved by linear least-squares or

convex optimization techniques. Examples include

- Pseudo-norm minimization:

(Pseudo-norms can be zero for nonzero functions.)

For example, Padé approximation falls in this category.

In Padé approximation, the first

samples

of the impulse-response

samples

of the impulse-response  of

of  are matched exactly,

and the error in the remaining impulse-response samples is ignored.

are matched exactly,

and the error in the remaining impulse-response samples is ignored.

- Ratio Error: Minimize

subject to

subject to

. Minimizing the

. Minimizing the  norm of the ratio error

yields the class of methods known as linear prediction

techniques [20,296,297]. Since,

by the definition of a norm, we have

norm of the ratio error

yields the class of methods known as linear prediction

techniques [20,296,297]. Since,

by the definition of a norm, we have

,

it follows that

,

it follows that

; therefore,

ratio error methods ignore the phase of the

approximation. It is also evident that ratio error is

minimized by making

; therefore,

ratio error methods ignore the phase of the

approximation. It is also evident that ratio error is

minimized by making

larger than

larger than

.9.11 For this reason, ratio-error

methods are considered most appropriate for modeling the

spectral envelope of

.9.11 For this reason, ratio-error

methods are considered most appropriate for modeling the

spectral envelope of

. It is well known

that these methods are fast and exceedingly robust in

practice, and this explains in part why they are used almost

exclusively in some data-intensive applications such as speech

modeling and other spectral-envelope applications. In some

applications, such as adaptive control or forecasting, the

fact that linear prediction error is minimized can justify

their choice.

. It is well known

that these methods are fast and exceedingly robust in

practice, and this explains in part why they are used almost

exclusively in some data-intensive applications such as speech

modeling and other spectral-envelope applications. In some

applications, such as adaptive control or forecasting, the

fact that linear prediction error is minimized can justify

their choice.

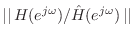

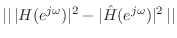

- Equation error: Minimize

When the

![$\displaystyle \left\Vert\,{\hat A}(e^{j\omega})H(e^{j\omega})-{\hat B}(e^{j\ome...

...}(e^{j\omega})\left[ H(e^{j\omega})-{\hat H}(e^{j\omega})\right]\,\right\Vert.

$](http://www.dsprelated.com/josimages_new/pasp/img1848.png)

norm of equation-error is minimized, the

problem becomes solving a set of

norm of equation-error is minimized, the

problem becomes solving a set of

linear

equations.

linear

equations.

The above expression makes it clear that equation-error can be seen as a frequency-response error weighted by

. Thus, relatively large errors can be

expected where the poles of the optimum approximation (roots

of

. Thus, relatively large errors can be

expected where the poles of the optimum approximation (roots

of

) approach the unit circle

) approach the unit circle  . While this may

make the frequency-domain formulation seem ill-posed, in the

time-domain, linear prediction error is minimized in

the

. While this may

make the frequency-domain formulation seem ill-posed, in the

time-domain, linear prediction error is minimized in

the  sense, and in certain applications this is ideal.

(Equation-error methods thus provide a natural extension of

ratio-error methods to include zeros.) Using so-called

Steiglitz-McBride iterations

[287,449,288], the

equation-error solution iteratively approaches the

norm-minimizing solution of Eq.

sense, and in certain applications this is ideal.

(Equation-error methods thus provide a natural extension of

ratio-error methods to include zeros.) Using so-called

Steiglitz-McBride iterations

[287,449,288], the

equation-error solution iteratively approaches the

norm-minimizing solution of Eq. (8.17) for the L2

norm.

(8.17) for the L2

norm.

Examples of minimizing equation error using the matlab function invfreqz are given in §8.6.3 and §8.6.4 below. See [449, Appendix I] (based on [428, pp. 48-50]) for a discussion of equation-error IIR filter design and a derivation of a fast equation-error method based on the Fast Fourier Transform (FFT) (used in invfreqz).

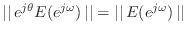

- Conversion to real-valued approximation: For

example, power spectrum matching, i.e., minimization of

, is possible

using the Chebyshev or

, is possible

using the Chebyshev or

norm [428]. Similarly,

linear-phase filter design can be carried out with some

guarantees, since again the problem reduces to real-valued

approximation on the unit circle. The essence of these

methods is that the phase-response is eliminated from

the error measure, as in the norm of the ratio error, in order

to convert a complex approximation problem into a real one.

Real rational approximation of a continuous curve appears to

be solved in principle only under the

norm [428]. Similarly,

linear-phase filter design can be carried out with some

guarantees, since again the problem reduces to real-valued

approximation on the unit circle. The essence of these

methods is that the phase-response is eliminated from

the error measure, as in the norm of the ratio error, in order

to convert a complex approximation problem into a real one.

Real rational approximation of a continuous curve appears to

be solved in principle only under the

norm

[373,374].

norm

[373,374].

- Decoupling poles and zeros: An effective example of

this approach is Kopec's method [428] which consists of

using ratio error to find the poles, computing the error

spectrum

, inverting it, and fitting poles again (to

, inverting it, and fitting poles again (to

). There is a wide variety of methods which first

fit poles and then zeros. None of these methods produce

optimum filters, however, in any normal sense.

). There is a wide variety of methods which first

fit poles and then zeros. None of these methods produce

optimum filters, however, in any normal sense.

In addition to these modifications, sometimes it is necessary to

reformulate the problem in order to achieve a different goal. For

example, in some audio applications, it is desirable to minimize the

log-magnitude frequency-response error. This is due to the way

we hear spectral distortions in many circumstances. A technique which

accomplishes this objective to the first order in the

![]() norm is

described in [428].

norm is

described in [428].

Sometimes the most important spectral structure is confined to an interval of the frequency domain. A question arises as to how this structure can be accurately modeled while obtaining a cruder fit elsewhere. The usual technique is a weighting function versus frequency. An alternative, however, is to frequency-warp the problem using a first-order conformal map. It turns out a first-order conformal map can be made to approximate very well frequency-resolution scales of human hearing such as the Bark scale or ERB scale [459]. Frequency-warping is especially valuable for providing an effective weighting function connection for filter-design methods, such as the Hankel-norm method, that are intrinsically do not offer choice of a weighted norm for the frequency-response error.

There are several methods which produce

![]() instead of

instead of

![]() directly. A fast spectral factorization technique is

useful in conjunction with methods of this category [428].

Roughly speaking, a size

directly. A fast spectral factorization technique is

useful in conjunction with methods of this category [428].

Roughly speaking, a size

![]() polynomial factorization is replaced

by an FFT and a size

polynomial factorization is replaced

by an FFT and a size

![]() system of linear equations.

system of linear equations.

Digital Differentiator Design

We saw the ideal digital differentiator frequency response in

Fig.8.1, where it was noted that the discontinuity in

the response at

![]() made an ideal design unrealizable

(infinite order). Fortunately, such a design is not even needed in

practice, since there is invariably a guard band between the highest

supported frequency

made an ideal design unrealizable

(infinite order). Fortunately, such a design is not even needed in

practice, since there is invariably a guard band between the highest

supported frequency

![]() and half the sampling rate

and half the sampling rate ![]() .

.

![\includegraphics[width=\twidth]{eps/iirdiff-mag-phs-N0-M10}](http://www.dsprelated.com/josimages_new/pasp/img1861.png) |

Figure 8.2 illustrates a more practical design specification for the digital differentiator as well as the performance of a tenth-order FIR fit using invfreqz (which minimizes equation error) in Octave.9.12 The weight function passed to invfreqz was 1 from 0 to 20 kHz, and zero from 20 kHz to half the sampling rate (24 kHz). Notice how, as a result, the amplitude response follows that of the ideal differentiator until 20 kHz, after which it rolls down to a gain of 0 at 24 kHz, as it must (see Fig.8.1). Higher order fits yield better results. Using poles can further improve the results, but the filter should be checked for stability since invfreqz designs filters in the frequency domain and does not enforce stability.9.13

Fitting Filters to Measured Amplitude Responses

The preceding filter-design example digitized an ideal differentiator, which is an example of converting an LTI lumped modeling element into a digital filter while maximally preserving its frequency response over the audio band. Another situation that commonly arises is the need for a digital filter that matches a measured frequency response over the audio band.

Measured Amplitude Response

Figure 8.3 shows a plot of simulated amplitude-response measurements at 10 frequencies equally spread out between 100 Hz and 3 kHz on a log frequency scale. The ``measurements'' are indicated by circles. Each circle plots, for example, the output amplitude divided by the input amplitude for a sinusoidal input signal at that frequency [449]. These ten data points are then extended to dc and half the sampling rate, interpolated, and resampled to a uniform frequency grid (solid line in Fig.8.3), as needed for FFT processing. The details of these computations are listed in Fig.8.4. We will fit a four-pole, one-zero, digital-filter frequency-response to these data.9.14

![\includegraphics[width=\twidth]{eps/tmps2-G}](http://www.dsprelated.com/josimages_new/pasp/img1862.png) |

NZ = 1; % number of ZEROS in the filter to be designed NP = 4; % number of POLES in the filter to be designed NG = 10; % number of gain measurements fmin = 100; % lowest measurement frequency (Hz) fmax = 3000; % highest measurement frequency (Hz) fs = 10000; % discrete-time sampling rate Nfft = 512; % FFT size to use df = (fmax/fmin)^(1/(NG-1)); % uniform log-freq spacing f = fmin * df .^ (0:NG-1); % measurement frequency axis % Gain measurements (synthetic example = triangular amp response): Gdb = 10*[1:NG/2,NG/2:-1:1]/(NG/2); % between 0 and 10 dB gain % Must decide on a dc value. % Either use what is known to be true or pick something "maximally % smooth". Here we do a simple linear extrapolation: dc_amp = Gdb(1) - f(1)*(Gdb(2)-Gdb(1))/(f(2)-f(1)); % Must also decide on a value at half the sampling rate. % Use either a realistic estimate or something "maximally smooth". % Here we do a simple linear extrapolation. While zeroing it % is appealing, we do not want any zeros on the unit circle here. Gdb_last_slope = (Gdb(NG) - Gdb(NG-1)) / (f(NG) - f(NG-1)); nyq_amp = Gdb(NG) + Gdb_last_slope * (fs/2 - f(NG)); Gdbe = [dc_amp, Gdb, nyq_amp]; fe = [0,f,fs/2]; NGe = NG+2; % Resample to a uniform frequency grid, as required by ifft. % We do this by fitting cubic splines evaluated on the fft grid: Gdbei = spline(fe,Gdbe); % say `help spline' fk = fs*[0:Nfft/2]/Nfft; % fft frequency grid (nonneg freqs) Gdbfk = ppval(Gdbei,fk); % Uniformly resampled amp-resp figure(1); semilogx(fk(2:end-1),Gdbfk(2:end-1),'-k'); grid('on'); axis([fmin/2 fmax*2 -3 11]); hold('on'); semilogx(f,Gdb,'ok'); xlabel('Frequency (Hz)'); ylabel('Magnitude (dB)'); title(['Measured and Extrapolated/Interpolated/Resampled ',... 'Amplitude Response']); |

Desired Impulse Response

It is good to check that the desired impulse response is not overly

aliased in the time domain. The impulse-response for this example is

plotted in Fig.8.5. We see that it appears quite short

compared with the inverse FFT used to compute it. The script in

Fig.8.6 gives the details of this computation, and also

prints out a measure of ``time-limitedness'' defined as the ![]() norm of the outermost 20% of the impulse response divided by its

total

norm of the outermost 20% of the impulse response divided by its

total ![]() norm--this measure was reported to be

norm--this measure was reported to be ![]() % for this

example.

% for this

example.

![\includegraphics[width=\twidth]{eps/tmps2-ir}](http://www.dsprelated.com/josimages_new/pasp/img1864.png) |

Note also that the desired impulse response is noncausal. In fact, it is zero phase [449]. This is of course expected because the desired frequency response was real (and nonnegative).

Ns = length(Gdbfk); if Ns~=Nfft/2+1, error("confusion"); end

Sdb = [Gdbfk,Gdbfk(Ns-1:-1:2)]; % install negative-frequencies

S = 10 .^ (Sdb/20); % convert to linear magnitude

s = ifft(S); % desired impulse response

s = real(s); % any imaginary part is quantization noise

tlerr = 100*norm(s(round(0.9*Ns:1.1*Ns)))/norm(s);

disp(sprintf(['Time-limitedness check: Outer 20%% of impulse ' ...

'response is %0.2f %% of total rms'],tlerr));

% = 0.02 percent

if tlerr>1.0 % arbitrarily set 1% as the upper limit allowed

error('Increase Nfft and/or smooth Sdb');

end

figure(2);

plot(s,'-k'); grid('on'); title('Impulse Response');

xlabel('Time (samples)'); ylabel('Amplitude');

|

Converting the Desired Amplitude Response to Minimum Phase

Phase-sensitive filter-design methods such as the equation-error method implemented in invfreqz are normally constrained to produce filters with causal impulse responses.9.15 In cases such as this (phase-sensitive filter design when we don't care about phase--or don't have it), it is best to compute the minimum phase corresponding to the desired amplitude response [449].

As detailed in Fig.8.8, the minimum phase is constructed by the cepstral method [449].9.16

The four-pole, one-zero filter fit using invfreqz is shown in Fig.8.7.

![\includegraphics[width=\twidth]{eps/tmps2-Hh}](http://www.dsprelated.com/josimages_new/pasp/img1865.png) |

c = ifft(Sdb); % compute real cepstrum from log magnitude spectrum % Check aliasing of cepstrum (in theory there is always some): caliaserr = 100*norm(c(round(Ns*0.9:Ns*1.1)))/norm(c); disp(sprintf(['Cepstral time-aliasing check: Outer 20%% of ' ... 'cepstrum holds %0.2f %% of total rms'],caliaserr)); % = 0.09 percent if caliaserr>1.0 % arbitrary limit error('Increase Nfft and/or smooth Sdb to shorten cepstrum'); end % Fold cepstrum to reflect non-min-phase zeros inside unit circle: % If complex: % cf=[c(1),c(2:Ns-1)+conj(c(Nfft:-1:Ns+1)),c(Ns),zeros(1,Nfft-Ns)]; cf = [c(1), c(2:Ns-1)+c(Nfft:-1:Ns+1), c(Ns), zeros(1,Nfft-Ns)]; Cf = fft(cf); % = dB_magnitude + j * minimum_phase Smp = 10 .^ (Cf/20); % minimum-phase spectrum Smpp = Smp(1:Ns); % nonnegative-frequency portion wt = 1 ./ (fk+1); % typical weight fn for audio wk = 2*pi*fk/fs; [B,A] = invfreqz(Smpp,wk,NZ,NP,wt); Hh = freqz(B,A,Ns); figure(3); plot(fk,db([Smpp(:),Hh(:)])); grid('on'); xlabel('Frequency (Hz)'); ylabel('Magnitude (dB)'); title('Magnitude Frequency Response'); % legend('Desired','Filter'); |

Further Reading on Digital Filter Design

This section provided only a ``surface scratch'' into the large topic of digital filter design based on an arbitrary frequency response. The main goal here was to provide a high-level orientation and to underscore the high value of such an approach for encapsulating linear, time-invariant subsystems in a computationally efficient yet accurate form. Applied examples will appear in later chapters. We close this section with some pointers for further reading in the area of digital filter design.

Some good books on digital filter design in general include [343,362,289]. Also take a look at the various references in the help/type info for Matlab/Octave functions pertaining to filter design. Methods for FIR filter design (used in conjunction with FFT convolution) are discussed in Book IV [456], and the equation-error method for IIR filter design was introduced in Book II [449]. See [281,282] for related techniques applied to guitar modeling. See [454] for examples of using matlab functions invfreqz and invfreqs to fit filters to measured frequency-response data (specifically the wah pedal design example). Other filter-design tools can be found in the same website area.

The overview of methods in §8.6.2 above is elaborated in [428], including further method details, application to violin modeling, and literature pointers regarding the methods addressed. Some of this material was included in [449, Appendix I].

In Octave or Matlab, say lookfor filter to obtain a list of filter-related functions. Matlab has a dedicated filter-design toolbox (say doc filterdesign in Matlab). In many matlab functions (both Octave and Matlab), there are literature citations in the source code. For example, type invfreqz in Octave provides a URL to a Web page (from [449]) describing the FFT method for equation-error filter design.

Commuted Synthesis

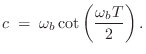

In acoustic stringed musical instruments such as guitars and pianos, the strings couple via a ``bridge'' to some resonating acoustic structure (typically made of wood) that is required for efficient transduction of string vibration to acoustic propagation in the surrounding air. The resonator also imposes its own characteristic frequency response on the radiated sound. Spectral characteristics of the string excitation, the string resonances, and body/soundboard/enclosure resonator, are thus combined multiplicatively in the radiated sound, as depicted in Fig. 8.9.

The idea of commuted synthesis is that, because the string and body are close to linear and time-invariant, we may commute the string and resonator, as shown in Fig. 8.10.

The excitation can now be convolved with the resonator impulse response to provide a single, aggregate, excitation table, as depicted in Fig. 8.11. This is the basic idea behind commuted synthesis, and it greatly reduces the complexity of stringed instrument implementations, since the body filter is replaced by an inexpensive lookup table [439,230]. These simplifications are presently important in single-processor polyphonic synthesizers such as multimedia DSP chips.

![\includegraphics[scale=0.9]{eps/fexcitation}](http://www.dsprelated.com/josimages_new/pasp/img1868.png) |

In the simplest case, the string is ``plucked'' using the (half-windowed) impulse response of the body.

An example of an excitation is the force applied by a pick or a finger at

some point, or set of points, along the string. The input force per sample

at each point divided by ![]() gives the velocity to inject additively at

that point in both traveling-wave directions. (The factor of

gives the velocity to inject additively at

that point in both traveling-wave directions. (The factor of ![]() comes

from splitting the injected velocity into two traveling-wave components,

and from the fact that two string end-points are being driven.) Equal

injection in the left- and right-going directions corresponds to an

excitation force which is stationary with respect to the string.

comes

from splitting the injected velocity into two traveling-wave components,

and from the fact that two string end-points are being driven.) Equal

injection in the left- and right-going directions corresponds to an

excitation force which is stationary with respect to the string.

In a practical instrument, the ``resonator'' is determined by the choice of output signal in the physical scenario, and it generally includes filtering downstream of the body itself, as shown in Fig. 8.12. A typical example for the guitar or violin would be to choose the output signal at a point a few feet away from the top plate of the body. In practice, such a signal can be measured using a microphone held at the desired output point and recording the response at that point to the striking of the bridge with a force hammer. It is useful to record simultaneously the output of an accelerometer mounted on the bridge in order to also obtain experimentally the driving-point impedance at the bridge. In general, it is desirable to choose the output close to the instrument so as to keep the resonator response as short as possible. The resonator components need to be linear and time invariant, so they will be commutative with the string and combinable with the string excitation signal via convolution.

The string should also be linear and time invariant in order to be able to commute it with the generalized resonator. However, the string is actually the least linear element of most stringed musical instruments, with the main effect of nonlinearity being often a slight increase of the fundamental vibration frequency with amplitude. A secondary effect is to introduce coupling between the two polarizations of vibration along the length of the string. In practice, however, the string can be considered sufficiently close to linear to permit commuting with the body. The string is also time varying in the presence of vibrato, but this too can be neglected in practice. While commuting a live string and resonator may not be identical mathematically, the sound is substantially the same.

There are various options when combining the excitation and resonator into an aggregate excitation, as shown in Fig. 8.11. For example, a wave-table can be prepared which contains the convolution of a particular point excitation with a particular choice of resonator. Perhaps the simplest choice of excitation is the impulse signal. Physically, this would be natural when the wave variables in the string are taken to be acceleration waves for a plucked string; in this case, an ideal pluck gives rise to an impulse of acceleration input to the left and right in the string at the pluck point. If loss of perceived pick position is unimportant, the impulse injection need only be in a single direction. (The comb filtering which gives rise to the pick-position illusion can be restored by injecting a second, negated impulse at a delay equal to the travel time to and from the bridge.) In this simple case of a single impulse to pluck the string, the aggregate excitation is simply the impulse response of the resonator. Many excitation and resonator variations can be simulated using a collection of aggregate excitation tables. It is useful to provide for interpolation of excitation tables so as to provide intermediate points along a parameter dimension. In fact, all the issues normally associated with sampling synthesis arise in the context of the string excitation table. A disadvantage of combining excitation and resonator is the loss of multiple output signals from the body simulation, but the timbral effects arising from the mixing together of multiple body outputs can be obtained via a mixing of corresponding excitation tables.

If the aggregate excitation is too long, it may be shortened by a

variety of techniques. It is good to first convert the signal ![]() to

minimum phase so as to provide the maximum shortening consistent

with the original magnitude spectrum. Secondly,

to

minimum phase so as to provide the maximum shortening consistent

with the original magnitude spectrum. Secondly, ![]() can be windowed

using the right wing of any window function typically used in spectrum

analysis. An interesting choice is the exponential window, since it has

the interpretation of increasing the resonator damping in a uniform manner,

i.e., all the poles and zeros of the resonator are contracted radially in

the

can be windowed

using the right wing of any window function typically used in spectrum

analysis. An interesting choice is the exponential window, since it has

the interpretation of increasing the resonator damping in a uniform manner,

i.e., all the poles and zeros of the resonator are contracted radially in

the ![]() plane by the same factor.

plane by the same factor.

Body-Model Factoring

In commuted synthesis, it is often helpful to factor out the least-damped resonances in the body model for implementation in parametric form (e.g., as second-order filters, or ``biquads''). The advantages of this are manifold, including the following:

- The excitation table is shortened (now containing only the most damped modal components).

- The excitation table signal-to-quantization-noise ratio is improved.

- The most important resonances remain parametric, facilitating real-time control. In other words, the parametric resonances can be independently modulated to produce interesting effects.

- Multiple body outputs become available from the parametric filters.

- Resonators may be already available in a separate effects unit, making

them ``free.''

- A memory vs. computation trade-off is available for cost optimization.

Further Reading in Commuted Synthesis

Laurson et al. [278,277] have developed very high quality synthesis of classical guitar from an extended musical score using the commuted synthesis approach. A key factor in the quality of these results is the great attention to detail in the area of musical control when driving the model from a written score. In contrast to this approach, most of the sound examples in [471] were ``played'' in real time, using a MIDI guitar controller feeding the serial port of a NeXT Computer running SynthBuilder [353].9.17

Extracting Parametric Resonators from a Nonparametric Impulse Response

As mentioned in §8.7.1 above, a valuable way of shortening the excitation table in commuted synthesis is to factor the body resonator into its most-damped and least-damped modes. The most-damped modes are then commuted and combined with the excitation in impulse-response form. The least-damped modes can be left in parametric form as recursive digital filter sections.

Commuted synthesis is a technique in which the body resonator is commuted with the string model, as shown in Fig.8.10, in order to avoid having to implement a large body filter at all [439,232,229].9.18In commuted synthesis, the excitation (e.g., plucking force versus time) can be convolved with the resonator impulse response to provide a single aggregate excitation signal. This signal is short enough to store in a look-up table, and a note is played by simply summing it into the string.

Mode Extraction Techniques

The goal of resonator factoring is to identify and remove the least-damped resonant modes of the impulse response. In principle, this means ascertaining the precise resonance frequencies and bandwidths associated with each of the narrowest ``peaks'' in the resonator frequency response, and dividing them out via inverse filtering, so they can be implemented separately as resonators in series. If in addition the amplitude and phase of a resonance peak are accurately measurable in the complex frequency response, the mode can be removed by complex spectral subtraction (equivalent to subtracting the impulse-response of the resonant mode from the total impulse response); in this case, the parametric modes are implemented in a parallel bank as in [66]. However, in the parallel case, the residual impulse response is not readily commuted with the string.

In the inverse-filtering case, the factored resonator components are in cascade (series) so that the damped modes left behind may be commuted with the string and incorporated in the excitation table by convolving the residual impulse response with the desired string excitation signal. In the parallel case, the damped modes do not commute with the string since doing so would require somehow canceling them in the parallel filter sections. In principle, the string would have to be duplicated so that one instance can be driven by the residual signal with no body resonances at all, while the other is connected to the parallel resonator bank and driven only by the natural string excitation without any commuting of string and resonator. Since duplicating the string is unlikely to be cost-effective, the impulse response of the high-frequency modes can be commuted and convolved with the string excitation as in the series case to obtain qualitative results. The error in doing this is that the high-frequency modes are being multiplied by the parallel resonators rather than being added to them.

Various methods are available for estimating the mode parameters for inverse filtering:

- Amplitude response peak measurement

- Weighted digital filter design

- Linear prediction

- Sinusoidal modeling

- Late impulse-response analysis

Amplitude response peak measurement

The longest ringing modes are associated with the narrowest bandwidths. When they are important resonances in the frequency response, they also tend to be the tallest peaks in the frequency response magnitude. (If they are not the tallest near the beginning of the impulse response, they will be the tallest near the end.) Therefore, one effective technique for measuring the least-damped resonances is simply to find the precise location and width of the narrowest and tallest spectral peaks in the measured amplitude response of the resonator. The center-frequency and bandwidth of a narrow frequency-response peak determine two poles in the resonator to be factored out. Expressing a filter in terms of its poles and zeros is one type of ``parametric'' filter representation, as opposed to ``nonparametric'' representations such as the impulse response or frequency response. Prony's method [449,297,273] is one well known technique for estimating the frequencies and bandwidths of sums of exponentially decaying sinusoids (two-pole resonator impulse responses).

In the factoring example presented in §8.8.6, the frequency and bandwidth of the main Helmholtz air mode are measured manually using an interactive spectrum analysis tool. However, it is a simple matter to automate peak-finding in FFT magnitude data. (See, for example, the peak finders used in sinusoidal modeling, discussed a bit further in §8.8.1 below.)

Weighted digital filter design

Many methods for digital filter design support spectral weighting functions that can be used to focus in on the least-damped modes in the frequency response. One is the weighted equation-error method which is available in the matlab invfreqz() function (§8.6.4). Figure 8.13 illustrates use of it. For simplicity, only one frequency-response peak plus noise is shown in this synthetic example. First, the peak center-frequency is measured using a quadratically interpolating peak finder operating on the dB spectral magnitude. This is used to set the spectral weighting function. Next, invfreqz() is called to design a two-pole filter having a frequency response that approximates the measured data as closely as possible. The weighting function is also shown in Fig.8.13, renormalized to overlay on the scale of the plot. Finally, the amplitude response of the two-pole filter designed by the equation-error method is shown overlaid in the figure. Note that the agreement is quite good near the peak which is what matters most. The interpolated peak frequency measured initially in the nonparametric spectral magnitude data can be used to fine-tune the pole-angles of the designed filter, thus rendering the equation-error method a technique for measuring only the peak bandwidth in this case. There are of course many, many techniques in the signal processing literature for measuring spectral peaks.

Linear prediction

Another well known method for converting the least-damped modes into

parametric form is Linear Predictive Coding (LP) followed by polynomial

factorization to obtain resonator poles. LP is particularly good at

fitting spectral peaks due to the nature of the error criterion it minimizes

[428]. The poles closest to the unit circle in the ![]() plane can be chosen for the ``ringy'' part of the resonator. It is well

known that when using techniques like LP to model spectral peaks for

extraction, orders substantially higher than twice the number of spectral

peaks should be used. The extra degrees of freedom in the LP fit give more

poles for modeling spectral detail other than the peaks, allowing the poles

modeling the peaks to fit them with less distraction. On the other hand,

if the order chosen is too high, sometimes more than two poles will home

in on the same peak. In some cases, this may be appropriate since the

body resonances are not necessary resolvable so as to separate the peaks,

especially at high frequencies.

plane can be chosen for the ``ringy'' part of the resonator. It is well

known that when using techniques like LP to model spectral peaks for

extraction, orders substantially higher than twice the number of spectral

peaks should be used. The extra degrees of freedom in the LP fit give more

poles for modeling spectral detail other than the peaks, allowing the poles

modeling the peaks to fit them with less distraction. On the other hand,

if the order chosen is too high, sometimes more than two poles will home

in on the same peak. In some cases, this may be appropriate since the

body resonances are not necessary resolvable so as to separate the peaks,

especially at high frequencies.

Sinusoidal modeling

Another way to find the least-damped mode parameters is by means of an intermediate sinusoidal model of the body impulse response, or, more appropriately, the energy decay relief (EDR) computed from the body impulse response (see §3.2.2). Such sinusoidal models have been used to determine the string loop filter in digital waveguide strings models. In the case of string loop-filter estimation, the sinusoidal model is applied to the impulse response (or ``pluck'' response) of a vibrating string or acoustic tube. In the present application, it is ideally applied to the EDR of the body impulse response (or ``hammer-strike'' response).

Since sinusoidal modeling software (e.g. [424]) typically quadratically interpolates the peak frequencies, the resonance frequencies are generally quite accurately estimated provided the frame size is chosen large enough to span many cycles of the underlying resonance.

The sinusoidal amplitude envelopes yield a particularly robust measurement of resonance bandwidth. Theoretically, the modal decay should be exponential. Therefore, on a dB scale, the amplitude envelope should decay linearly. Linear regression can be used to fit a straight line to the measured log-amplitude envelope of the impulse response of each long-ringing mode. Note that even when amplitude modulation is present due to modal couplings, the ripples tend to average out in the regression and have little effect on the slope measurement. This robustness can be enhanced by starting and ending the linear regression on local maxima in the amplitude envelope. A method for estimating modal decay parameters in the presence of noise is given in [125,234,235].

Below is a section of matlab code which performs linear regression:

function [slope,offset] = fitline(x,y);

%FITLINE fit line 'y = slope * x + offset'

% to column vectors x and y.

phi = [x, ones(length(x),1)];

p = phi' * y;

r = phi' * phi;

t = r\p;

slope = t(1);

offset = t(2);

Late impulse-response analysis

All methods useable with inverse filtering can be modified based on the observation that late in the impulse response, the damped modes have died away, and the least-damped modes dominate. Therefore, by discarding initial impulse-response data, the problem in some sense becomes ``easier'' at the price of working closer to the noise floor. This technique is most appropriate in conjunction with the inverse filtering method for mode extraction (discussed below), since for subtraction, the modal impulse response must be extrapolated back to the beginning of the data record. However, methods used to compute the filter numerator in variations on Prony's method can be used to scale and phase-align a mode for subtraction [428,297].

One simple approximate technique based on looking only at the late impulse response is to take a zero-padded FFT of the latest Hanning-windowed data segment. The least-damped modes should give very clearly dominant peaks in the FFT magnitude data. As discussed above, the peak(s) can be interpolated to estimate the mode resonance frequency, and the bandwidth can be measured to determine the time-constant of decay. Alternatively, the time-constant of decay can be measured in the time domain by measuring the decay slope of the log amplitude envelope across the segment (this time using a rectangular window). Since the least-damped mode is assumed to be isolated in the late decay, it is easy to form a pitch-synchronous amplitude envelope.

Inverse Filtering

Whatever poles are chosen for the least-damped part, and however they are computed (provided they are stable), the damped part can be computed from the full impulse response and parametric part using inverse filtering, as illustrated in the computed examples above. The inverse filter is formed from zeros equal to the estimated resonant poles. When the inverse filter is applied to the full resonator impulse, a ``residual'' signal is formed which is defined as the impulse response of the leftover, more damped modes. The residual is in exactly the nonparametric form needed for commuting with the string and convolving with the string excitation signal, such as a ``pluck'' signal. Feeding the residual signal to the parametric resonator gives the original resonator impulse response to an extremely high degree of accuracy. The error is due only to numerical round-off error during the inverse and forward filtering computations. In particular, the least-damped resonances need not be accurately estimated for this to hold. When there is parametric estimation error, the least-damped components will fail to be completely removed from the residual signal; however, the residual signal through the parametric resonator will always give an exact reconstruction of the original body impulse response, to within roundoff error. This is similar to the well known feature of linear predictive coding that feeding the prediction error signal to the LP model always gives back the original signal [297].

The parametric resonator need not be restricted to all-pole filters, however, although all-pole filters (plus perhaps zeros set manually to the same angles but contracted radii) turn out to be very convenient and simple to work with. Many filter design techniques exist which can produce a parametric part having any prescribed number of poles and zeros, and weighting functions can be used to ``steer'' the methods toward the least-damped components of the impulse response. The equation-error method illustrated in Fig. 8.13 is an example of a method which can also compute zeros in the parametric part as well as poles. However, for inverse filtering to be an option, the zeros must be constrained to be minimum phase so that their inverses will be stable poles.

Empirical Notes on Inverse Filtering

In experiments factoring guitar body impulse responses, it was found that the largest benefit per section comes from pulling out the main Helmholtz air resonance. Doing just this shortens the impulse response (excitation table) by a very large factor, and because the remaining impulse response is noise-like, it can be truncated more aggressively without introducing artifacts.

It also appears that the bandwidth estimate is not very critical in this case. If it is too large, or if ``isolation zeros'' are not installed behind the poles, as shown in Figs. 8.18b and 8.21b, the inverse filtering serves partially as a preemphasis which tends to flatten the guitar body frequency response overall or cause it to rise with frequency. This has a good effect on the signal-to-quantization-noise ratio versus frequency. To maximize the worst-case signal-to-quantization-noise versus frequency, the residual spectrum should be flat since the quantization noise spectrum is normally close to flat. A preemphasis filter for flattening the overall spectrum is commonly used in speech analysis [363,297]. A better preemphasis in this context is an inverse equal-loudness preemphasis, taking the inverse of an equal-loudness contour near the threshold of hearing in the Fletcher-Munson curves [475]. This corresponds to psychoacoustic ``noise shaping'' so that the quantization noise floor is perceptually uniform, and decreasing playback volume until it falls below the threshold of hearing results in all of the noise disappearing across the entire spectrum at the same volume.9.19

Since in some fixed-point implementations, narrow bandwidths may be

difficult to achieve, good results are obtained by simply setting the

bandwidth of the single resonator to any minimum robust value. As a

result, there may still be some main-air-mode response in the residual

signal, but it is typically very small, and early termination of it using a

half-window for table shortening is much less audible than if the original

impulse response were similarly half-windowed. The net effect on the

instrument is to introduce artificial damping the main air mode in

the guitar body. However, since this mode rings so much longer than the

rest of the modes in the guitar body, shortening it does not appear to be

detrimental to the overall quality of the instrument. In general, it is

not desirable for isolated modes to ring longer than all others. Why would

a classical guitarist want an audible ``ringing'' of the guitar body near

![]() Hz?

Hz?

In computing figures 8.16 and

Fig. 8.16b, the estimated ![]() of the main

Helmholtz air mode was only

of the main

Helmholtz air mode was only ![]() . As a result, it is still weakly present

in the inverse filter output (residual) spectrum

Fig. 8.16b.

. As a result, it is still weakly present

in the inverse filter output (residual) spectrum

Fig. 8.16b.

Matlab Code for Inverse Filtering

Below is the matlab source code used to extract the main Helmholtz air mode from the guitar body impulse response in Figures 8.14 through 8.17:

freq = 104.98; % estimated peak frequency in Hz

bw = 10; % peak bandwidth estimate in Hz

R = exp( - pi * bw / fs); % pole radius

z = R * exp(j * 2 * pi * freq / fs); % pole itself

B = [1, -(z + conj(z)), z * conj(z)] % numerator

r = 0.9; % zero/pole factor (notch isolation)

A = B .* (r .^ [0 : length(B)-1]); % denominator

residual = filter(B,A,bodyIR); % apply inverse filter

Sinusoidal Modeling of Mode Decays

When the amplitude envelope, frequency, phase, and onset time are all accurately estimated (for all time), it is possible to subtract the synthesized modal impulse response from the measured impulse response. (This contrasts with purely spectral modal parameters which are amplitude, frequency, bandwidth, and phase.) This method of ``sinusoidal track removal'' is used in sines-plus-noise spectral modeling. (See [424] for further details and supporting C software). In this approach, the resonant mode is subtracted out rather than divided out of the frequency response.