Virtual Musical Instruments

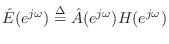

This chapter discusses aspects of synthesis models for specific musical instruments such as guitars, pianos, woodwinds, bowed strings, and brasses. Initially we look at model components for electric guitars, followed by some special considerations for acoustic guitars. Next we focus on basic models for string excitation, first by a mass (a simplified piano hammer), then by a damped spring (plectrum model). Piano modeling is then considered, using commuted synthesis for the acoustic resonators and a parametric signal-model for the force-pulse of the hammer. Finally, elementary synthesis of single-reed woodwinds, such as the clarinet, and bowed-string instruments, such as the violin, are summarized, and some pointers are given regarding other wind and percussion instruments.

Electric Guitars

For electric guitars, which are mainly about the vibrating string and subsequent effects processing, we pick up where §6.7 left off, and work our way up to practical complexity step by step. We begin with practical enhancements for the waveguide string model that are useful for all kinds of guitar models.

Length Three FIR Loop Filter

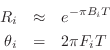

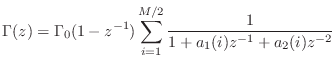

The simplest nondegenerate example of the loop filters of §6.8

is the three-tap FIR case (

![]() ). The symmetry constraint

leaves two degrees of freedom in the frequency response:10.1

). The symmetry constraint

leaves two degrees of freedom in the frequency response:10.1

Length  FIR Loop Filter Controlled by ``Brightness'' and ``Sustain''

FIR Loop Filter Controlled by ``Brightness'' and ``Sustain''

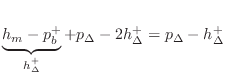

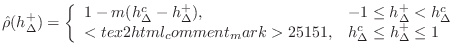

Another convenient parametrization of the second-order symmetric FIR

case is when the dc normalization is relaxed so that two degrees of

freedom are retained. It is then convenient to control them

as brightness ![]() and sustain

and sustain ![]() according to the

formulas

according to the

formulas

where

One-Zero Loop Filter

If we relax the constraint that

![]() be odd, then the simplest case

becomes the one-zero digital filter:

be odd, then the simplest case

becomes the one-zero digital filter:

See [454] for related discussion from a software implementation perspective.

The Karplus-Strong Algorithm

The simulation diagram for the ideal string with the simplest frequency-dependent loss filter is shown in Fig. 9.1. Readers of the computer music literature will recognize this as the structure of the Karplus-Strong algorithm [236,207,489].

![\includegraphics[width=\twidth]{eps/fkarplusstrong}](http://www.dsprelated.com/josimages_new/pasp/img1962.png) |

The Karplus-Strong algorithm, per se, is obtained when the

delay-line initial conditions used to ``pluck'' the string consist of

random numbers, or ``white noise.'' We know the initial shape

of the string is obtained by adding the upper and lower delay

lines of Fig. 6.11, i.e.,

![]() . It is shown in §C.7.4 that the initial

velocity distribution along the string is determined by the

difference between the upper and lower delay lines. Thus, in

the Karplus-Strong algorithm, the string is ``plucked'' by a

random initial displacement and initial velocity distribution.

This is a very energetic excitation, and usually in practice the white

noise is lowpass filtered; the lowpass cut-off frequency gives an

effective dynamic level control since natural stringed

instruments are typically brighter at louder dynamic levels

[428,207].

. It is shown in §C.7.4 that the initial

velocity distribution along the string is determined by the

difference between the upper and lower delay lines. Thus, in

the Karplus-Strong algorithm, the string is ``plucked'' by a

random initial displacement and initial velocity distribution.

This is a very energetic excitation, and usually in practice the white

noise is lowpass filtered; the lowpass cut-off frequency gives an

effective dynamic level control since natural stringed

instruments are typically brighter at louder dynamic levels

[428,207].

Karplus-Strong sound examples are available on the Web.

An implementation of the Karplus-Strong algorithm in the Faust programming language is described (and provided) in [454].

The Extended Karplus-Strong Algorithm

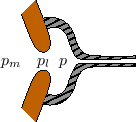

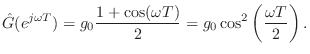

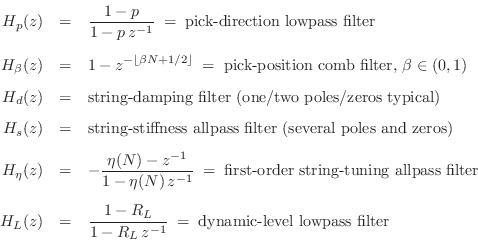

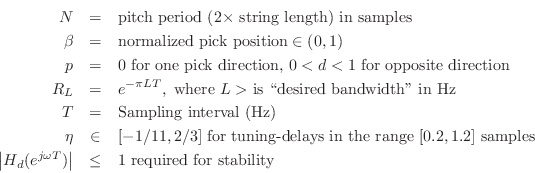

Figure 9.2 shows a block diagram of the Extended Karplus-Strong (EKS) algorithm [207].

The EKS adds the following features to the KS algorithm:

where

Note that while

![]() can be used in the tuning allpass, it

is better to offset it to

can be used in the tuning allpass, it

is better to offset it to

![]() to avoid delays

close to zero in the tuning allpass. (A zero delay is obtained by a

pole-zero cancellation on the unit circle.) First-order allpass

interpolation of delay lines was discussed in §4.1.2.

to avoid delays

close to zero in the tuning allpass. (A zero delay is obtained by a

pole-zero cancellation on the unit circle.) First-order allpass

interpolation of delay lines was discussed in §4.1.2.

A history of the Karplus-Strong algorithm and its extensions is given

in §A.8. EKS sound

examples

are also available on the Web. Techniques for designing the

string-damping filter ![]() and/or the string-stiffness allpass

filter

and/or the string-stiffness allpass

filter ![]() are summarized below in §6.11.

are summarized below in §6.11.

An implementation of the Extended Karplus-Strong (EKS) algorithm in the Faust programming language is described (and provided) in [454].

Nonlinear Distortion

In §6.13, nonlinear elements were introduced in the context of general digital waveguide synthesis. In this section, we discuss specifically virtual electric guitar distortion, and mention other instances of audible nonlinearity in stringed musical instruments.

As discussed in Chapter 6, typical vibrating strings in musical acoustics are well approximated as linear, time-invariant systems, there are special cases in which nonlinear behavior is desired.

Tension Modulation

In every freely vibrating string, the fundamental frequency declines

over time as the amplitude of vibration decays. This is due to

tension modulation, which is often audible at the beginning of

plucked-string tones, especially for low-tension strings. It happens

because higher-amplitude vibrations stretch the string to a

longer average length, raising the average string tension

![]() faster wave propagation

faster wave propagation

![]() higher fundamental

frequency.

higher fundamental

frequency.

The are several methods in the literature for simulating tension modulation in a digital waveguide string model [494,233,508,512,513,495,283], as well as in membrane models [298]. The methods can be classified into two categories, local and global.

Local tension-modulation methods modulate the speed of sound locally as a function of amplitude. For example, opposite delay cells in a force-wave digital waveguide string can be summed to obtain the instantaneous vertical force across that string sample, and the length of the adjacent propagation delay can be modulated using a first-order allpass filter. In principle the string slope reduces as the local tension increases. (Recall from Chapter 6 or Appendix C that force waves are minus the string tension times slope.)

Global tension-modulation methods [495,494] essentially modulate the string delay-line length as a function of the total energy in the string.

String Length Modulation

A number of stringed musical instruments have a ``nonlinear sound'' that comes from modulating the physical termination of the string (as opposed to its acoustic length in the case of tension modulation).

The Finnish Kantele [231,513] has a different effective string-length in the vertical and horizontal vibration planes due to a loose knot attaching the string to a metal bar. There is also nonlinear feeding of the second harmonic due to a nonrigid tuning peg.

Perhaps a better known example is the Indian sitar, in which a curved ``jawari'' (functioning as a nonlinear bridge) serves to shorten the string gradually as it displaces toward the bridge.

The Indian tambura also employs a thread perpendicular to the strings a short distance from the bridge, which serves to shorten the string whenever string displacement toward the bridge exceeds a certain distance.

Finally, the slap bass playing technique for bass guitars involves hitting the string hard enough to cause it to beat against the neck during vibration [263,366].

In all of these cases, the string length is physically modulated in some manner each period, at least when the amplitude is sufficiently large.

Hard Clipping

A widespread class of distortion used in electric guitars, is clipping of the guitar waveform. it is easy to add this effect to any string-simulation algorithm by passing the output signal through a nonlinear clipping function. For example, a hard clipper has the characteristic (in normalized form)

where

Soft Clipping

A soft clipper is similar to a hard clipper, but with the corners smoothed. A common choice of soft-clipper is the cubic nonlinearity, e.g. [489],

This particular soft-clipping characteristic is diagrammed in Fig.9.3. An analysis of its spectral characteristics, with some discussion of aliasing it may cause, was given in in §6.13. An input gain may be used to set the desired degree of distortion.

Enhancing Even Harmonics

A cubic nonlinearity, as well as any odd distortion law,10.2 generates only odd-numbered harmonics (like in a square wave). For best results, and in particular for tube distortion simulation [31,395], it has been argued that some amount of even-numbered harmonics should also be present. Breaking the odd symmetry in any way will add even-numbered harmonics to the output as well. One simple way to accomplish this is to add an offset to the input signal, obtaining

Another method for breaking the odd symmetry is to add some square-law nonlinearity to obtain

where

Software for Cubic Nonlinear Distortion

The function cubicnl in the Faust software distribution (file effect.lib) implements cubic nonlinearity distortion [454]. The Faust programming example cubic_distortion.dsp provides a real-time demo with adjustable parameters, including an offset for bringing in even harmonics. The free, open-source guitarix application (for Linux platforms) uses this type of distortion effect along with many other guitar effects.

In the Synthesis Tool Kit (STK) [91], the class Cubicnl.cpp implements a general cubic distortion law.

Amplifier Feedback

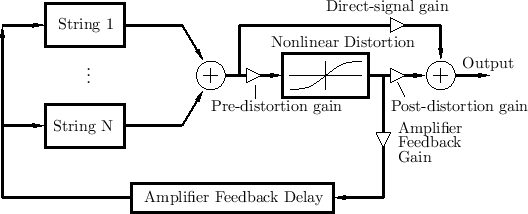

A more extreme effect used with distorted electric guitars is amplifier feedback. In this case, the amplified guitar waveforms couple back to the strings with some gain and delay, as depicted schematically in Fig.9.4 [489].

The Amplifier Feedback Delay in the figure can be adjusted to

emphasize certain partial overtones over others. If the loop gain,

controllable via the Amplifier Feedback Gain, is greater than 1 at any

frequency, a sustained ``feedback howl'' will be

produced. Note that in commercial devices, the Pre-distortion gain

and Post-distortion gain are frequency-dependent, i.e., they are

implemented as pre- and post-equalizers (typically only a few

bands, such as three). Another simple choice is an integrator

![]() for the pre-distortion gain, and a differentiator

for the pre-distortion gain, and a differentiator

![]() for the post-distortion gain.

for the post-distortion gain.

Faust software implementing electric-guitar amplifier feedback may be found in [454].

Cabinet Filtering

The distortion output signal is often further filtered by an amplifier cabinet filter, representing the enclosure, driver responses, etc. It is straightforward to measure the impulse response (or frequency response) of a speaker cabinet and fit it with a recursive digital filter design function such as invfreqz in matlab (§8.6.4). Individual speakers generally have one major resonance--the ``cone resonance''--which is determined by the mass, compliance, and damping of the driver subassembly. The enclosing cabinet introduces many more resonances in the audio range, generally tuned to even out the overall response as much as possible.

Faust software implementing an idealized speaker filter may be found in [454].

Duty-Cycle Modulation

For class A tube amplifier simulation, there should be level-dependent duty-cycle modulation as a function:10.3

- 50% at low amplitude levels (no duty-cycle modulation)

- 55-65% duty cycle at high levels

Vacuum Tube Modeling

The elementary nonlinearities discussed above are aimed at approximating the sound of amplifier distortion, such as often used by guitar players. Generally speaking, the distortion characteristics of vacuum-tube amplifiers are considered superior to those of solid-state amplifiers. A real-time numerically solved nonlinear differential algebraic model for class A single-ended guitar power amplification is presented in [84]. Real-time simulation of a tube guitar-amp (triode preamp, inverter, and push-pull amplifier) based on a precomputed approximate solution of the nonlinear differential equations is presented in [293]. Other references in this area include [338,228,339].

Acoustic Guitars

This section addresses some modeling considerations specific to acoustic guitars. Section 9.2.1 discusses special properties of acoustic guitar bridges, and §9.2.1 gives ways to simulate passive bridges, including a look at some laboratory measurements. For further reading in this area, see, e.g., [281,128].

Bridge Modeling

In §6.3 we analyzed the effect of rigid string terminations on traveling waves. We found that waves derived by time-derivatives of displacement (displacement, velocity, acceleration, and so on) reflect with a sign inversion, while waves defined in terms of the first spatial derivative of displacement (force, slope) reflect with no sign inversion. We now look at the more realistic case of yielding terminations for strings. This analysis can be considered a special case of the loaded string junction analyzed in §C.12.

Yielding string terminations (at the bridge) have a large effect on the sound produced by acoustic stringed instruments. Rigid terminations can be considered a reasonable model for the solid-body electric guitar in which maximum sustain is desired for played notes. Acoustic guitars, on the other hand, must transduce sound energy from the strings into the body of the instrument, and from there to the surrounding air. All audible sound energy comes from the string vibrational energy, thereby reducing the sustain (decay time) of each played note. Furthermore, because the bridge vibrates more easily in one direction than another, a kind of ``chorus effect'' is created from the detuning of the horizontal and vertical planes of string vibration (as discussed further in §6.12.1). A perfectly rigid bridge, in contrast, cannot transmit any sound into the body of the instrument, thereby requiring some other transducer, such as the magnetic pickups used in electric guitars, to extract sound for output.10.4

Passive String Terminations

When a traveling wave reflects from the bridge of a real stringed

instrument, the bridge moves, transmitting sound energy into the

instrument body. How far the bridge moves is determined by the

driving-point impedance of the bridge, denoted ![]() . The

driving point impedance is the ratio of Laplace transform of the force

on the bridge

. The

driving point impedance is the ratio of Laplace transform of the force

on the bridge ![]() to the velocity of motion that results

to the velocity of motion that results

![]() . That is,

. That is,

![]() .

.

For passive systems (i.e., for all unamplified acoustic musical

instruments), the driving-point impedance ![]() is positive

real (a property defined and discussed in §C.11.2). Being

positive real has strong implications on the nature of

is positive

real (a property defined and discussed in §C.11.2). Being

positive real has strong implications on the nature of ![]() . In

particular, the phase of

. In

particular, the phase of

![]() cannot exceed plus or minus

cannot exceed plus or minus

![]() degrees at any frequency, and in the lossless case, all poles and

zeros must interlace along the

degrees at any frequency, and in the lossless case, all poles and

zeros must interlace along the ![]() axis. Another

implication is that the reflectance of a passive bridge, as

seen by traveling waves on the string, is a so-called Schur

function (defined and discussed in §C.11); a Schur

reflectance is a stable filter having gain not exceeding 1 at any

frequency. In summary, a guitar bridge is passive if and only if its

driving-point impedance is positive real and (equivalently) its

reflectance is Schur. See §C.11 for a fuller discussion of

this point.

axis. Another

implication is that the reflectance of a passive bridge, as

seen by traveling waves on the string, is a so-called Schur

function (defined and discussed in §C.11); a Schur

reflectance is a stable filter having gain not exceeding 1 at any

frequency. In summary, a guitar bridge is passive if and only if its

driving-point impedance is positive real and (equivalently) its

reflectance is Schur. See §C.11 for a fuller discussion of

this point.

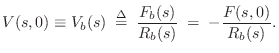

At ![]() , the force on the bridge is given by (§C.7.2)

, the force on the bridge is given by (§C.7.2)

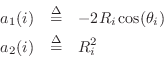

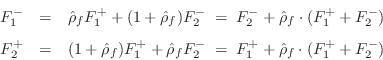

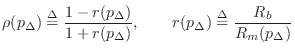

A Terminating Resonator

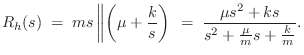

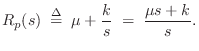

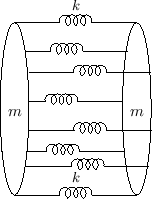

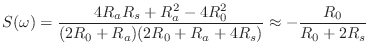

Suppose a guitar bridge couples an ideal vibrating string to a single resonance, as depicted schematically in Fig.9.5. This is often an accurate model of an acoustic bridge impedance in a narrow frequency range, especially at low frequencies where the resonances are well separated. Then, as developed in Chapter 7, the driving-point impedance seen by the string at the bridge is

![\includegraphics[width=\twidth]{eps/f_yielding_term}](http://www.dsprelated.com/josimages_new/pasp/img1992.png) |

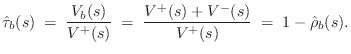

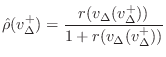

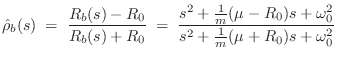

Bridge Reflectance

The bridge reflectance is needed as part of the loop filter in a digital waveguide model (Chapter 6).

As derived in §C.11.1, the force-wave reflectance of ![]() seen on the string is

seen on the string is

where

Bridge Transmittance

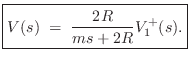

The bridge transmittance is the filter needed for the signal path from the vibrating string to the resonant acoustic body.

Since the bridge velocity equals the string endpoint velocity (a ``series'' connection), the velocity transmittance is simply

If the bridge is rigid, then its motion becomes a velocity input to the acoustic resonator. In principle, there are three such velocity inputs for each point along the bridge. However, it is typical in stringed instrument models to consider only the vertical transverse velocity on the string as significant, which results in one (vertical) driving velocity at the base of the bridge. In violin models (§9.6), the use of a ``sound post'' on one side of the bridge and ``bass bar'' on the other strongly suggests supporting a rocking motion along the bridge.

Digitizing Bridge Reflectance

Converting continuous-time transfer functions such as

![]() and

and

![]() to the digital domain is analogous to converting an analog

electrical filter to a corresponding digital filter--a problem which

has been well studied [343]. For this task, the

bilinear transform (§7.3.2) is a good choice. In

addition to preserving order and being free of aliasing, the bilinear

transform preserves the positive-real property of passive impedances

(§C.11.2).

to the digital domain is analogous to converting an analog

electrical filter to a corresponding digital filter--a problem which

has been well studied [343]. For this task, the

bilinear transform (§7.3.2) is a good choice. In

addition to preserving order and being free of aliasing, the bilinear

transform preserves the positive-real property of passive impedances

(§C.11.2).

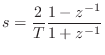

Digitizing

![]() via the bilinear transform (§7.3.2)

transform gives

via the bilinear transform (§7.3.2)

transform gives

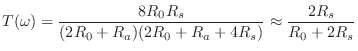

A Two-Resonance Guitar Bridge

Now let's consider a two-resonance guitar bridge, as shown in Fig. 9.6.

![\includegraphics[width=\twidth]{eps/lguitarsynth2simp2}](http://www.dsprelated.com/josimages_new/pasp/img2001.png) |

Like all mechanical systems that don't ``slide away'' in response to a

constant applied input force, the bridge must ``look like a spring''

at zero frequency. Similarly, it is typical for systems to ``look

like a mass'' at very high frequencies, because the driving-point

typically has mass (unless the driver is spring-coupled by what seems

to be massless spring). This implies the driving point admittance

should have a zero at dc and a pole at infinity. If we neglect

losses, as frequency increases up from zero, the first thing we

encounter in the admittance is a pole (a ``resonance'' frequency at

which energy is readily accepted by the bridge from the strings). As

we pass the admittance peak going up in frequency, the phase switches

around from being near ![]() (``spring like'') to being closer to

(``spring like'') to being closer to

![]() (``mass like''). (Recall the graphical method for

calculating the phase response of a linear system

[449].) Below the first resonance, we may say that the system

is stiffness controlled (admittance phase

(``mass like''). (Recall the graphical method for

calculating the phase response of a linear system

[449].) Below the first resonance, we may say that the system

is stiffness controlled (admittance phase

![]() ),

while above the first resonance, we say it is mass controlled

(admittance phase

),

while above the first resonance, we say it is mass controlled

(admittance phase

![]() ). This qualitative description is

typical of any lightly damped, linear, dynamic system. As we proceed

up the

). This qualitative description is

typical of any lightly damped, linear, dynamic system. As we proceed

up the ![]() axis, we'll next encounter a near-zero, or

``anti-resonance,'' above which the system again appears ``stiffness

controlled,'' or spring-like, and so on in alternation to infinity.

The strict alternation of poles and zeros near the

axis, we'll next encounter a near-zero, or

``anti-resonance,'' above which the system again appears ``stiffness

controlled,'' or spring-like, and so on in alternation to infinity.

The strict alternation of poles and zeros near the ![]() axis is

required by the positive real property of all passive

admittances (§C.11.2).

axis is

required by the positive real property of all passive

admittances (§C.11.2).

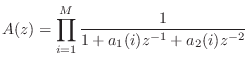

Measured Guitar-Bridge Admittance

![\includegraphics[width=\twidth]{eps/lguitardata}](http://www.dsprelated.com/josimages_new/pasp/img2004.png) |

A measured driving-point admittance [269]

for a real guitar bridge is shown in Fig. 9.7. Note

that at very low frequencies, the phase information does not appear to

be bounded by ![]() as it must be for the admittance to be

positive real (passive). This indicates a poor signal-to-noise ratio

in the measurements at very low frequencies. This can be verified by

computing and plotting the coherence function between the

bridge input and output signals using multiple physical

measurements.10.5

as it must be for the admittance to be

positive real (passive). This indicates a poor signal-to-noise ratio

in the measurements at very low frequencies. This can be verified by

computing and plotting the coherence function between the

bridge input and output signals using multiple physical

measurements.10.5

Figures 9.8 and 9.9 show overlays of the admittance magnitudes and phases, and also the input-output coherence, for three separate measurements. A coherence of 1 means that all the measurements are identical, while a coherence less than 1 indicates variation from measurement to measurement, implying a low signal-to-noise ratio. As can be seen in the figures, at frequencies for which the coherence is very close to 1, successive measurements are strongly in agreement, and the data are reliable. Where the coherence drops below 1, successive measurements disagree, and the measured admittance is not even positive real at very low frequencies.

![\includegraphics[width=\twidth]{eps/lguitarcoh}](http://www.dsprelated.com/josimages_new/pasp/img2006.png) |

![\includegraphics[width=\twidth]{eps/lguitarphs}](http://www.dsprelated.com/josimages_new/pasp/img2007.png) |

Building a Synthetic Guitar Bridge Admittance

To construct a synthetic guitar bridge model, we can first measure empirically the admittance of a real guitar bridge, or we can work from measurements published in the literature, as shown in Fig. 9.7. Each peak in the admittance curve corresponds to a resonance in the guitar body that is well coupled to the strings via the bridge. Whether or not the corresponding vibrational mode radiates efficiently depends on the geometry of the vibrational mode, and how well it couples to the surrounding air. Thus, a complete bridge model requires not only a synthetic bridge admittance which determines the reflectance ``seen'' on the string, but also a separate filter which models the transmittance from the bridge to the outside air; the transmittance filter normally contains the same poles as the reflectance filter, but the zeros are different. Moreover, keep in mind that each string sees a slightly different reflectance and transmittance because it is located at a slightly different point relative to the guitar top plate; this changes the coupling coefficients to the various resonating modes to some extent. (We normally ignore this for simplicity and use the same bridge filters for all the strings.)

Finally, also keep in mind that each string excites the bridge in three dimensions. The two most important are the horizontal and vertical planes of vibration, corresponding to the two planes of transverse vibration on the string. The vertical plane is normal to the guitar top plate, while the horizontal plane is parallel to the top plate. Longitudinal waves also excite the bridge, and they can be important as well, especially in the piano. Since longitudinal waves are much faster in strings than transverse waves, the corresponding overtones in the sound are normally inharmonically related to the main (nearly harmonic) overtones set up by the transverse string vibrations.

The frequency, complex amplitude, and width of each peak in the measured admittance of a guitar bridge can be used to determine the parameters of a second-order digital resonator in a parallel bank of such resonators being used to model the bridge impedance. This is a variation on modal synthesis [5,299]. However, for the bridge model to be passive when attached to a string, its transfer function must be positive real, as discused previously. Since strings are very nearly lossless, passivity of the bridge model is actually quite critical in practice. If the bridge model is even slightly active at some frequency, it can either make the whole guitar model unstable, or it might objectionably lengthen the decay time of certain string overtones.

We will describe two methods of constructing passive ``bridge filters'' from measured modal parameters. The first is guaranteed to be positive real but has some drawbacks. The second method gives better results, but it has to be checked for passivity and possibly modified to give a positive real admittance. Both methods illustrate more generally applicable signal processing methods.

Passive Reflectance Synthesis--Method 1

The first method is based on constructing a passive reflectance

![]() having the desired poles, and then converting to an

admittance via the fundamental relation

having the desired poles, and then converting to an

admittance via the fundamental relation

As we saw in §C.11.1, every passive impedance corresponds

to a passive reflectance which is a Schur function (stable and having gain

not exceeding ![]() around the unit circle). Since damping is light in a

guitar bridge impedance (otherwise the strings would not vibrate very long,

and sustain is a highly prized feature of real guitars), we can expect the

bridge reflectance to be close to an allpass transfer function

around the unit circle). Since damping is light in a

guitar bridge impedance (otherwise the strings would not vibrate very long,

and sustain is a highly prized feature of real guitars), we can expect the

bridge reflectance to be close to an allpass transfer function ![]() .

.

It is well known that every allpass transfer function can be expressed as

We will then construct a Schur function as

Recall that in every allpass filter with real coefficients, to every pole

at radius ![]() there corresponds a zero at radius

there corresponds a zero at radius ![]() .10.7

.10.7

Because the impedance is lightly damped, the poles and zeros of the

corresponding reflectance are close to the unit circle. This means that at

points along the unit circle between the poles, the poles and zeros tend to

cancel. It can be easily seen using the graphical method for computing the

phase of the frequency response that the pole-zero angles in the allpass

filter are very close to the resonance frequencies in the corresponding

passive impedance [429]. Furthermore, the distance of

the allpass poles to the unit circle controls the bandwidth of the

impedance peaks. Therefore, to a first approximation, we can treat the

allpass pole-angles as the same as those of the impedance pole angles, and

the pole radii in the allpass can be set to give the desired impedance peak

bandwidth. The zero-phase shaping filter ![]() gives the desired mode

height.

gives the desired mode

height.

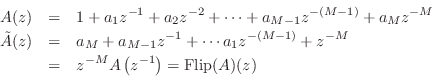

From the measured peak frequencies ![]() and bandwidths

and bandwidths ![]() in the guitar

bridge admittance, we may approximate the pole locations

in the guitar

bridge admittance, we may approximate the pole locations

![]() as

as

where ![]() is the sampling interval as usual. Next we construct the

allpass denominator as the product of elementary second-order sections:

is the sampling interval as usual. Next we construct the

allpass denominator as the product of elementary second-order sections:

Now that we've constructed a Schur function, a passive admittance can be computed using (9.2.1). While it is guaranteed to be positive real, the modal frequencies, bandwidths, and amplitudes are only indirectly controlled and therefore approximated. (Of course, this would provide a good initial guess for an iterative procedure which computes an optimal approximation directly.)

A simple example of a synthetic bridge constructed using this method

with ![]() and

and ![]() is shown in Fig.9.10.

is shown in Fig.9.10.

Passive Reflectance Synthesis--Method 2

The second method is based on constructing a partial fraction expansion of the admittance directly:

A simple example of a synthetic bridge constructed using this method with is shown in Fig.9.11.

Matlab for Passive Reflectance Synthesis Method 1

fs = 8192; % Sampling rate in Hz (small for display convenience) fc = 300; % Upper frequency to look at nfft = 8192;% FFT size (spectral grid density) nspec = nfft/2+1; nc = round(nfft*fc/fs); f = ([0:nc-1]/nfft)*fs; % Measured guitar body resonances F = [4.64 96.52 189.33 219.95]; % frequencies B = [ 10 10 10 10 ]; % bandwidths nsec = length(F); R = exp(-pi*B/fs); % Pole radii theta = 2*pi*F/fs; % Pole angles poles = R .* exp(j*theta); A1 = -2*R.*cos(theta); % 2nd-order section coeff A2 = R.*R; % 2nd-order section coeff denoms = [ones(size(A1)); A1; A2]' A = [1,zeros(1,2*nsec)]; for i=1:nsec, % polynomial multiplication = FIR filtering: A = filter(denoms(i,:),1,A); end;

Now A contains the (stable) denominator of the desired bridge admittance. We want now to construct a numerator which gives a positive-real result. We'll do this by first creating a passive reflectance and then computing the corresponding PR admittance.

g = 0.9; % Uniform loss factor B = g*fliplr(A); % Flip(A)/A = desired allpass Badm = A - B; Aadm = A + B; Badm = Badm/Aadm(1); % Renormalize Aadm = Aadm/Aadm(1); % Plots fr = freqz(Badm,Aadm,nfft,'whole'); nc = round(nfft*fc/fs); spec = fr(1:nc); f = ([0:nc-1]/nfft)*fs; dbmag = db(spec); phase = angle(spec)*180/pi; subplot(2,1,1); plot(f,dbmag); grid; title('Synthetic Guitar Bridge Admittance'); ylabel('Magnitude (dB)'); subplot(2,1,2); plot(f,phase); grid; ylabel('Phase (degrees)'); xlabel('Frequency (Hz)');

Matlab for Passive Reflectance Synthesis Method 2

... as in Method 1 for poles ... % Construct a resonator as a sum of arbitrary modes % with unit residues, adding a near-zero at dc. B = zeros(1,2*nsec+1); impulse = [1,zeros(1,2*nsec)]; for i=1:nsec, % filter() does polynomial division here: B = B + filter(A,denoms(i,:),impulse); end; % add a near-zero at dc B = filter([1 -0.995],1,B); ... as in Method 1 for display ...

Matrix Bridge Impedance

A passive matrix bridge admittance (e.g., taking all six strings of the guitar together) can be conveniently synthesized as a vector of positive-real scalar admittances multiplied by a positive definite matrix [25]. In [25], a dual-polarization guitar-string model is connected to a matrix reflectance computed from the matrix driving-point impedance at the bridge.

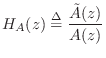

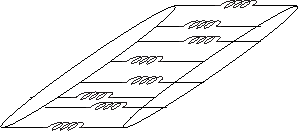

Body Modeling

As introduced in §8.7, the commuted synthesis technique involves commuting the LTI filter representing acoustic resonator (guitar body) with the string, so that the string is excited by the pluck response of the body in place of the plectrum directly. The techniques described in §8.8 can be used to build practical computational synthesis models of acoustic guitar bodies and the like [229].

String Excitation

In §2.4 and §6.10 we looked at the basic architecture of a digital waveguide string excited by some external disturbance. We now consider the specific cases of string excitation by a hammer (mass) and plectrum (spring).

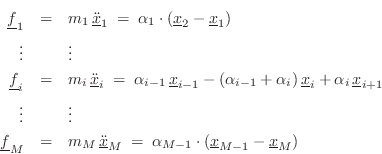

Ideal String Struck by a Mass

In §6.6, the ideal struck string was modeled as a simple initial velocity distribution along the string, corresponding to an instantaneous transfer of linear momentum from the striking hammer into the transverse motion of a string segment at time zero. (See Fig.6.10 for a diagram of the initial traveling velocity waves.) In that model, we neglected any effect of the striking hammer after time zero, as if it had bounced away at time 0 due to a so-called elastic collision. In this section, we consider the more realistic case of an inelastic collision, i.e., where the mass hits the string and remains in contact until something, such as a wave, or gravity, causes the mass and string to separate.

For simplicity, let the string length be infinity, and denote its wave

impedance by ![]() . Denote the colliding mass by

. Denote the colliding mass by ![]() and its speed

prior to collision by

and its speed

prior to collision by ![]() . It will turn out in this analysis that

we may approximate the size of the mass by zero (a so-called

point mass). Finally, we neglect the effects of gravity and

drag by the surrounding air. When the mass collides with the string,

our model must switch from two separate models (mass-in-flight and

ideal string), to that of two ideal strings joined by a mass

. It will turn out in this analysis that

we may approximate the size of the mass by zero (a so-called

point mass). Finally, we neglect the effects of gravity and

drag by the surrounding air. When the mass collides with the string,

our model must switch from two separate models (mass-in-flight and

ideal string), to that of two ideal strings joined by a mass ![]() at

at

![]() , as depicted in Fig.9.12. The ``force-velocity

port'' connections of the mass and two semi-infinite string endpoints

are formally in series because they all move together; that is,

the mass velocity equals the velocity of each of the two string

endpoints connected to the mass (see §7.2 for a fuller

discussion of impedances and their parallel/series connection).

, as depicted in Fig.9.12. The ``force-velocity

port'' connections of the mass and two semi-infinite string endpoints

are formally in series because they all move together; that is,

the mass velocity equals the velocity of each of the two string

endpoints connected to the mass (see §7.2 for a fuller

discussion of impedances and their parallel/series connection).

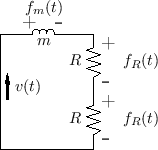

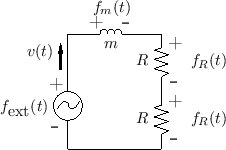

The equivalent circuit for the mass-string assembly after time zero is

shown in Fig.9.13. Note that the string wave impedance ![]() appears twice, once for each string segment on the left and right.

Also note that there is a single common velocity

appears twice, once for each string segment on the left and right.

Also note that there is a single common velocity ![]() for the two

string endpoints and mass. LTI circuit elements in series can be

arranged in any order.

for the two

string endpoints and mass. LTI circuit elements in series can be

arranged in any order.

|

From the equivalent circuit, it is easy to solve for the velocity ![]() .

Formally, this is accomplished by applying Kirchoff's Loop Rule, which

states that the sum of voltages (``forces'') around any series loop is zero:

.

Formally, this is accomplished by applying Kirchoff's Loop Rule, which

states that the sum of voltages (``forces'') around any series loop is zero:

All of the signs are `

Taking the Laplace transform10.9of Eq.![]() (9.8) yields, by linearity,

(9.8) yields, by linearity,

where

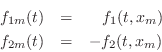

For the mass, we have

![$\displaystyle f_m(t) = m\,a(t)\;=\; m\,\frac{d}{dt} v(t) \quad\longleftrightarrow\quad

F_m(s) = m\left[s\,V(s) - v_0\right],

$](http://www.dsprelated.com/josimages_new/pasp/img2043.png)

Substituting these relations into Eq.![]() (9.9) yields

(9.9) yields

We see that the initial momentum

|

An advantage of the external-impulse formulation is that the system

has a zero initial state, so that an impedance description

(§7.1) is complete. In other words, the system can be

fully described as a series combination of the three impedances ![]() ,

,

![]() (on the left), and

(on the left), and ![]() (on the right), driven by an external

force-source

(on the right), driven by an external

force-source

![]() .

.

Solving Eq.![]() (9.10) for

(9.10) for ![]() yields

yields

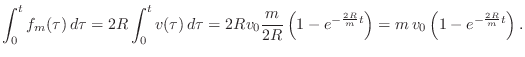

The displacement of the string at ![]() is given by the integral of

the velocity:

is given by the integral of

the velocity:

![$\displaystyle y(t,0) = \int_0^t v(\tau)\,d\tau = v_0\,\frac{m}{2R}\,\left[1-e^{-{\frac{2R}{m}t}}\right]

$](http://www.dsprelated.com/josimages_new/pasp/img2056.png)

The momentum of the mass before time zero is ![]() , and after time

zero it is

, and after time

zero it is

The force applied to the two string endpoints by the mass is given by

![]() . From Newton's Law,

. From Newton's Law,

![]() , we have that

momentum

, we have that

momentum ![]() , delivered by the mass to the string,

can be calculated as the time integral of applied force:

, delivered by the mass to the string,

can be calculated as the time integral of applied force:

In a real piano, the hammer, which strikes in an upward (grand) or sideways (upright) direction, falls away from the string a short time after collision, but it may remain in contact with the string for a substantial fraction of a period (see §9.4 on piano modeling).

Mass Termination Model

The previous discussion solved for the motion of an ideal mass striking an ideal string of infinite length. We now investigate the same model from the string's point of view. As before, we will be interested in a digital waveguide (sampled traveling-wave) model of the string, for efficiency's sake (Chapter 6), and we therefore will need to know what the mass ``looks like'' at the end of each string segment. For this we will find that the impedance description (§7.1) is especially convenient.

![\includegraphics[width=\twidth]{eps/massstringphynum}](http://www.dsprelated.com/josimages_new/pasp/img2065.png) |

Let's number the string segments to the left and right of the mass by

1 and 2, respectively, as shown in Fig.9.15. Then

Eq.![]() (9.8) above may be written

(9.8) above may be written

where

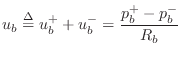

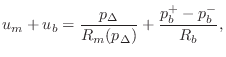

To derive the traveling-wave relations in a digital waveguide model,

we want to use the force-wave variables

![]() and

and

![]() that we defined for vibrating strings in

§6.1.5; i.e., we defined

that we defined for vibrating strings in

§6.1.5; i.e., we defined

![]() , where

, where ![]() is the string tension and

is the string tension and ![]() is the string

slope,

is the string

slope, ![]() .

.

![\includegraphics[width=0.5\twidth]{eps/stringslope}](http://www.dsprelated.com/josimages_new/pasp/img2073.png) |

As shown in Fig.9.16, a negative string slope pulls ``up''

to the right. Therefore, at the mass point we have

![]() , where

, where ![]() denotes the position of the

mass along the string. On the other hand, the figure also shows that

a negative string slope pulls ``down'' to the left, so that

denotes the position of the

mass along the string. On the other hand, the figure also shows that

a negative string slope pulls ``down'' to the left, so that

![]() . In summary, relating the forces we

have defined for the mass-string junction to the force-wave variables

in the string, we have

. In summary, relating the forces we

have defined for the mass-string junction to the force-wave variables

in the string, we have

where ![]() denotes the position of the mass along the string.

denotes the position of the mass along the string.

Thus, we can rewrite Eq.![]() (9.11) in terms of string wave variables as

(9.11) in terms of string wave variables as

or, substituting the definitions of these forces,

The inertial force of the mass is

The force relations can be checked individually. For string 1,

Now that we have expressed the string forces in terms of string

force-wave variables, we can derive digital waveguide models by

performing the traveling-wave decompositions

![]() and

and

![]() and using the Ohm's law relations

and using the Ohm's law relations

![]() and

and

![]() for

for ![]() (introduced above near

Eq.

(introduced above near

Eq.![]() (6.6)).

(6.6)).

Mass Reflectance from Either String

Let's first consider how the mass looks from the viewpoint of string

1, assuming string 2 is at rest. In this situation (no incoming wave

from string 2), string 2 will appear to string 1 as a simple resistor

(or dashpot) of ![]() Ohms in series with the mass impedance

Ohms in series with the mass impedance ![]() .

(This observation will be used as the basis of a rapid solution method

in §9.3.1 below.)

.

(This observation will be used as the basis of a rapid solution method

in §9.3.1 below.)

When a wave from string 1 hits the mass, it will cause the mass to move. This motion carries both string endpoints along with it. Therefore, both the reflected and transmitted waves include this mass motion. We can say that we see a ``dispersive transmitted wave'' on string 2, and a dispersive reflection back onto string 1. Our object in this section is to calculate the transmission and reflection filters corresponding to these transmitted and reflected waves.

By physical symmetry the velocity reflection and transmission

will be the same from string 1 as it is from string 2. We can say the

same about force waves, but we will be more careful because the sign

of the transverse force flips when the direction of travel is

reversed.10.12Thus, we expect a scattering junction of the form shown in

Fig.9.17 (recall the discussion of physically

interacting waveguide inputs in §2.4.3). This much invokes

the superposition principle (for simultaneous reflection and transmission),

and imposes the expected symmetry: equal reflection filters

![]() and

equal transmission filters

and

equal transmission filters

![]() (for either force or velocity waves).

(for either force or velocity waves).

![\includegraphics[width=\twidth]{eps/massstringdwmform1}](http://www.dsprelated.com/josimages_new/pasp/img2087.png) |

Let's begin with Eq.![]() (9.12) above, restated as follows:

(9.12) above, restated as follows:

The traveling-wave decompositions can be written out as

where a ``+'' superscript means ``right-going'' and a ``-'' superscript means ``left-going'' on either string.10.13

Let's define the mass position ![]() to be zero, so that Eq.

to be zero, so that Eq.![]() (9.14)

with the substitutions Eq.

(9.14)

with the substitutions Eq.![]() (9.15) becomes

(9.15) becomes

In the Laplace domain, dropping the common ``(s)'' arguments,

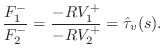

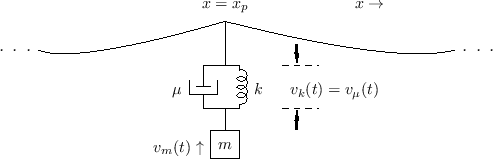

![\begin{eqnarray*}

F_m + F^{+}_1 + F^{-}_1 - F^{+}_2 -F^{-}_2 &=& 0\\ [10pt]

\Lon...

...row\quad

-msV + RV^{+}_1 - RV^{-}_1 - RV^{+}_2 + RV^{-}_2 &=& 0.

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img2101.png)

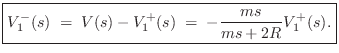

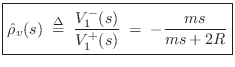

To compute the reflection coefficient of the mass seen on string 1, we

may set ![]() , which means

, which means

![]() , so that we have

, so that we have

From this, the reflected velocity is immediate:

It is always good to check that our answers make physical sense in

limiting cases. For this problem, easy cases to check are ![]() and

and

![]() . When the mass is

. When the mass is ![]() , the reflectance goes to zero (no

reflected wave at all). When the mass goes to infinity, the

reflectance approaches

, the reflectance goes to zero (no

reflected wave at all). When the mass goes to infinity, the

reflectance approaches

![]() , corresponding to a rigid

termination, which also makes sense.

, corresponding to a rigid

termination, which also makes sense.

The results of this section can be more quickly obtained as a special

case of the main result of §C.12, by choosing ![]() waveguides meeting at a load

impedance

waveguides meeting at a load

impedance ![]() . The next section gives another

fast-calculation method based on a standard formula.

. The next section gives another

fast-calculation method based on a standard formula.

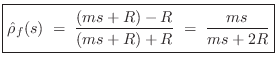

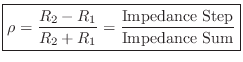

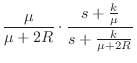

Simplified Impedance Analysis

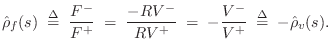

The above results are quickly derived from the general reflection-coefficient for force waves (or voltage waves, pressure waves, etc.):

where

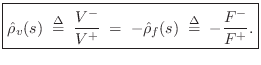

In the mass-string-collision problem, we can immediately write down the force reflectance of the mass as seen from either string:

Since, by the Ohm's-law relations,

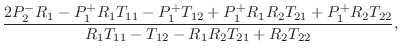

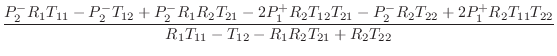

Mass Transmittance from String to String

Referring to Fig.9.15, the velocity transmittance from string 1 to string 2 may be defined as

We can now refine the picture of our scattering junction Fig.9.17 to obtain the form shown in Fig.9.18.

![\includegraphics[width=0.8\twidth]{eps/massstringdwmformvel}](http://www.dsprelated.com/josimages_new/pasp/img2139.png) |

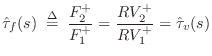

Force Wave Mass-String Model

The velocity transmittance is readily converted to a force transmittance using the Ohm's-law relations:

![\includegraphics[width=0.8\twidth]{eps/massstringdwmformforce}](http://www.dsprelated.com/josimages_new/pasp/img2142.png) |

Checking as before, we see that

![]() corresponds to

corresponds to

![]() , which means no force is transmitted through an

infinite mass, which is reasonable. As

, which means no force is transmitted through an

infinite mass, which is reasonable. As ![]() , the force

transmittance becomes 1 and the mass has no effect, as desired.

, the force

transmittance becomes 1 and the mass has no effect, as desired.

Summary of Mass-String Scattering Junction

In summary, we have characterized the mass on the string in terms of its reflectance and transmittance from either string. For force waves, we have outgoing waves given by

or

![$\displaystyle \left[\begin{array}{c} F^{+}_2 \\ [2pt] F^{-}_1 \end{array}\right...

...ay}\right] \left[\begin{array}{c} F^{+}_1 \\ [2pt] F^{-}_2 \end{array}\right]

$](http://www.dsprelated.com/josimages_new/pasp/img2144.png)

The one-filter form follows from the observation that

![]() appears in both computations, and therefore need only be implemented once:

appears in both computations, and therefore need only be implemented once:

![\begin{eqnarray*}

F^{+}&\isdef & \hat{\rho}_f\cdot(F^{+}_1+F^{-}_2)\\ [5pt]

F^{-}_1 &=& F^{-}_2 + F^{+}\\ [5pt]

F^{+}_2 &=& F^{+}_1 + F^{+}

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img2152.png)

This structure is diagrammed in Fig.9.20.

![\includegraphics[width=\twidth]{eps/massstringdwms}](http://www.dsprelated.com/josimages_new/pasp/img2153.png) |

Again, the above results follow immediately from the more general formulation of §C.12.

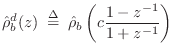

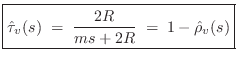

Digital Waveguide Mass-String Model

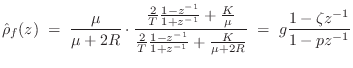

To obtain a force-wave digital waveguide model of the string-mass assembly after the mass has struck the string, it only remains to digitize the model of Fig.9.20. The delays are obviously to be implemented using digital delay lines. For the mass, we must digitize the force reflectance appearing in the one-filter model of Fig.9.20:

A common choice of digitization method is the bilinear transform (§7.3.2) because it preserves losslessness and does not alias. This will effectively yield a wave digital filter model for the mass in this context (see Appendix F for a tutorial on wave digital filters).

The bilinear transform is typically scaled as

![\begin{eqnarray*}

\hat{\rho}_d(z)

&=& \frac{1}{1+\frac{2R}{m}\frac{T}{2}\frac{1+z^{-1}}{1-z^{-1}}}\\ [5pt]

&=& g\frac{1-z^{-1}}{1-pz^{-1}}

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img2157.png)

where the gain coefficient ![]() and pole

and pole ![]() are given by

are given by

![\begin{eqnarray*}

g&\isdef &\frac{1}{1+\frac{RT}{m}}\;<\;1\\ [5pt]

p&\isdef &\frac{1-\frac{RT}{m}}{1+\frac{RT}{m}}\;<\;1.

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img2158.png)

Thus, the reflectance of the mass is a one-pole, one-zero filter. The

zero is exactly at dc, the real pole is close to dc, and the gain at

half the sampling rate is ![]() . We may recognize this as the classic

dc-blocking filter

[449]. Comparing with Eq.

. We may recognize this as the classic

dc-blocking filter

[449]. Comparing with Eq.![]() (9.18), we see that the behavior

at dc is correct, and that the behavior at infinite frequency

(

(9.18), we see that the behavior

at dc is correct, and that the behavior at infinite frequency

(

![]() ) is now the behavior at half the sampling rate

(

) is now the behavior at half the sampling rate

(

![]() ).

).

Physically, the mass reflectance is zero at dc because sufficiently slow waves can freely move a mass of any finite size. The reflectance is 1 at infinite frequency because there is no time for the mass to start moving before it is pushed in the opposite direction. In short, a mass behaves like a rigid termination at infinite frequency, and a free end (no termination) at zero frequency. The reflectance of a mass is therefore a ``dc blocker''.

The final digital waveguide model of the mass-string combination is shown in Fig.9.21.

Additional examples of lumped-element modeling, including masses, springs, dashpots, and their various interconnections, are discussed the Wave Digital Filters (WDF) appendix (Appendix F). A nice feature WDFs is that they employ traveling-wave input/output signals which are ideal for interfacing to digital waveguides. The main drawback is that the WDFs operate over a warped frequency axis (due to the bilinear transform), while digital delay lines have a normal (unwarped) frequency axis. On the plus side, WDFs cannot alias, while digital waveguides do alias in the frequency domain for signals that are not bandlimited to less than half the sampling rate. At low frequencies (or given sufficient oversampling), the WDF frequency warping is minimal, and in such cases, WDF ``lumped element models'' may be connected directly to digital waveguides, which are ``sampled-wave distributed parameter'' models.

Even when the bilinear-transform frequency-warping is severe, it is often well tolerated when the frequency response has only one ``important frequency'', such as a second-order resonator, lowpass, or highpass response. In other words, the bilinear transform can be scaled to map any single analog frequency to any desired corresponding digital frequency (see §7.3.2 for details), and the frequency-warped responses above and below the exactly mapped frequency may ``sound as good as'' the unwarped responses for musical purposes. If not, higher order filters can be used to model lumped elements (Chapter 7).

Displacement-Wave Simulation

As discussed in [121], displacement waves

are often preferred over force or velocity waves for guitar-string

simulations, because such strings often hit obstacles such as frets or

the neck. To obtain displacement from velocity at a given ![]() ,

we may time-integrate

velocity as above to produce displacement at any spatial sample along the

string where a collision might be possible. However, all these

integrators can be eliminated by simply going to a displacement-wave

simulation, as has been done in nearly all papers to date on plucking

models for digital waveguide strings.

,

we may time-integrate

velocity as above to produce displacement at any spatial sample along the

string where a collision might be possible. However, all these

integrators can be eliminated by simply going to a displacement-wave

simulation, as has been done in nearly all papers to date on plucking

models for digital waveguide strings.

To convert our force-wave simulation to a displacement-wave

simulation, we may first convert force to velocity using the Ohm's law

relations

![]() and

and

![]() and then

conceptually integrate all signals with respect to time (in advance of

the simulation).

and then

conceptually integrate all signals with respect to time (in advance of

the simulation).

![]() is the same on both sides of the finger-junction, which means we

can convert from force to velocity by simply negating all left-going

signals. (Conceptually, all signals are converted from force to

velocity by the Ohm's law relations and then divided by

is the same on both sides of the finger-junction, which means we

can convert from force to velocity by simply negating all left-going

signals. (Conceptually, all signals are converted from force to

velocity by the Ohm's law relations and then divided by ![]() , but the

common scaling by

, but the

common scaling by ![]() can be omitted (or postponed) unless signal

values are desired in particular physical units.) An

all-velocity-wave simulation can be converted to displacement waves

even more easily by simply changing

can be omitted (or postponed) unless signal

values are desired in particular physical units.) An

all-velocity-wave simulation can be converted to displacement waves

even more easily by simply changing ![]() to

to ![]() everywhere, because

velocity and displacement waves scatter identically. In more general

situations, we can go to the Laplace domain and replace each

occurrence of

everywhere, because

velocity and displacement waves scatter identically. In more general

situations, we can go to the Laplace domain and replace each

occurrence of ![]() by

by ![]() , each

, each ![]() by

by ![]() ,

divide all signals by

,

divide all signals by ![]() , push any leftover

, push any leftover ![]() around for maximum

simplification, perhaps absorbing it into a nearby filter. In an

all-velocity-wave simulation, each signal gets multiplied by

around for maximum

simplification, perhaps absorbing it into a nearby filter. In an

all-velocity-wave simulation, each signal gets multiplied by ![]() in

this procedure, which means it cancels out of all definable transfer

functions. All filters in the diagram (just

in

this procedure, which means it cancels out of all definable transfer

functions. All filters in the diagram (just

![]() in this

example) can be left alone because their inputs and outputs are still

force-valued in principle. (We expressed each force wave in terms of

velocity and wave impedance without changing the signal flow diagram,

which remains a force-wave simulation until minus signs, scalings, and

in this

example) can be left alone because their inputs and outputs are still

force-valued in principle. (We expressed each force wave in terms of

velocity and wave impedance without changing the signal flow diagram,

which remains a force-wave simulation until minus signs, scalings, and

![]() operators are moved around and combined.) Of course, one can

absorb scalings and sign reversals into the filter(s) to change the

physical input/output units as desired. Since we routinely assume

zero initial conditions in an impedance description, the integration

constants obtained by time-integrating velocities to get displacements

are all defined to be zero. Additional considerations regarding the

choice of displacement waves over velocity (or force) waves are given

in §E.3.3. In particular, their initial conditions can be very

different, and traveling-wave components tend not to be as well

behaved for displacement waves.

operators are moved around and combined.) Of course, one can

absorb scalings and sign reversals into the filter(s) to change the

physical input/output units as desired. Since we routinely assume

zero initial conditions in an impedance description, the integration

constants obtained by time-integrating velocities to get displacements

are all defined to be zero. Additional considerations regarding the

choice of displacement waves over velocity (or force) waves are given

in §E.3.3. In particular, their initial conditions can be very

different, and traveling-wave components tend not to be as well

behaved for displacement waves.

Piano Hammer Modeling

The previous section treated an ideal point-mass striking an ideal string. This can be considered a simplified piano-hammer model. The model can be improved by adding a damped spring to the point-mass, as shown in Fig.9.22 (cf. Fig.9.12).

|

The impedance of this plucking system, as seen by the string, is the

parallel combination of the mass impedance ![]() and the damped spring

impedance

and the damped spring

impedance ![]() . (The damper

. (The damper ![]() and spring

and spring ![]() are formally

in series--see §7.2, for a refresher on series versus

parallel connection.) Denoting

the driving-point impedance of the hammer at the string contact-point

by

are formally

in series--see §7.2, for a refresher on series versus

parallel connection.) Denoting

the driving-point impedance of the hammer at the string contact-point

by ![]() , we have

, we have

Thus, the scattering filters in the digital waveguide model are second order (biquads), while for the string struck by a mass (§9.3.1) we had first-order scattering filters. This is expected because we added another energy-storage element (a spring).

The impedance formulation of Eq.![]() (9.19) assumes all elements are

linear and time-invariant (LTI), but in practice one can normally

modulate element values as a function of time and/or state-variables

and obtain realistic results for low-order elements. For this we must

maintain filter-coefficient formulas that are explicit functions of

physical state and/or time. For best results, state variables should

be chosen so that any nonlinearities remain memoryless in the

digitization

[361,348,554,555].

(9.19) assumes all elements are

linear and time-invariant (LTI), but in practice one can normally

modulate element values as a function of time and/or state-variables

and obtain realistic results for low-order elements. For this we must

maintain filter-coefficient formulas that are explicit functions of

physical state and/or time. For best results, state variables should

be chosen so that any nonlinearities remain memoryless in the

digitization

[361,348,554,555].

Nonlinear Spring Model

In the musical acoustics literature, the piano hammer is classically

modeled as a nonlinear spring

[493,63,178,76,60,486,164].10.14Specifically, the piano-hammer damping in Fig.9.22 is

typically approximated by ![]() , and the spring

, and the spring ![]() is

nonlinear and memoryless according to a simple power

law:

is

nonlinear and memoryless according to a simple power

law:

The upward force applied to the string by the hammer is therefore

| (10.20) |

This force is balanced at all times by the downward string force (string tension times slope difference), exactly as analyzed in §9.3.1 above.

Including Hysteresis

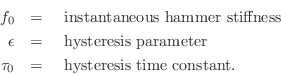

Since the compressed hammer-felt (wool) on real piano hammers shows significant hysteresis memory, an improved piano-hammer felt model is

where

Equation (9.21) is said to be a good approximation under normal playing conditions. A more complete hysteresis model is [487]

![$\displaystyle f_h(t) \eqsp f_0\left[x_k^p(t) - \frac{\epsilon}{\tau_0} \int_0^t x_k^p(\xi) \exp\left(\frac{\xi-t}{\tau_0}\right)d\xi\right]

$](http://www.dsprelated.com/josimages_new/pasp/img2179.png)

Relating to Eq.![]() (9.21) above, we have

(9.21) above, we have

![]() (N/mm

(N/mm![]() ).

).

Piano Hammer Mass

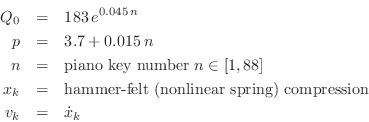

The piano-hammer mass may be approximated across the keyboard by [487]

Pluck Modeling

The piano-hammer model of the previous section can also be configured

as a plectrum by making the mass and damping small or zero, and

by releasing the string when the contact force exceeds some threshold

![]() . That is, to a first approximation, a plectrum can be modeled

as a spring (linear or nonlinear) that disengages when either

it is far from the string or a maximum spring-force is exceeded. To

avoid discontinuities when the plectrum and string engage/disengage,

it is good to taper both the damping and spring-constant to zero at

the point of contact (as shown

below).

. That is, to a first approximation, a plectrum can be modeled

as a spring (linear or nonlinear) that disengages when either

it is far from the string or a maximum spring-force is exceeded. To

avoid discontinuities when the plectrum and string engage/disengage,

it is good to taper both the damping and spring-constant to zero at

the point of contact (as shown

below).

Starting with the piano-hammer impedance of Eq.![]() (9.19) and setting

the mass

(9.19) and setting

the mass ![]() to infinity (the plectrum holder is immovable), we define

the plectrum impedance as

to infinity (the plectrum holder is immovable), we define

the plectrum impedance as

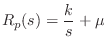

The force-wave reflectance of impedance ![]() in Eq.

in Eq.![]() (9.22), as

seen from the string, may be computed exactly as in

§9.3.1:

(9.22), as

seen from the string, may be computed exactly as in

§9.3.1:

If the spring damping is much greater than twice the string wave impedance (

Again following §9.3.1, the transmittance for force waves is given by

If the damping ![]() is set to zero, i.e., if the plectrum is to be modeled

as a simple linear spring, then the impedance becomes

is set to zero, i.e., if the plectrum is to be modeled

as a simple linear spring, then the impedance becomes

![]() ,

and the force-wave reflectance becomes

[128]

,

and the force-wave reflectance becomes

[128]

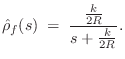

Digital Waveguide Plucked-String Model

When plucking a string, it is necessary to detect ``collisions''

between the plectrum and string. Also, more complete plucked-string

models will allow the string to ``buzz'' on the frets and ``slap''

against obstacles such as the fingerboard. For these reasons, it is

convenient to choose displacement waves for the waveguide

string model. The reflection and transmission filters for

displacement waves are the same as for velocity, namely,

![]() and

and

![]() .

.

As in the mass-string collision case, we obtain the one-filter

scattering-junction implementation shown in Fig.9.23. The filter

![]() may now be digitized using the bilinear transform as

previously (§9.3.1).

may now be digitized using the bilinear transform as

previously (§9.3.1).

Incorporating Control Motion

Let ![]() denote the vertical position of the mass

in Fig.9.22. (We still assume

denote the vertical position of the mass

in Fig.9.22. (We still assume ![]() .) We can think of

.) We can think of

![]() as the position of the control point on the

plectrum, e.g., the position of the ``pinch-point'' holding the

plectrum while plucking the string. In a harpsichord,

as the position of the control point on the

plectrum, e.g., the position of the ``pinch-point'' holding the

plectrum while plucking the string. In a harpsichord, ![]() can be

considered the jack position [347].

can be

considered the jack position [347].

Also denote by ![]() the rest length of the spring

the rest length of the spring ![]() in Fig.9.22, and let

in Fig.9.22, and let

![]() denote the

position of the ``end'' of the spring while not in contact with the

string. Then the plectrum makes contact with the string when

denote the

position of the ``end'' of the spring while not in contact with the

string. Then the plectrum makes contact with the string when

Let the subscripts ![]() and

and ![]() each denote one side of the scattering

system, as indicated in Fig.9.23. Then, for example,

each denote one side of the scattering

system, as indicated in Fig.9.23. Then, for example,

![]() is the displacement of the string on the left (side

is the displacement of the string on the left (side

![]() ) of plucking point, and

) of plucking point, and ![]() is on the right side of

is on the right side of ![]() (but

still located at point

(but

still located at point ![]() ). By continuity of the string, we have

). By continuity of the string, we have

When the spring engages the string (![]() ) and begins to compress,

the upward force on the string at the contact point is given by

) and begins to compress,

the upward force on the string at the contact point is given by

During contact, force equilibrium at the plucking point requires (cf. §9.3.1)

where

Substituting

![]() and taking the Laplace transform yields

and taking the Laplace transform yields

![$\displaystyle Y(s)

\eqsp Y_1^+(s) + Y_2^-(s) + \frac{1}{2R} \frac{F_k(s)}{s}

\eqsp Y_1^+(s) + Y_2^-(s) + \frac{k}{2Rs}\left[Y_e(s) - Y(s)\right].

$](http://www.dsprelated.com/josimages_new/pasp/img2224.png)

![\begin{eqnarray*}

Y(s) &=& \left[1-\hat{\rho}_f(s)\right]\cdot

\left[Y_1^+(s)+Y...

...)\cdot \left\{Y_e(s)

- \left[Y_1^+(s)+Y_2^-(s)\right]\right\},

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img2226.png)

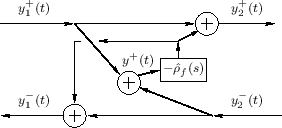

where, as first noted at Eq.![]() (9.24) above,

(9.24) above,

![\begin{eqnarray*}

Y_d^+ &=& Y_e - \left(Y_1^+ + Y_2^-\right)\\ [5pt]

Y_1^- &=& Y...

...\hat{\rho}_f Y_d^+\\ [5pt]

Y_2^+ &=& Y_1^+ + \hat{\rho}_f Y_d^+.

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img2228.png)

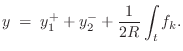

This system is diagrammed in Fig.9.24. The manipulation of the

minus signs relative to Fig.9.23 makes it convenient for

restricting ![]() to positive values only (as shown in the

figure), corresponding to the plectrum engaging the string going up.

This uses the approximation

to positive values only (as shown in the

figure), corresponding to the plectrum engaging the string going up.

This uses the approximation

![]() ,

which is exact when

,

which is exact when

![]() , i.e., when the plectrum does not affect

the string displacement at the current time. It is therefore exact at

the time of collision and also applicable just after release.

Similarly,

, i.e., when the plectrum does not affect

the string displacement at the current time. It is therefore exact at

the time of collision and also applicable just after release.

Similarly,

![]() can be used to trigger a release of the

string from the plectrum.

can be used to trigger a release of the

string from the plectrum.

Successive Pluck Collision Detection

As discussed above, in a simple 1D plucking model, the plectrum comes

up and engages the string when

![]() , and above some

maximum force the plectrum releases the string. At this point, it is

``above'' the string. To pluck again in the same direction, the

collision-detection must be disabled until we again have

, and above some

maximum force the plectrum releases the string. At this point, it is

``above'' the string. To pluck again in the same direction, the

collision-detection must be disabled until we again have ![]() ,

requiring one bit of state to keep track of that.10.16 The harpsichord jack

plucks the string only in the ``up'' direction due to its asymmetric

behavior in the two directions [143]. If only

``up picks'' are supported, then engagement can be suppressed after a

release until

,

requiring one bit of state to keep track of that.10.16 The harpsichord jack

plucks the string only in the ``up'' direction due to its asymmetric

behavior in the two directions [143]. If only

``up picks'' are supported, then engagement can be suppressed after a

release until ![]() comes back down below the envelope of string

vibration (e.g.,

comes back down below the envelope of string

vibration (e.g.,

![]() ). Note that

intermittent disengagements as a plucking cycle begins are normal;

there is often an audible ``buzzing'' or ``chattering'' when plucking

an already vibrating string.

). Note that

intermittent disengagements as a plucking cycle begins are normal;

there is often an audible ``buzzing'' or ``chattering'' when plucking

an already vibrating string.

When plucking up and down in alternation, as in the tremolo

technique (common on mandolins), the collision detection alternates

between ![]() and

and ![]() , and again a bit of state is needed to

keep track of which comparison to use.

, and again a bit of state is needed to

keep track of which comparison to use.

Plectrum Damping

To include damping ![]() in the plectrum model, the load impedance

in the plectrum model, the load impedance

![]() goes back to Eq.

goes back to Eq.![]() (9.22):

(9.22):

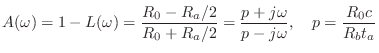

Digitization of the Damped-Spring Plectrum

Applying the bilinear transformation (§7.3.2) to the reflectance

![]() in Eq.

in Eq.![]() (9.23) (including damping) yields the

following first-order digital force-reflectance filter:

(9.23) (including damping) yields the

following first-order digital force-reflectance filter:

The transmittance filter is again

Feathering

Since the pluck model is linear, the parameters are not signal-dependent. As a result, when the string and spring separate, there is a discontinuous change in the reflection and transmission coefficients. In practice, it is useful to ``feather'' the switch-over from one model to the next [470]. In this instance, one appealing choice is to introduce a nonlinear spring, as is commonly used for piano-hammer models (see §9.3.2 for details).

Let the nonlinear spring model take the form

The foregoing suggests a nonlinear tapering of the damping ![]() in

addition to the tapering the stiffness

in

addition to the tapering the stiffness ![]() as the spring compression

approaches zero. One natural choice would be

as the spring compression

approaches zero. One natural choice would be

In summary, the engagement and disengagement of the plucking system can be ``feathered'' by a nonlinear spring and damper in the plectrum model.

Piano Synthesis

The main elements of the piano we need to model are the

- hammer (§9.3.2),

- string (Chapter 6, §6.6),

- bridge (§9.2.1), and

- soundboard, enclosure, acoustic space, mic placement, etc.

From the bridge (which runs along the soundboard) to each ``virtual microphone'' (or ``virtual ear''), the soundboard, enclosure, and listening space can be well modeled as a high-order LTI filter characterized by its impulse response (Chapter 8). Such long impulse responses can be converted to more efficient recursive digital filters by various means (Chapter 3,§8.6.2).

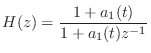

A straightforward piano model along the lines indicated above turns out to be relatively expensive computationally. Therefore, we also discuss, in §9.4.4 below, the more specialized commuted piano synthesis technique [467], which is capable of high quality piano synthesis at low cost relative to other model-based approaches. It can be seen as a hybrid method in which the string is physically modeled, the hammer is represented by a signal model, and the acoustic resonators (soundboard, enclosure, etc.) are handled by ordinary sampling (of their impulse response).

Stiff Piano Strings

Piano strings are audibly inharmonic due to stiffness [211,210]. General stiff-string modeling was introduced in §6.9. In this section, we discuss further details specific to piano strings.

The main effect of string stiffness is to stretch the partial overtone series, so that piano tones are not precisely harmonic [144]. As a result, piano tuners typically stretch the tuning of the piano slightly. For example, the total amount of tuning stretch from the lowest to highest note has been measured to be approximately 35 cents on a Kurzweil PC88, 45 cents on a Steinway Model M, and 60 cents on a Steinway Model D.10.17

Piano String Wave Equation

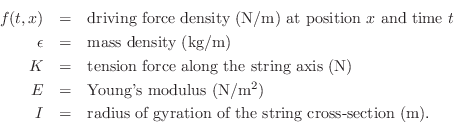

A wave equation suitable for modeling linearized piano strings is given by [77,45,317,517]

where the partial derivative notation

Young's modulus and the radius of gyration are defined in Appendix B.

The first two terms on the right-hand side of Eq.![]() (9.30) come from

the ideal string wave equation (see Eq.

(9.30) come from

the ideal string wave equation (see Eq.![]() (C.1)), and they model

transverse acceleration and transverse restoring force due to tension,

respectively. The term

(C.1)), and they model

transverse acceleration and transverse restoring force due to tension,

respectively. The term ![]() approximates the transverse

restoring force exerted by a stiff string when it is bent. In an

ideal string with zero diameter, this force is zero; in an ideal

rod (or bar), this term is dominant [317,261,169].

The final two terms provide damping. The damping associated

with

approximates the transverse

restoring force exerted by a stiff string when it is bent. In an

ideal string with zero diameter, this force is zero; in an ideal

rod (or bar), this term is dominant [317,261,169].

The final two terms provide damping. The damping associated

with ![]() is frequency-independent, while the damping due

is frequency-independent, while the damping due ![]() increases with frequency.