Signal/Vector Reconstruction from Projections

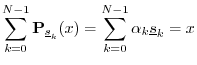

We now arrive at the main result of this section:

Theorem: The projections of any vector $\underline{x}\in{\bf C}^N$ onto any orthogonal basis set for ${\bf C}^N$ can be summed to reconstruct $\underline{x}$ exactly.

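Proof: Let $\{\underline{s}_0,\ldots,\underline{s}_{N-1}\}$ denote any orthogonal basis set for ${\bf C}^N$. Then since $\underline{x}$ is in the space spanned by these vectors, we have

$$
\underline{x} = \alpha_0\underline{s}_0 + \alpha_1\underline{s}_1 + \cdots + \alpha_{N-1}\underline{s}_{N-1}
$$

for some (unique) scalars $\alpha_0,\ldots,\alpha_{N-1}$. Because the inner product is linear in its first argument, the projection operator is linear, so the projection of $\underline{x}$ onto $\underline{s}_k$ is

$$
{\bf P}_{\underline{s}_k}(\underline{x}) = \alpha_0{\bf P}_{\underline{s}_k}(\underline{s}_0) + \alpha_1{\bf P}_{\underline{s}_k}(\underline{s}_1) + \cdots + \alpha_{N-1}{\bf P}_{\underline{s}_k}(\underline{s}_{N-1}).
$$

Since the basis vectors are orthogonal, the projection of $\underline{s}_l$ onto $\underline{s}_k$ is zero for $l\neq k$:

$$
{\bf P}_{\underline{s}_k}(\underline{s}_l) \triangleq
\frac{\left<\underline{s}_l,\underline{s}_k\right>}{\left\Vert\underline{s}_k\right\Vert^2}\,\underline{s}_k
= \left\{\begin{array}{ll}
\underline{0}, & l\neq k \\[5pt]
\underline{s}_k, & l=k. \\
\end{array} \right.
$$

Only the $k$th term therefore survives, giving ${\bf P}_{\underline{s}_k}(\underline{x}) = \alpha_k\underline{s}_k$. Summing the projections over all basis vectors yields

$$
\sum_{k=0}^{N-1}{\bf P}_{\underline{s}_k}(\underline{x}) = \sum_{k=0}^{N-1}\alpha_k\underline{s}_k = \underline{x},
$$

which is the expansion of $\underline{x}$ we started from. This completes the proof.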
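The theorem can be checked numerically. The following is a minimal sketch in plain Python, not code from the text: the helper names `inner`, `project`, `dft_basis`, and `reconstruct` are illustrative, and the choice of the $N$ DFT sinusoids as the orthogonal basis is an assumption made only for this example (any orthogonal basis for ${\bf C}^N$ would do).

```python
import cmath

def inner(u, v):
    """Inner product <u, v> = sum over n of u[n] * conj(v[n])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def project(x, s):
    """Orthogonal projection of x onto s: (<x, s> / ||s||^2) * s."""
    coeff = inner(x, s) / inner(s, s).real
    return [coeff * sn for sn in s]

def dft_basis(N):
    """Assumed example basis: s_k[n] = exp(j*2*pi*k*n/N), k = 0..N-1."""
    return [[cmath.exp(2j * cmath.pi * k * n / N) for n in range(N)]
            for k in range(N)]

def reconstruct(x, basis):
    """Sum the projections of x onto every vector in the basis."""
    total = [0j] * len(x)
    for s in basis:
        p = project(x, s)
        total = [t + pn for t, pn in zip(total, p)]
    return total

# Arbitrary test vector in C^4 (values chosen only for illustration).
x = [1 + 2j, -3.0, 0.5j, 4 - 1j]
basis = dft_basis(len(x))
x_hat = reconstruct(x, basis)

# Largest elementwise difference between x and its reconstruction;
# expected to be on the order of floating-point rounding error.
max_error = max(abs(a - b) for a, b in zip(x, x_hat))
print(max_error)
```

If the basis vectors were not mutually orthogonal, the cross terms ${\bf P}_{\underline{s}_k}(\underline{s}_l)$ would no longer vanish and the summed projections would in general differ from the original vector.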
