How to Find a Fast Floating-Point atan2 Approximation

Context

Over a short period of time, I came across nearly identical approximations of the two parameter arctangent function, atan2, developed by different companies, in different countries, and even in different decades. Fascinated with how the coefficients used in these approximations were derived, I set out to find them. This atan2 implementation is based around a rational approximation of arctangent on the domain -1 to 1:$$ atan(z) \approx \dfrac{z}{1.0 + 0.28z^2}$$

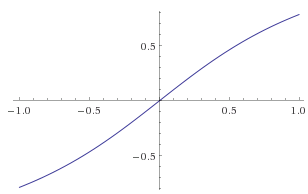

Arctangent [-1,1]

Looking at the arctangent, it visually resembles a cubic polynomial. So in addition to deriving the rational approximation, I set out to find a polynomial version too. It turns out there is a 3rd order polynomial that is a close match to the rational approximation:

$$ atan(z) \approx 0.9724x - 0.1919x^3$$

The rest of this post will cover how to reproduce the polynomial coefficients, how to implement them in the atan2 context, and wrapping up with some other thoughts.

(Note: I find it odd that this 3rd order polynomial did not come up in my research. I believe this is because the rational approximation was developed for fixed-point math and was adopted for floating-point later, but I did not find evidence of this [3].)

Arctangent Approximation

The Remez Method is an iterative, minimax algorithm that for a given function converges to a polynomial (or rational approximation) which minimizes the max error. Conveniently, the Boost libraries includes a robust implementation in the math::tools namespace with the class remez_minimax [4]. The class's numerator() and denominator() contain the coefficients at the end of the iterative process.

Although I planned to target single precision floats, it was necessary to use double precision to get useful coefficients. Below is the code necessary to initialize the remez_minimax class for atan and run iterations to find the coefficients for the polynomial approximation. At a certain point, max_change stays constant or becomes periodic and the process can stop.

math::tools::remez_minimax<double> approximate_atan(

[](const double& x) { return atan(x); }, // double precision required

3, 0, // 3rd degree polynomial

-1, 1, // -1 to 1 range

false, 0, 0, 64); // other params

do

{

approximate_atan.iterate();

} while (approximate_atan.max_change() > 0.00001);

const math::tools::polynomial<double> coefficients = approximate_atan.numerator();

const double max_error = approximate_atan.max_error();

After running this, the coefficients of {0.0, 0.97239411, 0.0, -0.19194795} were isolated, and the max error was just slightly less than 0.005 radians, which was comparable to rational version.

I confirmed the results twice:

- On Wolfram Alpha with input: sup(abs(atan(x) - 0.9724*x + 0.1919(x^3)) from -1 to 1

- Testing every single precision floating point value between -1 and 1 compared against the atan in MSVC.

The other metric I looked at was average error. Although the max error was comparable, the average error was 40% higher. If the max error is acceptable for any value (about 0.28 degrees), then it is likely also acceptable for all values.

Measuring the average error of atan polynomial and rational approximation functions, the polynomial version is nearly 40% higher.

Implementing atan2

Set with a simple approximation of arctangent over the range [-1,1], the next step is integrating it into an atan2 implementation. To handle values outside the [-1,1] range, the following arctan identity is useful:$$ arctan(y/x) = \pi/2 - arctan(x/y), \text{if} \left|y/x\right| > 1.$$

To handle the different permutations of signs of y and x, I followed the definition of atan2 from Wikipedia [1]. There are comments to call out each of the 7 cases. The two extra cases handle the the y term being greater than x in which the above identity is needed.

atan// Polynomial approximating arctangenet on the range -1,1.

// Max error < 0.005 (or 0.29 degrees)

float ApproxAtan(float z)

{

const float n1 = 0.97239411f;

const float n2 = -0.19194795f;

return (n1 + n2 * z * z) * z;

}

atan2

float ApproxAtan2(float y, float x)

{

if (x != 0.0f)

{

if (fabsf(x) > fabsf(y))

{

const float z = y / x;

if (x > 0.0)

{

// atan2(y,x) = atan(y/x) if x > 0

return ApproxAtan(z);

}

else if (y >= 0.0)

{

// atan2(y,x) = atan(y/x) + PI if x < 0, y >= 0

return ApproxAtan(z) + PI;

}

else

{

// atan2(y,x) = atan(y/x) - PI if x < 0, y < 0

return ApproxAtan(z) - PI;

}

}

else // Use property atan(y/x) = PI/2 - atan(x/y) if |y/x| > 1.

{

const float z = x / y;

if (y > 0.0)

{

// atan2(y,x) = PI/2 - atan(x/y) if |y/x| > 1, y > 0

return -ApproxAtan(z) + PI_2;

}

else

{

// atan2(y,x) = -PI/2 - atan(x/y) if |y/x| > 1, y < 0

return -ApproxAtan(z) - PI_2;

}

}

}

else

{

if (y > 0.0f) // x = 0, y > 0

{

return PI_2;

}

else if (y < 0.0f) // x = 0, y < 0

{

return -PI_2;

}

}

return 0.0f; // x,y = 0. Could return NaN instead.

}

Notice that only finite floating point values are handled. Permutations of +/- infinity and +/- 0 all have defined values under the IEEE standard. I decided to not include these for this this function.

Looking for a Bit More Speed

The goal of the approximation is to be faster than the precise implementation and with the math simplified (at least as much as I could show), the remaining optimization targeted the branching and reducing the amount of floating point comparisons.

It is possible to eliminate the three branches that determined the +/-PI or +/-PI/2 offsets to atan with bit manipulation. The nice thing, and it is even suggested by the disassembly, was that some of the logic could be performed on the ALU and FPU in parallel. Overall this made an impact of about 5%, but at the cost of being harder to read.

float ApproxAtan2(float y, float x)

{

const float n1 = 0.97239411f;

const float n2 = -0.19194795f;

float result = 0.0f;

if (x != 0.0f)

{

const union { float flVal; uint32 nVal; } tYSign = { y };

const union { float flVal; uint32 nVal; } tXSign = { x };

if (fabsf(x) >= fabsf(y))

{

union { float flVal; uint32 nVal; } tOffset = { PI };

// Add or subtract PI based on y's sign.

tOffset.nVal |= tYSign.nVal & 0x80000000u;

// No offset if x is positive, so multiply by 0 or based on x's sign.

tOffset.nVal *= tXSign.nVal >> 31;

result = tOffset.flVal;

const float z = y / x;

result += (n1 + n2 * z * z) * z;

}

else // Use atan(y/x) = pi/2 - atan(x/y) if |y/x| > 1.

{

union { float flVal; uint32 nVal; } tOffset = { PI_2 };

// Add or subtract PI/2 based on y's sign.

tOffset.nVal |= tYSign.nVal & 0x80000000u;

result = tOffset.flVal;

const float z = x / y;

result -= (n1 + n2 * z * z) * z;

}

}

else if (y > 0.0f)

{

result = PI_2;

}

else if (y < 0.0f)

{

result = -PI_2;

}

return result;

}

Example disassembly of ALU and FPU operations being interleaved:

...

tOffset.nVal |= tYSign.nVal & 0x80000000u;

and ecx, 80000000h

or ecx, dword ptr[tOffset]

result = tOffset.flVal;

float z = y / x;

divss xmm4, xmm5

tOffset.nVal *= tXSign.nVal >> 31;

shr eax, 1Fh

imul ecx, eax

result += (n1 + n2 * z * z) * z;

...

SIMD Optimization

For work, I re-wrote ApproxAtan2 using SSE2 intrinsics to calculate 4 atan2 results simultaneously. Taking out any overhead of packing the 4 sets of x and y values, it ran about 40% faster than 4 separate calls to ApproxAtan2 run in serial. The function ended up being 38 intrinsic instructions which intuitively feels a bit more than necessary, but I limited my time to look at it. I might go back and try writing it again with the goals of being: more readable, use less instructions, and allow myself to use newer instruction sets.

Results

The tests I ran were a number of different inputs representing some different contexts with about 1 billion pairs of values. The values below are in seconds and have had the baseline, or time to generate inputs, removed. (I only ran one comparable test of the SIMD version vs the polynomial approximation.)

The tests are inputs of what I expected in typical geometric applications. I also included a contrived test to demonstrate how the DIVSS operation on i7 can have dramatically different performance based on the input. The polynomial approximation with reduced branching seems to be 25% to 50% faster.

| Test | std::atan2 | rational approx | polynomial approx | polynomial SIMD |

|---|---|---|---|---|

| 1 | 100.3 | 24.1 | 18.4 | 46.0 (11.5) |

| 2 | 70.1 | 14.4 | 7.4 | na |

| 3 | 70.5 | 14.3 | 8.1 | na |

| 4 | 8.3 | 51.2 | 50.8 | na |

|

||||

Limitations of Remez Minimax

The Boost class for the Remez minimax algorithm is quite nice in that you can test a number of polynomial and rational combinations to find the best set of coefficients for the required performance. I have tried this for arccos and tanh as well. However the algorithm only minimizes the error for polynomial and rational approximations when there are other types of function approximations. Modern FPU architectures can perform sqrt comparably fast to division for example.

Recursive functions or continued fractions are also often decent approximations. In the case of arccos there are long known approximations using both sqrt and recursion which are superior to polynomial and rational functions found using the Remez method. There are also cases where the Remez algorithm can get stuck or become unstable, although there are work arounds.

Links

- [1] Atan2 - Handling Zero and Planes: https://en.wikipedia.org/wiki/Atan2#Definition_and_computation

- [2] The Remez Method: http://www.boost.org/doc/libs/1_46_1/libs/math/doc/sf_and_dist/html/math_toolkit/backgrounders/remez.html

- [3] Performing Efficient Arctangent Approximation: http://www.embedded.com/design/other/4216719/Performing-efficient-arctangent-approximation

- [4] Boost Remez source code: http://www.boost.org/doc/libs/1_55_0/boost/math/tools/remez.hpp

- Comments

- Write a Comment Select to add a comment

So I noticed that while you approximated \(\arctan(z)\) over \([-1,1]\) you were only actually applying it to \([0,1]\) - which means we can do better by fitting a cubic on the proper interval. This does cost one extra multiply and two extra adds, as the cubic won't be symmetric anymore. (Coefficients are approxomate, but yield similar error.)

\[\arctan(z) \approx -0.060317z^3 -0.198146z^2 + 1.044261z - 0.002178, z \in [0,1]\]

for two more multiplies and another add a quartic does even better

\[\arctan(z) \approx 0.141499z^4 - 0.343315z^3 - 0.016224z^2 + 1.003839z - 0.000158\]

| Method | RMS Error | Max Error | Time |

| \(z \over 1+az\) | 0.002348 | 0.007385 | 0.716351 |

| \(az^3+cz\) | 0.003488 | 0.004952 | 0.449856 |

| \(az^3+bz^2+cz+d\) | 0.0006843 | 0.002178 | 0.563637 |

| \(az^4+bz^3+cz^2+dz+e\) | 0.00006394 | 0.0002427 | 0.654011 |

| libm | 1.407e-17 | 1.110e-16 | 3.020070 |

Error estimates are in radians over 20k evenly spaced points on the unit circle.

Time is to sum up arctagents of \(2^{26}\) points evenly distributed in the unit square.I couldn't get the tiny number run to replicate, except libm did get faster (LLVM 3.9.1 on an i5-3337).

Hi imuli,

Give me a week to pull up this project again and refresh myself. But two quick questions:

1. How did you come to the conclusion that I was only using the range [0,1]?

2. What did you use to locate the coefficients? As I mentioned I was using the boost implementation of remez_minimax.

(I will also call out that the rms in my image left out a divide by 2, which you have picked up on.)

Thanks, very interest to follow up on this. The extra add/multiply would be well worth the precision.

1. Apparently by misreading something, because your code obviously is using the full range! We don't need to though, because \(\arctan(-z) = -\arctan(z)\).

double

atan2_approx(double y, double x){

double ay = fabs(y), ax = fabs(x);

int invert = ay > ax;

double z = invert ? ax/ay : ay/ax; // [0,1]

double th = atan_approx(z); // [0,π/4]

if(invert) th = M_PI_2 - th; // [0,π/2]

if(x < 0) th = M_PI - th; // [0,π]

th = copysign(th, y); // [-π,π]

}

The three branches can be eliminated with some cleverness, but I'm going for clarity here.

2. I used gnuplot's fit function. It actually gave slightly better coefficients for the symmetric cubic case, but they weren't *that* much better.

Spent some time looking at your solution. What I like the most is how succinct and more readable it becomes when dealing with the absolute values of x and y. If I use your approach to update my SIMD implementation, I'll be sure to post up here. Thank you again, this was very insightful!

Be warned, imuli's solution has a critical problem. It might end up giving you values outside of [-π,π] when z is close to zero.

See how imuli's polygonal approximation does not pass from the origin and there is a small offset. That causes the problem.

Great, thank you. I'm going to process this next week when I have some free time.

Hi imuli

I read your article with interest.I need an approximation as precise as possible in the range of[0.1]. Calculation time does not matter.I checked it for accuracy right away, yours Approximation delivered the best result with the least amount of effort.What kind of approximation is it ? and how do I get more accurate Coefficients and as well as for higher order.Do you have a link?

Thank you in advance. Toni.

They are Polynomial Regressions, there are a lot of tools out there to create them, it looks like I used gnuplot which is a graph plotting tool. Higher degree polynomials can give you more precise results, I am not sure at what point you'll hit diminishing returns.

For unbounded accurancy, this answer on stack overflow looks like it will converge in reasonable time for results that aren't right around π/4. There is also openlibm's implementation.

Thanks for the prompt reply.

I tried 3 different formulas:

......

X = 0.166666666666;

.....// x2 to x11 are power of X

X11 = pow(X,11);

}

void calc(){

atang1= Pidiv4 * X - X*(X -1)*(0.2447 + 0.0663* X); // =0.166420534253

atang2= 0.141499*X4 -0.343315*X3 -0.016224*X2 +1.003839*X -0.000158;

// =0.165217608213

atang3= X - X3/3 + X5/5 - X7/7; // =0.165148675442

atang4= X - X3/3 + X5/5 - X7/7 + X9/9; // =0.165148690343

atang5= X - X3/3 + X5/5 - X7/7 + X9/9 - X11/11; // =0.165148690343

}

// Correct result is: 0.16514867740814

my math skills are quite limited.

(wikipedia)Polynominal Regresion (atang2) = unfortunately could not

find out how to calculate the coefficients, could You help me please

I noticed the title of your post and at first thought to myself, "Uh oh, another attempt at numerical approximation" -- but it is very well-done and I applaud your work.

I wish there were a good Remez rational approximation feature in Python so I could experiment without having to drop back down into C++ hell.

Thank you. It is interesting you mention Python. When I was looking for an implementation of Remez approximation, my goal was to find an R package. At least at the time I was doing this research, I only found the Boost implementation and Matlab (which I no longer have a license for).

I'm glad you found this thing, but please give credit.

http://f3.to/portfolio/math/fastatan2.htm I had this up since 2008.

Here's the version for microcontrollers that can only do 16 bit integer math, such as the Picaxe:

http://f3.to/portfolio/math/fastatan2_integer.htm

Thank you

Can you be more specific?

I looked at your pages, and these seem to be different approximations (but I could be mistaken). So I am not sure what you are asking to be credited for. The rational approximation at the very beginning of the article?

- Nic

Please play around with my values and you may get more precise results :)

I see. Thank you. Yes, next time I come back to benchmark atan2, I can try to adapt your equation to work with in my test. At this time, none of the projects I am on are bottle-necked on atan2, so it is a lower priority. But any new results, I will post back to this thread.

Hello Nic,

This article was very insightful. I'm very new to all this but was able to understand to some level after reading this article. Good job and thanks. Did you add that update from imuli's comment? Is atan2 c function also updated as per that information provided by imuli? I'm gonna use this atan2 function directly(as I cannot use math.h lib). When I run python math.atan2 function and the above atan2 function, it has slight error 0.04. With that update, can we reduce that error some more?

Thanks,

Manju

To post reply to a comment, click on the 'reply' button attached to each comment. To post a new comment (not a reply to a comment) check out the 'Write a Comment' tab at the top of the comments.

Please login (on the right) if you already have an account on this platform.

Otherwise, please use this form to register (free) an join one of the largest online community for Electrical/Embedded/DSP/FPGA/ML engineers: