FDN Stability

Stability of the FDN is assured when some norm [451] of

the state vector

![]() decreases over time when the input signal is

zero [220, ``Lyapunov stability theory'']. That is, a

sufficient condition for FDN stability is

decreases over time when the input signal is

zero [220, ``Lyapunov stability theory'']. That is, a

sufficient condition for FDN stability is

for all

![$\displaystyle \mathbf{x}(n+1) = \mathbf{A}\left[\begin{array}{c} x_1(n-M_1) \\ [2pt] x_2(n-M_2) \\ [2pt] x_3(n-M_3)\end{array}\right].

$](http://www.dsprelated.com/josimages_new/pasp/img567.png)

for all

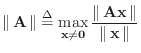
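To make the Lyapunov argument concrete, the following Python sketch runs a zero-input three-line FDN and records the total stored energy, taken here (an assumption of this sketch) to be the sum of squares of every sample held in the delay lines. The delay lengths `[3, 5, 7]`, the unit-impulse initial state, and the feedback matrix (a Householder reflection $\mathbf{I} - \frac{2}{N}\mathbf{1}\mathbf{1}^T$, optionally scaled by $g = 0.9$) are all hypothetical choices, and the helper names `householder` and `fdn_stored_energy` are illustrative only.

```python
from collections import deque


def householder(n):
    """Q = I - (2/n) * ones(n, n): a real orthogonal N x N matrix (hypothetical choice)."""
    return [[(1.0 if i == j else 0.0) - 2.0 / n for j in range(n)] for i in range(n)]


def fdn_stored_energy(A, M, steps):
    """Run a zero-input FDN and return the stored energy before each step.

    Stored energy = sum of squares of every sample held in every delay line,
    used here as the (assumed) Lyapunov function.
    """
    N = len(M)
    # Each delay line holds M_i samples: newest on the left, oldest on the right.
    lines = [deque([0.0] * m) for m in M]
    lines[0][0] = 1.0  # hypothetical initial state: a unit impulse in line 1
    energies = []
    for _ in range(steps):
        energies.append(sum(s * s for line in lines for s in line))
        outs = [line.pop() for line in lines]  # delay-line outputs x_i(n - M_i)
        ins = [sum(A[i][j] * outs[j] for j in range(N)) for i in range(N)]
        for line, v in zip(lines, ins):
            line.appendleft(v)  # feed A times the outputs back into the line inputs
    return energies


g = 0.9
Q = householder(3)
A = [[g * q for q in row] for row in Q]
E = fdn_stored_energy(A, [3, 5, 7], 60)
print(E[0], E[-1])
```

With $g = 0.9$ the recorded energy never increases and drops each time a nonzero sample leaves a delay line; passing the unscaled `Q` instead keeps it constant up to rounding, which corresponds to the lossless case.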

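The matrix norm corresponding to any vector norm $\Vert\mathbf{x}\Vert$ may be defined for the matrix $\mathbf{A}$ as

$$\left\Vert\mathbf{A}\right\Vert \triangleq \max_{\mathbf{x}\neq\mathbf{0}} \frac{\left\Vert\mathbf{A}\mathbf{x}\right\Vert}{\left\Vert\mathbf{x}\right\Vert}$$

where the maximum is taken over all nonzero vectors $\mathbf{x}$. By construction, $\Vert\mathbf{A}\mathbf{x}\Vert \le \Vert\mathbf{A}\Vert\,\Vert\mathbf{x}\Vert$, so a feedback matrix with $\Vert\mathbf{A}\Vert < 1$ strictly shrinks every nonzero vector it maps.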
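As an illustration of the induced-norm definition, the sketch below (a hypothetical example, not from the text) uses the max-magnitude vector norm $\Vert\mathbf{x}\Vert_\infty$, whose induced matrix norm is the largest absolute row sum, and checks it against a brute-force search of the ratio $\Vert\mathbf{A}\mathbf{x}\Vert/\Vert\mathbf{x}\Vert$ over a small grid of nonzero vectors. The matrix and grid values are made up for the demonstration.

```python
import itertools


def matvec(A, x):
    """Matrix-vector product for lists of lists."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def inf_norm(x):
    """Max-magnitude vector norm ||x||_inf."""
    return max(abs(v) for v in x)


def induced_inf_norm(A):
    """Matrix norm induced by ||.||_inf: the largest absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)


def max_ratio_on_grid(A, values):
    """Brute-force max of ||A x|| / ||x|| over nonzero x with entries from `values`."""
    best = 0.0
    for x in itertools.product(values, repeat=len(A[0])):
        if any(v != 0 for v in x):
            best = max(best, inf_norm(matvec(A, x)) / inf_norm(x))
    return best


A = [[0.5, -0.3],
     [0.2, 0.4]]
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
print(induced_inf_norm(A), max_ratio_on_grid(A, grid))
```

For this matrix the row-sum formula gives 0.8, and the grid search reaches the same value at $\mathbf{x} = [1, -1]^T$, so no sampled ratio exceeds the induced norm.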

It can be shown [167] that the spectral norm of a matrix $\mathbf{A}$ (the matrix norm induced by the Euclidean vector norm) is given by the largest singular value of $\mathbf{A}$ ("$\sigma_1(\mathbf{A})$"), and that this is equal to the square root of the largest eigenvalue of $\mathbf{A}^T\mathbf{A}$, where $\mathbf{A}^T$ denotes the matrix transpose of the real matrix $\mathbf{A}$.
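The relation $\sigma_1(\mathbf{A}) = \sqrt{\lambda_{\max}(\mathbf{A}^T\mathbf{A})}$ suggests a simple way to estimate the spectral norm without a full singular value decomposition: power iteration on $\mathbf{A}^T\mathbf{A}$. This pure-Python sketch assumes the normalized all-ones start vector is not orthogonal to the dominant eigenvector; in practice a library routine such as NumPy's `numpy.linalg.norm(A, 2)` would normally be preferred. The function names are illustrative.

```python
import math


def gram(A):
    """A^T A for a real (rows x cols) matrix A given as lists of lists."""
    cols = len(A[0])
    return [[sum(row[i] * row[j] for row in A) for j in range(cols)] for i in range(cols)]


def spectral_norm(A, iters=200):
    """sigma_1(A) = sqrt(largest eigenvalue of A^T A), estimated by power iteration.

    Assumes the normalized all-ones start vector is not orthogonal to the
    dominant eigenvector of A^T A.
    """
    G = gram(A)  # symmetric positive semidefinite
    n = len(G)
    v = [1.0 / math.sqrt(n)] * n
    lam = 0.0
    for _ in range(iters):
        w = [sum(g * x for g, x in zip(row, v)) for row in G]
        lam = sum(a * b for a, b in zip(v, w))  # Rayleigh quotient v^T G v, with ||v|| = 1
        nrm = math.sqrt(sum(x * x for x in w))
        if nrm == 0.0:
            break  # v lies in the null space of A^T A; the sketch stops with lam = 0
        v = [x / nrm for x in w]
    return math.sqrt(max(lam, 0.0))


print(spectral_norm([[1.0, 1.0], [0.0, 1.0]]))
```

The matrix $\left[\begin{array}{cc}1 & 1\\ 0 & 1\end{array}\right]$ has both eigenvalues equal to 1, yet its spectral norm is $(1+\sqrt{5})/2 \approx 1.618$, so for non-orthogonal matrices the spectral norm can differ substantially from the eigenvalue magnitudes.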

Since every orthogonal matrix $\mathbf{Q}$ has spectral norm 1, a wide variety of stable feedback matrices can be parametrized as

$$\mathbf{A} = \mathbf{Q}\,{\bm \Gamma}$$

where $\mathbf{Q}$ is any orthogonal matrix and ${\bm \Gamma}$ is a diagonal matrix whose entries are less than 1 in magnitude:

$${\bm \Gamma}= \left[ \begin{array}{cccc}
g_1 & 0 & \dots & 0\\
0 & g_2 & \dots & 0\\
\vdots & \vdots & \ddots & \vdots\\
0 & 0 & \dots & g_N
\end{array}\right], \quad \left\vert g_i\right\vert<1.$$

Such a matrix is stable because $\Vert\mathbf{Q}{\bm \Gamma}\Vert \le \Vert\mathbf{Q}\Vert\,\Vert{\bm \Gamma}\Vert = \max_i\vert g_i\vert < 1$.
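The sketch below builds $\mathbf{A} = \mathbf{Q}{\bm \Gamma}$ for $N = 4$, using (as one convenient, hypothetical choice of orthogonal $\mathbf{Q}$) the Householder reflection $\mathbf{I} - \frac{2}{N}\mathbf{1}\mathbf{1}^T$ and made-up gains $g_i$. Because $\mathbf{A}^T\mathbf{A} = {\bm \Gamma}\mathbf{Q}^T\mathbf{Q}{\bm \Gamma} = {\bm \Gamma}^2$, the code checks that the Gram matrix is diagonal with entries $g_i^2$, which makes the spectral norm exactly $\max_i\vert g_i\vert$. The helper names are illustrative.

```python
def householder(n):
    """Q = I - (2/n) * ones(n, n): a real orthogonal N x N matrix (one convenient choice)."""
    return [[(1.0 if i == j else 0.0) - 2.0 / n for j in range(n)] for i in range(n)]


def feedback_matrix(Q, gains):
    """A = Q @ diag(gains): column j of Q is scaled by g_j."""
    return [[q * g for q, g in zip(row, gains)] for row in Q]


def gram(A):
    """A^T A for a real square matrix A."""
    n = len(A)
    return [[sum(A[k][i] * A[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


gains = [0.9, 0.8, 0.7, 0.95]          # hypothetical gains, all with |g_i| < 1
A = feedback_matrix(householder(4), gains)
G = gram(A)                             # equals diag(g_i^2) because Q^T Q = I
spectral = max(abs(g) for g in gains)   # hence ||A||_2 = max |g_i| < 1
print(spectral)
```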

An alternative stability proof may be based on showing that an FDN is a special case of a passive digital waveguide network (derived in §C.15). This analysis reveals that the FDN is lossless if and only if the feedback matrix $\mathbf{A}$ has unit-modulus eigenvalues and linearly independent eigenvectors.
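A small worked example (our own, not part of the cited analysis, and taking all delay lengths equal to 1 so the FDN reduces to $\mathbf{x}(n+1) = \mathbf{A}\mathbf{x}(n)$) shows why both conditions are needed. A 2×2 rotation matrix is orthogonal and satisfies both:

$$\mathbf{R}=\left[\begin{array}{cc}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{array}\right],\qquad \lambda_{1,2}=e^{\pm j\theta},\qquad \mathbf{v}_{1,2}=\left[\begin{array}{c}1\\ \mp j\end{array}\right],$$

so $\vert\lambda_{1,2}\vert = 1$ and the two eigenvectors are linearly independent. By contrast,

$$\mathbf{J}=\left[\begin{array}{cc}1 & 1\\ 0 & 1\end{array}\right],\qquad \mathbf{J}^n=\left[\begin{array}{cc}1 & n\\ 0 & 1\end{array}\right]$$

has the double eigenvalue $\lambda = 1$ but only one independent eigenvector, $[1, 0]^T$, and $\mathbf{J}^n[0, 1]^T = [n, 1]^T$ grows without bound even though every eigenvalue has unit modulus.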
