Fourier Theorems for the DTFT

This section states and proves selected Fourier theorems for the DTFT. A more complete list for the DFT case is given in [264].3.4Since this material was originally part of an appendix, it is relatively dry reading. Feel free to skip to the next chapter and refer back as desired when a theorem is invoked.

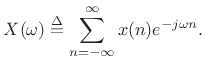

As introduced in §2.1 above, the Discrete-Time Fourier Transform (DTFT) may be defined as

|

(3.8) |

We say that

Linearity of the DTFT

| (3.9) |

or

| (3.10) |

where

Proof:

We have

![\begin{eqnarray*}

\hbox{\sc DTFT}_\omega(\alpha x_1 + \beta x_2)

& \isdef & \sum_{n=-\infty}^{\infty}[\alpha x_1(n) + \beta x_2(n)]e^{-j\omega n}\\

&=& \alpha\sum_{n=-\infty}^{\infty}x_1(n)e^{-j\omega n} + \beta \sum_{n=-\infty}^{\infty}x_2(n)e^{-j\omega n}\\

&\isdef & \alpha X_1(\omega) + \beta X_2(\omega)

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/sasp2/img126.png)

One way to describe the linearity property is to observe that the Fourier transform ``commutes with mixing.''

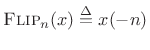

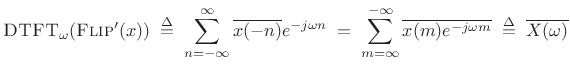

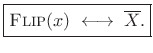

Time Reversal

For any complex signal ![]() ,

,

![]() , we have

, we have

| (3.11) |

where

.

.

Proof:

![\begin{eqnarray*}

\hbox{\sc DTFT}_\omega(\hbox{\sc Flip}(x))

&\isdef & \sum_{n=-\infty}^{\infty} x(-n)e^{-j\omega n}

\eqsp \sum_{m=\infty}^{-\infty} x(m)e^{-j(-\omega) m}

\eqsp X(-\omega) \\ [5pt]

&\isdef & \hbox{\sc Flip}_\omega(X)

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/sasp2/img130.png)

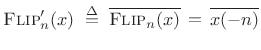

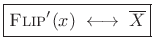

Arguably,

![]() should include complex conjugation. Let

should include complex conjugation. Let

|

(3.12) |

denote such a definition. Then in this case we have

|

(3.13) |

Proof:

|

(3.14) |

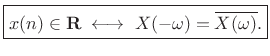

In the typical special case of real signals (

![]() ), we have

), we have

![]() so that

so that

|

(3.15) |

That is, time-reversing a real signal conjugates its spectrum.

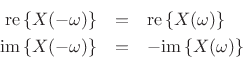

Symmetry of the DTFT for Real Signals

Most (if not all) of the signals we deal with in practice are real signals. Here we note some spectral symmetries associated with real signals.

DTFT of Real Signals

The previous section established that the spectrum ![]() of every real

signal

of every real

signal ![]() satisfies

satisfies

| (3.16) |

I.e.,

|

(3.17) |

In other terms, if a signal

- The real part is even, while the imaginary part is odd:

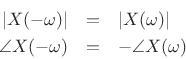

- The magnitude is even, while the phase is odd:

Real Even (or Odd) Signals

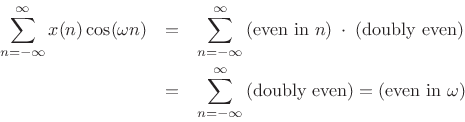

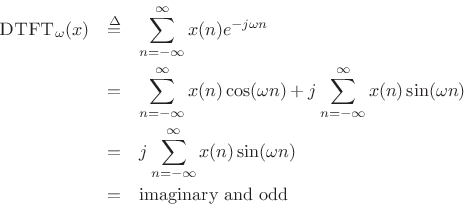

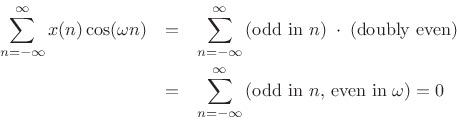

If a signal is even in addition to being real, then its DTFT is also real and even. This follows immediately from the Hermitian symmetry of real signals, and the fact that the DTFT of any even signal is real:

![\begin{eqnarray*}

\hbox{\sc DTFT}_\omega(x)

& \isdef & \sum_{n=-\infty}^{\infty}x(n) e^{-j\omega n}\\

& = & \sum_{n=-\infty}^{\infty}x(n) \left[\cos(\omega n) + j\sin(\omega n)\right]\\

& = & \sum_{n=-\infty}^{\infty}x(n) \cos(\omega n) + j\sum_{n=-\infty}^{\infty}x(n)\sin(\omega n)\\

& = & \sum_{n=-\infty}^{\infty}x(n) \cos(\omega n)\\

& = & \hbox{real and even}

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/sasp2/img142.png)

This is true since cosine is even, sine is odd, even times even is even, even times odd is odd, and the sum over all samples of an odd signal is zero. I.e.,

and

If ![]() is real and even, the following are true:

is real and even, the following are true:

![\begin{eqnarray*}

\hbox{\sc Flip}(x) & = & x \qquad \hbox{($x(-n)=x(n)$)}\\

\overline{x} & = & x\\ [5pt]

\hbox{\sc Flip}(X) & = & X\\

\overline{X} & = & X\\

\angle X(\omega) & =& 0 \hbox{ or } \pi

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/sasp2/img145.png)

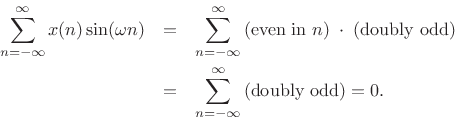

Similarly, if a signal is odd and real, then its DTFT is odd and purely imaginary. This follows from Hermitian symmetry for real signals, and the fact that the DTFT of any odd signal is imaginary.

where we used the fact that

and

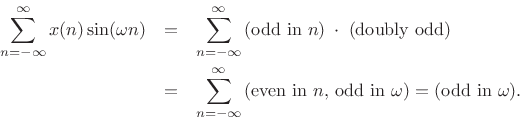

Shift Theorem for the DTFT

We define the shift operator for sampled signals ![]() by

by

|

(3.18) |

where

The shift theorem states3.5

|

(3.19) |

or, in operator notation,

| (3.20) |

Proof:

![\begin{eqnarray*}

\hbox{\sc DTFT}_\omega[\hbox{\sc Shift}_l(x)] &\isdef & \sum_{n=-\infty}^{\infty}x(n-l) e^{-j \omega n} \\

&=& \sum_{m=-\infty}^{\infty} x(m) e^{-j \omega (m+l)}

\qquad(m\isdef n-l) \\

&=& \sum_{m=-\infty}^{\infty}x(m) e^{-j \omega m} e^{-j \omega l} \\

&=& e^{-j \omega l} \sum_{m=-\infty}^{\infty}x(m) e^{-j \omega m} \\

&\isdef & e^{-j \omega l} X(\omega)

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/sasp2/img158.png)

Note that

![]() is a linear phase term, so called

because it is a linear function of frequency with slope equal to

is a linear phase term, so called

because it is a linear function of frequency with slope equal to ![]() :

:

| (3.21) |

The shift theorem gives us that multiplying a spectrum

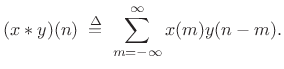

Convolution Theorem for the DTFT

The convolution of discrete-time signals ![]() and

and ![]() is defined

as

is defined

as

|

(3.22) |

This is sometimes called acyclic convolution to distinguish it from the cyclic convolution used for length

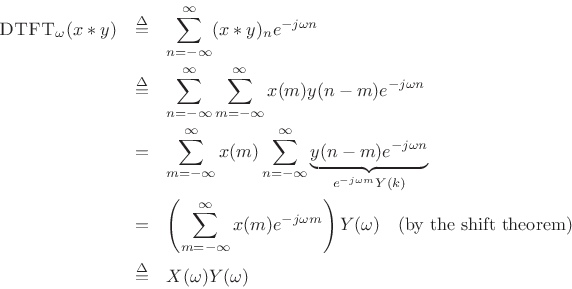

The convolution theorem is then

| (3.23) |

That is, convolution in the time domain corresponds to pointwise multiplication in the frequency domain.

Proof: The result follows immediately from interchanging the order

of summations associated with the convolution and DTFT:

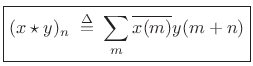

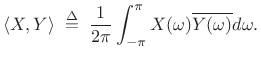

Correlation Theorem for the DTFT

We define the correlation of discrete-time signals ![]() and

and ![]() by

by

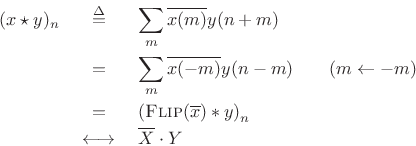

The correlation theorem for DTFTs is then

Proof:

where the last step follows from the convolution theorem of

§2.3.5 and the symmetry result

![]() of §2.3.2.

of §2.3.2.

Autocorrelation

The autocorrelation of a signal ![]() is simply the

cross-correlation of

is simply the

cross-correlation of ![]() with itself:

with itself:

|

(3.24) |

From the correlation theorem, we have

Note that this definition of autocorrelation is appropriate for signals having finite support (nonzero over a finite number of samples). For infinite-energy (but finite-power) signals, such as stationary noise processes, we define the sample autocorrelation to include a normalization suitable for this case (see Chapter 6 and Appendix C).

From the autocorrelation theorem we have that a digital-filter

impulse-response ![]() is that of a lossless allpass filter

[263] if and only if

is that of a lossless allpass filter

[263] if and only if

![]() .

In other words, the autocorrelation of the impulse-response of every

allpass filter is impulsive.

.

In other words, the autocorrelation of the impulse-response of every

allpass filter is impulsive.

Power Theorem for the DTFT

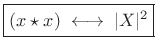

The inner product of two signals may be defined in the time domain by [264]

|

(3.25) |

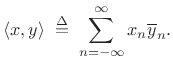

The inner product of two spectra may be defined as

|

(3.26) |

Note that the frequency-domain inner product includes a normalization factor while the time-domain definition does not.

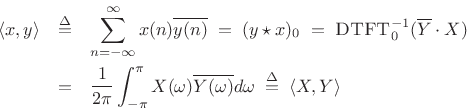

Using inner-product notation, the power theorem (or Parseval's theorem [202]) for DTFTs can be stated as follows:

| (3.27) |

That is, the inner product of two signals in the time domain equals the inner product of their respective spectra (a complex scalar in general).

When we consider the inner product of a signal with itself, we have the special case known as the energy theorem (or Rayleigh's energy theorem):

| (3.28) |

where

Proof:

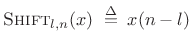

Stretch Operator

We define the stretch operator in the time domain by

![$\displaystyle \hbox{\sc Stretch}_{L,n}(x) \isdefs \left\{\begin{array}{ll} x\left(\frac{n}{L}\right), & n = 0\;(\hbox{\sc mod}\ L) \\ [5pt] 0, & \hbox{otherwise} \\ \end{array} \right..$](http://www.dsprelated.com/josimages_new/sasp2/img183.png) |

(3.29) |

In other terms, we stretch a sampled signal by the factor

In the literature on multirate filter banks (see Chapter 11), the

stretch operator is typically called instead the upsampling

operator. That is, stretching a signal by the factor of ![]() is called

upsampling the signal by the factor

is called

upsampling the signal by the factor ![]() . (See §11.1.1 for

the graphical symbol (

. (See §11.1.1 for

the graphical symbol (

![]() ) and associated discussion.) The

term ``stretch'' is preferred in this book because ``upsampling''

is easily confused with ``increasing the sampling rate''; resampling a

signal to a higher sampling rate is conceptually implemented by a

stretch operation followed by an ideal lowpass filter which moves the

inserted zeros to their properly interpolated values.

) and associated discussion.) The

term ``stretch'' is preferred in this book because ``upsampling''

is easily confused with ``increasing the sampling rate''; resampling a

signal to a higher sampling rate is conceptually implemented by a

stretch operation followed by an ideal lowpass filter which moves the

inserted zeros to their properly interpolated values.

Note that we could also call the stretch operator the scaling operator, to unify the terminology in the discrete-time case with that of the continuous-time case (§2.4.1 below).

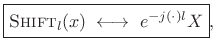

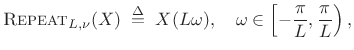

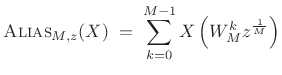

Repeat (Scaling) Operator

We define the repeat operator in the frequency domain as

a scaling of frequency axis by some integer factor ![]() :

:

|

(3.30) |

where

The repeat operator maps the entire unit circle (taken as ![]() to

to

![]() ) to a segment of itself

) to a segment of itself

![]() , centered about

, centered about

![]() , and repeated

, and repeated ![]() times. This is illustrated in Fig.2.2

for

times. This is illustrated in Fig.2.2

for ![]() .

.

Since the frequency axis is continuous and ![]() -periodic for DTFTs,

the repeat operator is precisely equivalent to a scaling operator for

the Fourier transform case (§B.4). We call it ``repeat''

rather than ``scale'' because we are restricting the scale factor to

positive integers, and because the name ``repeat'' describes more

vividly what happens to a periodic spectrum that is compressively

frequency-scaled over the unit circle by an integer factor.

-periodic for DTFTs,

the repeat operator is precisely equivalent to a scaling operator for

the Fourier transform case (§B.4). We call it ``repeat''

rather than ``scale'' because we are restricting the scale factor to

positive integers, and because the name ``repeat'' describes more

vividly what happens to a periodic spectrum that is compressively

frequency-scaled over the unit circle by an integer factor.

Stretch/Repeat (Scaling) Theorem

Using these definitions, we can compactly state the stretch theorem:

| (3.31) |

Proof:

![\begin{eqnarray*}

\hbox{\sc DTFT}_\omega[\hbox{\sc Stretch}_L(x)]

&\isdef & \sum_{n=-\infty}^{\infty}\hbox{\sc Stretch}_{L,n}(x)e^{-j\omega n}\\

&=& \sum_{m=-\infty}^{\infty}x(m)e^{-j\omega m L}\qquad \hbox{($m\isdef n/L$)}\\

&\isdef & X(\omega L)

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/sasp2/img199.png)

As ![]() traverses the interval

traverses the interval

![]() ,

,

![]() traverses the unit circle

traverses the unit circle ![]() times, thus implementing the repeat

operation on the unit circle. Note also that when

times, thus implementing the repeat

operation on the unit circle. Note also that when ![]() , we have

, we have

![]() , so that dc always maps to dc. At half the sampling

rate

, so that dc always maps to dc. At half the sampling

rate

![]() , on the other hand, after the mapping, we may have

either

, on the other hand, after the mapping, we may have

either

![]() (

(![]() odd), or

odd), or ![]() (

(![]() even), where

even), where

![]() .

.

The stretch theorem makes it clear how to do

ideal sampling-rate conversion for integer upsampling ratios ![]() :

We first stretch the signal by the factor

:

We first stretch the signal by the factor ![]() (introducing

(introducing ![]() zeros

between each pair of samples), followed by an ideal lowpass

filter cutting off at

zeros

between each pair of samples), followed by an ideal lowpass

filter cutting off at ![]() . That is, the filter has a gain of 1

for

. That is, the filter has a gain of 1

for

![]() , and a gain of 0 for

, and a gain of 0 for

![]() . Such a system (if it were realizable) implements ideal bandlimited interpolation of the original signal by the factor

. Such a system (if it were realizable) implements ideal bandlimited interpolation of the original signal by the factor ![]() .

.

The stretch theorem is analogous to the scaling theorem for continuous Fourier transforms (introduced in §2.4.1 below).

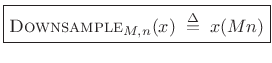

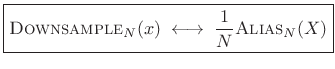

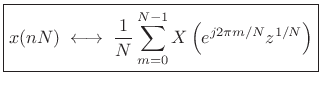

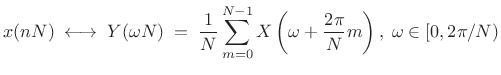

Downsampling and Aliasing

The downsampling operator

![]() selects every

selects every ![]() sample of a signal:

sample of a signal:

|

(3.32) |

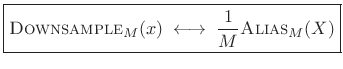

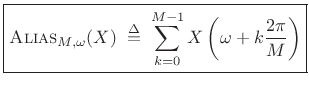

The aliasing theorem states that downsampling in time corresponds to aliasing in the frequency domain:

|

(3.33) |

where the

|

(3.34) |

for

In z transform notation, the

![]() operator can be expressed as

[287]

operator can be expressed as

[287]

|

(3.35) |

where

![$\displaystyle \hbox{\sc Alias}_{M,\omega}(X) \eqsp \sum_{k=0}^{M-1} X\left[e^{j\left(\frac{\omega}{M} + k\frac{2\pi}{M}\right)}\right], \quad -\pi\leq \omega < \pi.$](http://www.dsprelated.com/josimages_new/sasp2/img219.png) |

(3.36) |

The frequency scaling corresponds to having a sampling interval of

The aliasing theorem makes it clear that, in order to downsample by

factor ![]() without aliasing, we must first lowpass-filter the spectrum

to

without aliasing, we must first lowpass-filter the spectrum

to

![]() . This filtering (when ideal) zeroes out the

spectral regions which alias upon downsampling.

. This filtering (when ideal) zeroes out the

spectral regions which alias upon downsampling.

Note that any rational sampling-rate conversion factor

![]() may be implemented as an upsampling by the factor

may be implemented as an upsampling by the factor ![]() followed by

downsampling by the factor

followed by

downsampling by the factor ![]() [50,287].

Conceptually, a stretch-by-

[50,287].

Conceptually, a stretch-by-![]() is followed by a lowpass filter cutting

off at

is followed by a lowpass filter cutting

off at

![]() , followed by

downsample-by-

, followed by

downsample-by-![]() , i.e.,

, i.e.,

| (3.37) |

In practice, there are more efficient algorithms for sampling-rate conversion [270,135,78] based on a more direct approach to bandlimited interpolation.

Proof of Aliasing Theorem

To show:

or

From the DFT case [264], we know this is true when ![]() and

and ![]() are each complex sequences of length

are each complex sequences of length ![]() , in which case

, in which case ![]() and

and ![]() are length

are length ![]() . Thus,

. Thus,

|

(3.38) |

where we have chosen to keep frequency samples

|

(3.39) |

Replacing

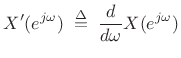

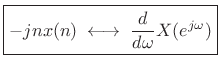

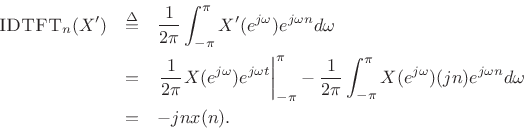

Differentiation Theorem Dual

Theorem: Let ![]() denote a signal with DTFT

denote a signal with DTFT

![]() , and let

, and let

|

(3.40) |

denote the derivative of

where

Proof:

Using integration by parts, we obtain

An alternate method of proof is given in §B.3.

Corollary: Perhaps a cleaner statement is as follows:

This completes our coverage of selected DTFT theorems. The next section adds some especially useful FT theorems having no precise counterpart in the DTFT (discrete-time) case.

Next Section:

Continuous-Time Fourier Theorems

Previous Section:

Fourier Transform (FT) and Inverse

![\includegraphics[width=4in]{eps/stretch2}](http://www.dsprelated.com/josimages_new/sasp2/img186.png)

![\begin{psfrags}

% latex2html id marker 7156\psfrag{w}{\Large $\omega$}\begin{figure}[htbp]

\includegraphics[width=\twidth]{eps/repeat2}

\caption{Illustration of the repeat operator.}

\end{figure}

\end{psfrags}](http://www.dsprelated.com/josimages_new/sasp2/img196.png)