Equivalence of Digital Waveguide and Finite Difference Schemes

It was shown in §C.4.3 that the digital waveguide (DW) model for the ideal vibrating string performs the same ``state transitions'' as the more standard finite-difference time-domain (FDTD) scheme (also known as the ``leapfrog'' recursion). This appendix, initially published in [444], further establishes that the solution spaces of the two schemes are isomorphic. That is, a linear, one-to-one transformation is derived which converts any point in the state-space of one scheme to a unique point in the other scheme. Since boundary conditions and initial values are more intuitively transparent in the DW formulation, the simple means of converting back and forth can be useful in initializing and constructing boundaries for FDTD simulations, as we will see.

Introduction

The digital waveguide (DW) method has been used for many years to provide highly efficient algorithms for musical sound synthesis based on physical models [433,447,396]. For a much longer time, finite-difference time-domain (FDTD) schemes have been used to simulate more general situations, usually at higher cost [550,392,74,77,45,397]. In recent years, there has been interest in relating these methods to each other [123] and in combining them for more general simulations. For example, modular hybrid methods have been devised which interconnect DW and FDTD simulations by means of a KW converter [223,226]. The basic idea of the KW converter is to convert the ``Kirchhoff variables'' of the FDTD, such as string displacement, velocity, etc., to ``wave variables'' of the DW. The W variables are regarded as the traveling-wave components of the K variables.

In this appendix, we present an alternative to the KW converter. Instead of converting K variables to W variables, or vice versa, in the time domain, conversion formulas are derived with respect to the current state as a function of spatial coordinates. As a result, it becomes simple to convert any instantaneous state configuration from FDTD to DW form, or vice versa. Thus, instead of providing the necessary time-domain filter to implement a KW converter converting traveling-wave components to physical displacement of a vibrating string, say, one may alternatively set the displacement variables instantaneously to the values corresponding to a given set of traveling-wave components in the string model. Another benefit of the formulation is an exact physical interpretation of arbitrary initial conditions and excitations in the K-variable FDTD method. Since the DW formulation is exact in principle (though bandlimited), while the FDTD is approximate, even in principle, it can be argued that the true physical interpretation of the FDTD method is that given by the DW method. Since both methods generate the same evolution of state from a common starting point, they may only differ in computational expense, numerical sensitivity, and in the details of supplying initial conditions and boundary conditions.

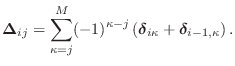

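The wave equation for the ideal vibrating string, reviewed in §C.1, can be written as

$$ K y'' = \epsilon\, \ddot{y} \tag{E.1} $$

where $K$ is the string tension, $\epsilon$ is the linear mass density, $y = y(t,x)$ is the string displacement, $\ddot{y}$ denotes $\partial^2 y/\partial t^2$, and $y''$ denotes $\partial^2 y/\partial x^2$.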

In the following two subsections, we briefly recall finite difference and digital waveguide models for the ideal vibrating string.

Finite Difference Time Domain (FDTD) Scheme

As discussed in §C.2, we may use centered finite difference approximations (FDA) for the second-order partial derivatives in the wave equation to obtain a finite difference scheme for numerically integrating the ideal wave equation [481,311]:

$$
\begin{aligned}
\ddot{y}(t,x) &\approx \frac{y(t+T,x) - 2y(t,x) + y(t-T,x)}{T^2} \\
y''(t,x) &\approx \frac{y(t,x+X) - 2y(t,x) + y(t,x-X)}{X^2}
\end{aligned}
\tag{E.2}
$$

where $T$ is the time sampling interval and $X$ is the spatial sampling interval. Substituting the FDA into the wave equation, choosing $X = cT$, where $c = \sqrt{K/\epsilon}$ is sound speed (normalized to $c=1$ below), and sampling at times $t = nT$ and positions $x = mX$, we obtain the following explicit finite difference scheme for the string displacement:

$$ y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m) \tag{E.3} $$

where the sampling intervals $T$ and $X$ have been normalized to 1.
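As a concrete illustration of the leapfrog recursion with the sampling intervals normalized to 1, the following Python sketch advances a short window of the string by one time step. The function name `fdtd_step` and the treatment of samples outside the window as zero are assumptions made for this example only.

```python
def fdtd_step(y_prev, y_curr):
    """Return y(n+1, m) = y(n, m+1) + y(n, m-1) - y(n-1, m) for each m in the window.

    y_prev holds y(n-1, m) and y_curr holds y(n, m). Samples outside the
    window are taken to be zero (an assumption of this sketch).
    """
    padded = [0] + list(y_curr) + [0]  # padded[m + 1] == y_curr[m]
    return [padded[m] + padded[m + 2] - y_prev[m] for m in range(len(y_curr))]


# A triangular pulse that moved one sample to the left between times n-1 and n.
y_prev = [0, 0, 1, 2, 1, 0, 0]
y_curr = [0, 1, 2, 1, 0, 0, 0]
y_next = fdtd_step(y_prev, y_curr)
print(y_next)
```

In this example the pulse moves one more sample to the left, which is the behavior expected of a purely left-going wave.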

Digital Waveguide (DW) Scheme

We now derive the digital waveguide formulation by sampling the traveling-wave solution to the wave equation. It is easily checked that the lossless 1D wave equation $Ky''=\epsilon\ddot{y}$ is solved by any string shape $y$ which travels to the left or right with speed $c = \sqrt{K/\epsilon}$ [100]. Denote right-going traveling waves in general by $y_r(t-x/c)$ and left-going traveling waves by $y_l(t+x/c)$, where $y_r$ and $y_l$ are assumed twice-differentiable. Then, as is well known, the general class of solutions to the lossless, one-dimensional, second-order wave equation can be expressed as

$$ y(t,x) = y_r(t - x/c) + y_l(t + x/c). \tag{E.4} $$

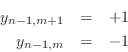

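Sampling these traveling-wave solutions yields

$$
\begin{aligned}
y(nT,mX) &= y_r(nT - mX/c) + y_l(nT + mX/c) \\
&= y_r[(n-m)T] + y_l[(n+m)T] \\
&\triangleq y^{+}(n-m) + y^{-}(n+m)
\end{aligned}
\tag{E.5}
$$

where a ``$+$'' superscript denotes a traveling-wave component propagating to the right, and ``$-$'' denotes propagation to the left.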

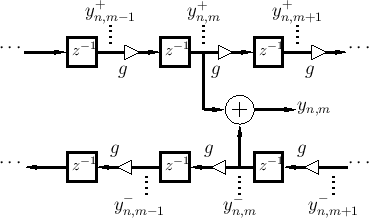

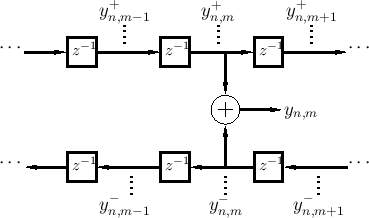

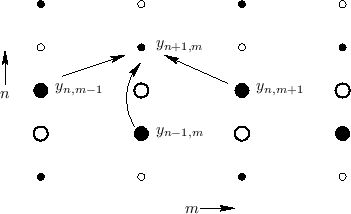

Figure E.1 (initially given as Fig.C.3) shows a signal flow diagram for the computational model of Eq.(E.5), termed a digital waveguide model (developed in detail in Appendix C). Recall that, by the sampling theorem, it is an exact model so long as the initial conditions and any ongoing additive excitations are bandlimited to less than half the temporal sampling rate $f_s = 1/T$ [451, Appendix G]. Recall also that the position along the string, $x_m = mX = mcT$ meters, is laid out from left to right in the diagram, giving a physical interpretation to the horizontal direction in the diagram, even though spatial samples have been eliminated from explicit consideration. (The arguments of $y^{+}$ and $y^{-}$ have physical units of time.)

The left- and right-going traveling wave components are summed to produce a physical output according to

$$ y(nT,mX) = y^{+}(n-m) + y^{-}(n+m). \tag{E.6} $$

In Fig.E.1, ``transverse displacement outputs'' have been arbitrarily placed at $x=0$ and $x=3X$.
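The digital waveguide computation can be sketched as a pair of delay lines that shift in opposite directions, with the physical displacement formed as their sum. The names `dw_step` and `dw_output`, and the choice to shift zeros in at the ends of a finite window, are assumptions of this illustration rather than part of the model as published.

```python
def dw_step(right, left):
    """Advance both delay lines by one sample.

    right[m] holds y+(n - m) and left[m] holds y-(n + m). Zeros are shifted
    in at the ends (an assumption standing in for an infinitely long string).
    """
    new_right = [0] + right[:-1]  # right-going samples move toward larger m
    new_left = left[1:] + [0]     # left-going samples move toward smaller m
    return new_right, new_left


def dw_output(right, left):
    """Physical displacement y(n, m) = y+(n - m) + y-(n + m)."""
    return [r + l for r, l in zip(right, left)]


right = [0, 0, 1, 0, 0]
left = [0, 0, 1, 0, 0]
y0 = dw_output(right, left)
right, left = dw_step(right, left)
y1 = dw_output(right, left)
print(y0, y1)
```

No arithmetic is needed to propagate the waves; additions occur only where a physical output is observed.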

FDTD and DW Equivalence

The FDTD and DW recursions both compute time updates by forming fixed linear combinations of past state. As a result, each can be described in ``state-space form'' [449, Appendix G] by a constant matrix operator, the ``state transition matrix'', which multiplies the state vector at the current time to produce the state vector for the next time step. The FDTD operator propagates K variables while the DW operator propagates W variables. We may show equivalence by (1) defining a one-to-one transformation which will convert K variables to W variables or vice versa, and (2) showing that given any common initial state for both schemes, the state transition matrices compute the same next state in both cases.

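The next section shows that the linear transformation from W to K variables,

$$ y(n,m) = y^{+}(n-m) + y^{-}(n+m), \tag{E.7} $$

for all $m$, sets up a one-to-one linear transformation between the K and W variables. Assuming this holds, it only remains to be shown that the DW and FDTD schemes preserve mapping Eq.(E.7) after a state transition from one time to the next.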

From Fig.E.1, it is clear that the DW scheme preserves mapping Eq.(E.7) by definition. For the FDTD scheme, we expand the right-hand side of Eq.(E.3) using Eq.(E.7) and verify that the left-hand side also satisfies the map, i.e., that $y(n+1,m) = y^{+}(n+1-m) + y^{-}(n+1+m)$ holds:

$$
\begin{aligned}
y(n+1,m) &= y(n,m+1) + y(n,m-1) - y(n-1,m) \\
&= y^{+}(n-m-1) + y^{-}(n+m+1) + y^{+}(n-m+1) + y^{-}(n+m-1) \\
&\quad - y^{+}(n-m-1) - y^{-}(n+m-1) \\
&= y^{-}(n+m+1) + y^{+}(n-m+1) \\
&= y^{+}[(n+1)-m] + y^{-}[(n+1)+m] \\
&\triangleq y(n+1,m)
\end{aligned}
$$

Since the DW method propagates sampled (bandlimited) solutions to the ideal wave equation without error, it follows that the FDTD method does the same thing, despite the relatively crude approximations made in Eq.(E.2). In particular, it is known that the FDA introduces artificial damping when applied to first order partial derivatives arising in lumped, mass-spring systems [447].
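The equivalence of the two state transitions can also be checked numerically. The sketch below initializes arbitrary integer traveling-wave components, derives the two K-variable frames the leapfrog recursion needs from the W-to-K map, and then compares the two schemes step by step. All function names, the window length, and the test data are assumptions chosen for illustration; the window is wide enough that nothing reaches its ends during the run.

```python
def dw_step(right, left):
    """Shift the right-going line toward larger m and the left-going line toward smaller m."""
    return [0] + right[:-1], left[1:] + [0]


def dw_output(right, left):
    """K variables from W variables: y(n, m) = y+(n - m) + y-(n + m)."""
    return [r + l for r, l in zip(right, left)]


def fdtd_step(y_prev, y_curr):
    """Leapfrog update y(n+1, m) = y(n, m+1) + y(n, m-1) - y(n-1, m), zero outside the window."""
    padded = [0] + list(y_curr) + [0]
    return [padded[m] + padded[m + 2] - y_prev[m] for m in range(len(y_curr))]


M = 40
right = [0] * M
left = [0] * M
for m in range(15, 26):            # arbitrary integer data in the middle of the window
    right[m] = (7 * m) % 5 - 2
    left[m] = (3 * m) % 7 - 3

y_prev = dw_output(right, left)    # K variables at the first time step
right, left = dw_step(right, left)
y_curr = dw_output(right, left)    # K variables at the second time step

all_match = True
for _ in range(8):
    y_next = fdtd_step(y_prev, y_curr)
    right, left = dw_step(right, left)
    all_match = all_match and (y_next == dw_output(right, left))
    y_prev, y_curr = y_curr, y_next
print(all_match)
```

Integer data are used so that the comparison is exact rather than subject to floating-point rounding.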

The equivalence of the DW and FDTD state transitions extends readily to the DW mesh [518,447], which is essentially a lattice-work of DWs for simulating membranes and volumes. The equivalence is more important in higher dimensions because the FDTD formulation requires fewer computations per node than the DW approach in higher dimensions (see [33] for some quantitative comparisons).

Even in one dimension, the DW and finite-difference methods have unique advantages in particular situations [223], and as a result they are often combined to form a hybrid traveling-wave/physical-variable simulation [351,352,222,124,123,224,263,33].

State Transformations

In previous work, time-domain adaptors (digital filters) converting between K variables and W variables have been devised [223]. In this section, an alternative approach is proposed. Mapping Eq.(E.7) gives us an immediate conversion from W to K state variables, so all we need now is the inverse map for any time $n$. This is complicated by the fact that non-local spatial dependencies can go indefinitely in one direction along the string, as we will see below. We will proceed by first writing down the conversion from W to K variables in matrix form, which is easy to do, and then invert that matrix. For simplicity, we will consider the case of an infinitely long string.

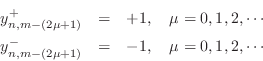

To initialize a K variable simulation for starting at time $n+1$, we need initial spatial samples at all positions $m$ for two successive times $n-1$ and $n$. From this state specification, the FDTD scheme Eq.(E.3) can compute $y(n+1,m)$ for all $m$, and so on for increasing $n$. In the DW model, all state variables are defined as belonging to the same time $n$, as shown in Fig.E.2.

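From Eq.(E.6), and referring to the notation defined in Fig.E.2, we may write the conversion from W to K variables as

$$
\begin{aligned}
y_{n,m+1} &= y^{+}_{n,m+1} + y^{-}_{n,m+1} \\
y_{n,m-1} &= y^{+}_{n,m-1} + y^{-}_{n,m-1} \\
y_{n-1,m} &= y^{+}_{n-1,m} + y^{-}_{n-1,m} = y^{+}_{n,m+1} + y^{-}_{n,m-1}
\end{aligned}
\tag{E.8}
$$

where $y^{+}_{n,m} \triangleq y^{+}(n-m)$ and $y^{-}_{n,m} \triangleq y^{-}(n+m)$, and where the last equality follows from the traveling-wave behavior (see Fig.E.2).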

Figure E.3 shows the so-called ``stencil'' of the FDTD scheme. The larger circles indicate the state at time $n$ which can be used to compute the state at time $n+1$. The filled and unfilled circles indicate membership in one of two interleaved grids [55]. To see why there are two interleaved grids, note that when $m$ is even, the update for $y_{n+1,m}$ depends only on odd $m$ from time $n$ and even $m$ from time $n-1$. Since the two W components of $y_{n-1,m}$ are converted to two W components at time $n$ in Eq.(E.8), we have that the update for $y_{n+1,m}$ depends only on W components from time $n$ and positions $m\pm 1$. Moving to the next position update, for $y_{n+1,m+1}$, the state used is independent of that used for $y_{n+1,m}$, and the W components used are from positions $m$ and $m+2$. As a result of these observations, we see that we may write the state-variable transformation separately for even and odd $m$, e.g.,

$$
\begin{bmatrix}
\vdots \\ y_{n,m-1} \\ y_{n-1,m} \\ y_{n,m+1} \\ y_{n-1,m+2} \\ y_{n,m+3} \\ \vdots
\end{bmatrix}
=
\begin{bmatrix}
\ddots & \vdots & \vdots & \vdots & \vdots & \vdots & \\
\cdots & 1 & 1 & 0 & 0 & 0 & \cdots \\
\cdots & 0 & 1 & 1 & 0 & 0 & \cdots \\
\cdots & 0 & 0 & 1 & 1 & 0 & \cdots \\
\cdots & 0 & 0 & 0 & 1 & 1 & \cdots \\
\cdots & 0 & 0 & 0 & 0 & 1 & \cdots \\
 & \vdots & \vdots & \vdots & \vdots & \vdots & \ddots
\end{bmatrix}
\begin{bmatrix}
\vdots \\ y^{+}_{n,m-1} \\ y^{-}_{n,m-1} \\ y^{+}_{n,m+1} \\ y^{-}_{n,m+1} \\ y^{+}_{n,m+3} \\ \vdots
\end{bmatrix}
\tag{E.9}
$$

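Denote the linear transformation operator by $\mathbf{T}$ and the K and W state vectors by $\underline{x}_K$ and $\underline{x}_W$, respectively. Then the transformation can be restated as

$$ \underline{x}_K = \mathbf{T}\,\underline{x}_W. \tag{E.10} $$

The operator $\mathbf{T}$ can be recognized as the Toeplitz operator associated with the linear, shift-invariant filter $H(z) = 1 + z^{-1}$. Its inverse is therefore the Toeplitz operator associated with $H^{-1}(z) = 1/(1+z^{-1}) = 1 - z^{-1} + z^{-2} - \cdots$, which gives the inverted transformation

$$
\begin{bmatrix}
\vdots \\ y^{+}_{n,m-1} \\ y^{-}_{n,m-1} \\ y^{+}_{n,m+1} \\ y^{-}_{n,m+1} \\ y^{+}_{n,m+3} \\ \vdots
\end{bmatrix}
=
\begin{bmatrix}
\ddots & \vdots & \vdots & \vdots & \vdots & \vdots & \\
\cdots & 1 & -1 & 1 & -1 & 1 & \cdots \\
\cdots & 0 & 1 & -1 & 1 & -1 & \cdots \\
\cdots & 0 & 0 & 1 & -1 & 1 & \cdots \\
\cdots & 0 & 0 & 0 & 1 & -1 & \cdots \\
\cdots & 0 & 0 & 0 & 0 & 1 & \cdots \\
 & \vdots & \vdots & \vdots & \vdots & \vdots & \ddots
\end{bmatrix}
\begin{bmatrix}
\vdots \\ y_{n,m-1} \\ y_{n-1,m} \\ y_{n,m+1} \\ y_{n-1,m+2} \\ y_{n,m+3} \\ \vdots
\end{bmatrix}
\tag{E.11}
$$

The case of the finite string is identical to that of the infinite string when the matrix $\mathbf{T}$ is simply cropped to a finite square size (leaving an isolated 1 in the lower right corner); in such cases, $\mathbf{T}^{-1}$ as given above is cropped to the same size, retaining its upper triangular structure.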
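For a finite (cropped) state vector, the conversion in both directions can be sketched without forming any matrix. The sketch assumes the interleaved state ordering in which each K entry is the sum of two adjacent W entries, so that the cropped W-to-K operator is bidiagonal with ones on the diagonal and superdiagonal and its inverse is upper triangular with alternating signs. The function names `w_to_k` and `k_to_w` are hypothetical.

```python
def w_to_k(x_w):
    """Cropped W-to-K map: each K entry is the sum of two adjacent W entries.

    The last entry has no right neighbor, matching the isolated 1 in the
    lower right corner of the cropped operator.
    """
    n = len(x_w)
    return [x_w[i] + (x_w[i + 1] if i + 1 < n else 0) for i in range(n)]


def k_to_w(x_k):
    """Cropped K-to-W map computed by back-substitution from the last entry.

    Unrolling the recursion gives x_w[i] = x_k[i] - x_k[i+1] + x_k[i+2] - ...,
    i.e. an upper triangular operator with alternating signs.
    """
    x_w = [0] * len(x_k)
    tail = 0
    for i in range(len(x_k) - 1, -1, -1):
        x_w[i] = x_k[i] - tail
        tail = x_w[i]
    return x_w


x_w = [3, -1, 4, 1, -5, 9]
x_k = w_to_k(x_w)
round_trip = k_to_w(x_k)
single = k_to_w([0, 0, 0, 1, 0])  # one isolated K variable
print(x_k, round_trip, single)
```

The last line shows the non-local dependence: a single nonzero K entry maps to alternating-sign W entries extending all the way to one end of the vector.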

Excitation Examples

Localized Displacement Excitations

Whenever two adjacent components of $\underline{x}_K$ are initialized with equal amplitude, only a single W variable is affected. For example, the initial conditions

$$ y_{n-1,m} = 1, \qquad y_{n,m-1} = 1 $$

(with all other K variables zero) will initialize only $y^{-}_{n,m-1}$, a solitary left-going pulse of amplitude 1 at time $n=0$, as can be seen from Eq.(E.11) by adding the leftmost columns explicitly written for $\mathbf{T}^{-1}$. Similarly, the initialization

$$ y_{n-1,m-2} = 1, \qquad y_{n,m-1} = 1 $$

gives rise to an isolated right-going pulse $y^{+}_{n,m-1}$, corresponding to the leftmost column of $\mathbf{T}^{-1}$ plus the first column on the left not explicitly written in Eq.(E.11). The superposition of these two examples corresponds to a physical impulsive excitation at time 0 and position $m-1$:

$$ y_{n-1,m-2} = 1, \qquad y_{n,m-1} = 2, \qquad y_{n-1,m} = 1 $$

Thus, the impulse starts out with amplitude 2 at time 0 and position $m-1$, and afterwards, impulses of amplitude 1 propagate away to the left and right along the string.

In summary, we see that to excite a single sample of displacement traveling in a single direction, we must excite equally a pair of adjacent columns in $\mathbf{T}^{-1}$. This corresponds to equally weighted excitation of K-variable pairs of the form $(y_{n,m}, y_{n-1,m\pm 1})$.

Note that these examples involved only one of the two interleaved computational grids. Shifting over an odd number of spatial samples to the left or right would involve the other grid, as would shifting time forward or backward an odd number of samples.
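The effect of equally weighted K-variable pairs can be observed directly in the leapfrog recursion. The sketch below runs two initializations: an equal pair at adjacent positions on successive time steps, and the symmetric superposition of two such pairs. The helper names, the window length, and the index `m = 6` are assumptions made for this illustration.

```python
def fdtd_step(y_prev, y_curr):
    """Leapfrog update y(n+1, m) = y(n, m+1) + y(n, m-1) - y(n-1, m), zero outside the window."""
    padded = [0] + list(y_curr) + [0]
    return [padded[i] + padded[i + 2] - y_prev[i] for i in range(len(y_curr))]


def run(y_prev, y_curr, steps):
    """Return the displacement frames for the next `steps` time steps."""
    frames = []
    for _ in range(steps):
        y_next = fdtd_step(y_prev, y_curr)
        frames.append(y_next)
        y_prev, y_curr = y_curr, y_next
    return frames


M, m = 11, 6

# Equal pair: y(n-1, m) = 1 and y(n, m-1) = 1.
y_prev = [0] * M
y_curr = [0] * M
y_prev[m] = 1
y_curr[m - 1] = 1
left_frames = run(y_prev, y_curr, 3)

# Superposition: y(n-1, m-2) = 1, y(n, m-1) = 2, y(n-1, m) = 1.
y_prev = [0] * M
y_curr = [0] * M
y_prev[m - 2] = 1
y_prev[m] = 1
y_curr[m - 1] = 2
both_frames = run(y_prev, y_curr, 3)

for frame in left_frames + both_frames:
    print(frame)
```

The first run produces a single unit pulse that moves one sample to the left per step; the second produces two unit pulses moving apart from position `m - 1`.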

Localized Velocity Excitations

Initial velocity excitations are straightforward in the DW paradigm, but can be less intuitive in the FDTD domain. It is well known that velocity in a displacement-wave DW simulation is determined by the difference of the right- and left-going waves [437]. Specifically, initial velocity waves $v^{\pm}$ can be computed from initial displacement waves $y^{\pm}$ by spatially differentiating $y^{\pm}$ to obtain traveling slope waves $y'^{\pm}$, multiplying by minus the tension $K$ to obtain force waves, and finally dividing by the wave impedance $R=\sqrt{K\epsilon}$ to obtain velocity waves:

$$
\begin{aligned}
v^{+} &= \frac{f^{+}}{R} = -c\,y'^{+} \\
v^{-} &= -\frac{f^{-}}{R} = c\,y'^{-}
\end{aligned}
\tag{E.13}
$$

where $f^{\pm} = -K y'^{\pm}$ are the force waves and $c = \sqrt{K/\epsilon}$ denotes sound speed. The physical velocity is the sum $v = v^{+} + v^{-} = -c\,(y'^{+} - y'^{-})$, so a nonzero initial velocity requires an asymmetry between the right- and left-going displacement waves.

We can see from Eq.(E.11) that such asymmetry can be caused by unequal weighting of ![]() and ![]() . For example, the initialization

corresponds to an impulse velocity excitation at position ![]() . In this case, both interleaved grids are excited.

More General Velocity Excitations

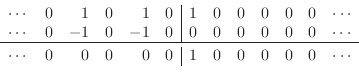

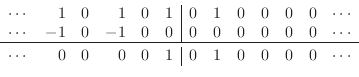

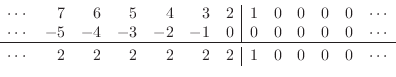

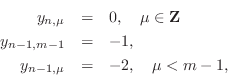

From Eq.(E.11), it is clear that initializing any single K variable $y_{n,m}$ corresponds to the initialization of an infinite number of W variables $y^{+}_{n,m}$ and $y^{-}_{n,m}$. That is, a single K variable $y_{n,m}$ corresponds to only a single column of $\mathbf{T}^{-1}$ for only one of the interleaved grids. For example, referring to Eq.(E.11), initializing the K variable ![]() to -1 at time ![]() (with all other $y_{n,m}$ initialized to 0) corresponds to the W-variable initialization

with all other W variables being initialized to zero. In view of earlier remarks, this corresponds to an impulsive velocity excitation on only one of the two subgrids. A schematic depiction from ![]() to ![]() of the W variables at time ![]() is as follows:

|

(E.14) |

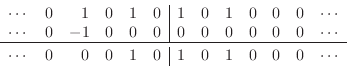

Below the solid line is the sum of the left- and right-going traveling-wave components, i.e., the corresponding K variables at time

|

(E.15) |

|

(E.16) |

|

(E.17) |

|

(E.18) |

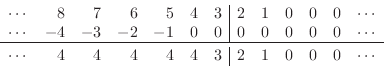

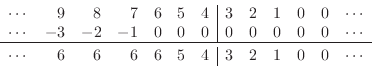

The sequence

Due to the independent interleaved subgrids in the FDTD algorithm, it is nearly always non-physical to excite only one of them, as the above example makes clear. It is analogous to illuminating only every other pixel in a digital image. However, joint excitation of both grids may be accomplished either by exciting adjacent spatial samples at the same time, or the same spatial sample at successive time instants.
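The ``every other pixel'' behavior is easy to reproduce. In the following sketch a single K variable is set at one time step, with the previous time step left at zero, and the leapfrog recursion is run for a few steps. The window length and the excited index are assumptions chosen for illustration.

```python
def fdtd_step(y_prev, y_curr):
    """Leapfrog update y(n+1, m) = y(n, m+1) + y(n, m-1) - y(n-1, m), zero outside the window."""
    padded = [0] + list(y_curr) + [0]
    return [padded[i] + padded[i + 2] - y_prev[i] for i in range(len(y_curr))]


M, center = 15, 7
y_prev = [0] * M
y_curr = [0] * M
y_curr[center] = 1  # one K variable excited; the previous time step is all zero

frames = [y_curr]
for _ in range(4):
    y_next = fdtd_step(y_prev, y_curr)
    frames.append(y_next)
    y_prev, y_curr = y_curr, y_next

for k, frame in enumerate(frames):
    print(k, frame)
```

Every frame is nonzero only at alternate samples, and the set of nonzero samples alternates in parity from one time step to the next, so the other subgrid is never reached.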

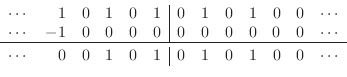

In addition to the W components being non-local, they can demand a larger dynamic range than the K variables. For example, if the entire semi-infinite string for ![]() is initialized with velocity ![]() , the initial displacement traveling-wave components look as follows:

|

(E.19) |

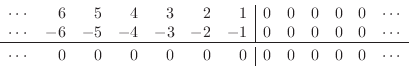

and the variables evolve forward in time as follows:

|

(E.20) |

|

(E.21) |

|

(E.22) |

Thus, the left semi-infinite string moves upward at a constant velocity of 2, while a ramp spreads out to the left and right of position

where $\mathbb{Z}$ denotes the set of all integers. While the FDTD excitation is also not local, of course, it is bounded for all ![]() .

Since the traveling-wave components of initial velocity excitations are generally non-local in a displacement-based simulation, as illustrated in the preceding examples, it is often preferable to use velocity waves (or force waves) in the first place [447].

Another reason to prefer force or velocity waves is that displacement inputs are inherently impulsive. To see why this is so, consider that any physically correct driving input must effectively exert some finite force on the string, and this force is free to change arbitrarily over time. The ``equivalent circuit'' of the infinitely long string at the driving point is a ``dashpot'' having a real, positive resistance. The applied force can be divided by this resistance to obtain the velocity of the string driving point, and this velocity is free to vary arbitrarily over time, proportional to the applied force. However, this velocity must be time-integrated to obtain a displacement. Therefore, there can be no instantaneous displacement response to a finite driving force. In other words, any instantaneous effect of an input driving signal on an output displacement sample is non-physical except in the case of a massless system. Infinite force is required to move the string instantaneously. In sampled displacement simulations, we must interpret displacement changes as resulting from time-integration over a sampling period. As the sampling rate increases, any physically meaningful displacement driving signal must converge to zero.

Additive Inputs

Instead of initial conditions, ongoing input signals can be defined analogously. For example, feeding an input signal into the FDTD via

corresponds to physically driving a single sample of string displacement at position

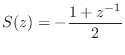

Interpretation of the Time-Domain KW Converter

As shown above, driving a single displacement sample $y_{n,m}$ in the FDTD corresponds to driving a velocity input at position $m$ on two alternating subgrids over time. Therefore, the filter $H(z) = 1 - z^{-2}$ acts as the filter $H(z) = 1 - z^{-1}$ on either subgrid alone, i.e., as a first-order difference. Since displacement is being simulated, velocity inputs must be numerically integrated. The first-order difference can be seen as canceling this integration, thereby converting a velocity input to a displacement input, as in Eq.(E.23).

State Space Formulation

In this section, we will summarize and extend the above discussion by means of a state space analysis [220].

FDTD State Space Model

Let $\underline{x}_K(n)$ denote the FDTD state for one of the two subgrids at time $n$, as defined by Eq.(E.10). The other subgrid is handled identically and will not be considered explicitly. In fact, the other subgrid can be dropped altogether to obtain a half-rate, staggered grid scheme [55,147]. However, boundary conditions and input signals will couple the subgrids, in general. To land on the same subgrid after a state update, it is necessary to advance time by two samples instead of one. The state-space model for one subgrid of the FDTD model of the ideal string may then be written as

$$
\begin{aligned}
\underline{x}_K(n+2) &= \mathbf{A}_K\,\underline{x}_K(n) + \mathbf{B}_K\,\underline{u}(n+2) \\
\underline{y}(n) &= \mathbf{C}_K\,\underline{x}_K(n)
\end{aligned}
$$

To avoid the issue of boundary conditions for now, we will continue working with the infinitely long string. As a result, the state vector

When there is a general input signal vector $\underline{u}(n)$, it is necessary to augment the input matrix $\mathbf{B}_K$ to accommodate contributions over both time steps. This is because inputs to positions $m\pm 1$ at time $n+1$ affect position $m$ at time $n+2$. Henceforth, we assume $\mathbf{B}_K$ and $\underline{u}$ have been augmented in this way. Thus, if there are $q$ input signals $\underline{\upsilon}(n) = [\upsilon_i(n)]$, $i=1,\ldots,q$, driving the full string state through a set of input weights, the vector $\underline{u}(n)$ is of dimension $2q\times 1$:

$$
\underline{u}(n+2) =
\begin{bmatrix}
\underline{\upsilon}(n+2)\\
\underline{\upsilon}(n+1)
\end{bmatrix}
$$

The matrix $\mathbf{C}_K$ forms the output signal as an arbitrary linear combination of states. To obtain the usual displacement output for the subgrid, $\mathbf{C}_K$ is the matrix formed from the identity matrix by deleting every other row, thereby retaining all displacement samples at time $n$ and discarding all displacement samples at time $n-1$ in the state vector $\underline{x}_K(n)$:

![\begin{displaymath}

\underbrace{\left[\!

\begin{array}{c}

\vdots \\

y_{n,m-2} \...

..._{n,m+4}\\

\vdots

\end{array}\!\right]}_{\underline{x}_K(n)}

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4612.png)

The intra-grid state update for even

For odd

![\begin{displaymath}

\underbrace{\left[\!

\begin{array}{l}

\qquad\vdots\\

y_{n+1...

...+3}\\

\qquad\vdots

\end{array}\!\right]}_{\underline{x}_K(n)}

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4626.png)

![\begin{displaymath}

\underbrace{\left[\!

\begin{array}{l}

\qquad\vdots\\

y_{n+1...

...ine{\upsilon}(n+1)

\end{array}\!\right]}_{\underline{u}(n+2)}.

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4627.png)

DW State Space Model

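As discussed in §E.2, the traveling-wave decomposition Eq.(E.4) defines a linear transformation Eq.(E.10) from the DW state to the FDTD state:

$$ \underline{x}_K = \mathbf{T}\,\underline{x}_W $$

Since $\mathbf{T}$ is invertible, it qualifies as a change of coordinates for the state space. Substituting this relation into the FDTD state-space model and multiplying through by $\mathbf{T}^{-1}$ gives a new state-space representation of the same dynamic system:

$$
\begin{aligned}
\underline{x}_W(n+2) &= \mathbf{A}_W\,\underline{x}_W(n) + \mathbf{B}_W\,\underline{u}(n+2) \\
\underline{y}(n) &= \mathbf{C}_W\,\underline{x}_W(n)
\end{aligned}
\tag{E.30}
$$

where $\mathbf{A}_W = \mathbf{T}^{-1}\mathbf{A}_K\mathbf{T}$, $\mathbf{B}_W = \mathbf{T}^{-1}\mathbf{B}_K$, and $\mathbf{C}_W = \mathbf{C}_K\mathbf{T}$.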

To verify that the DW model derived in this manner is the computation diagrammed in Fig.E.2, we may write down the state transition matrix for one subgrid from the figure to obtain the permutation matrix

and displacement output matrix

![\begin{displaymath}

\underbrace{\left[\!

\begin{array}{c}

\vdots \\

y_{n,m-2} \...

...+4} \\

\quad\vdots

\end{array}\!\right]}_{\underline{x}_W(n)}

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4644.png)

DW Displacement Inputs

We define general DW inputs as follows:

| (E.33) | |||

| (E.34) |

The

Typically, input signals are injected equally to the left and right along the string, in which case

![\begin{displaymath}

\underbrace{\left[\!

\begin{array}{c}

\vdots\\

y^{+}_{n+2,m...

...ine{\upsilon}(n+1)

\end{array}\!\right]}_{\underline{u}(n+2)}.

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4655.png)

![\begin{displaymath}

\underline{x}_W(n+2) = \mathbf{A}_W\underline{x}_W(n) +

\un...

...d{array}\!\right]}_{{\mathbf{B}_W}}

\underline{\upsilon}(n+2).

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4657.png)

To show that the directly obtained FDTD and DW state-space models correspond to the same dynamic system, it remains to verify that $\mathbf{A}_W = \mathbf{T}^{-1}\mathbf{A}_K\mathbf{T}$. It is somewhat easier to show that

![\begin{eqnarray*}

\mathbf{T}\,\mathbf{A}_W&=& \mathbf{A}_K\,\mathbf{T}\\

&=&

\l...

...dots & \vdots & \vdots & \vdots & \vdots

\end{array}\!\right].

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img4659.png)

A straightforward calculation verifies that the above identity holds, as expected. One can similarly verify $\mathbf{C}_W = \mathbf{C}_K\mathbf{T}$, as expected. The relation $\mathbf{B}_K = \mathbf{T}\,\mathbf{B}_W$ provides a recipe for translating any choice of input signals for the FDTD model to equivalent inputs for the DW model, or vice versa. For example, in the scalar input case ($q=1$), the DW input-weights $\mathbf{B}_W$ become FDTD input-weights $\mathbf{B}_K$ according to

![\begin{displaymath}

\left[\!

\begin{array}{l}

\qquad\vdots\\

y_{n+1,m-1}\\

y_{...

...psilon}(n+2)\\

\underline{\upsilon}(n+1)

\end{array}\!\right]

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4662.png)

Finally, when

![\begin{displaymath}

\mathbf{B}_K=

\left[\!

\begin{array}{cc}

\vdots & \vdots\\

...

...0 \\

2 & 0 \\

1 & 0 \\

\vdots & \vdots

\end{array}\!\right]

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4668.png)

DW Non-Displacement Inputs

Since a displacement input at position $m$ corresponds to symmetrically exciting the right- and left-going traveling-wave components $y^{+}_{m}$ and $y^{-}_{m}$, it is of interest to understand what it means to excite these components antisymmetrically. As discussed in §E.3.3, an antisymmetric excitation of traveling-wave components can be interpreted as a velocity excitation. It was noted that localized velocity excitations in the FDTD generally correspond to non-localized velocity excitations in the DW, and that velocity in the DW is proportional to the spatial derivative of the difference between the left-going and right-going traveling displacement-wave components (see Eq.(E.13)). More generally, the antisymmetric component of displacement-wave excitation can be expressed in terms of any wave variable which is linearly independent relative to displacement, such as acceleration, slope, force, momentum, and so on. Since the state space of a vibrating string (and other mechanical systems) is traditionally taken to be position and velocity, it is perhaps most natural to relate the antisymmetric excitation component to velocity.

In practice, the simplest way to handle a velocity input $v_m(n)$ in a DW simulation is to first pass it through a first-order integrator of the form

$$H(z) = \frac{1}{1-z^{-1}} = 1 + z^{-1} + z^{-2} + \cdots$$

(E.37)

to convert it to a displacement input. By the equivalence of the DW and FDTD models, this works equally well for the FDTD model. However, in view of §E.3.3, this approach does not take full advantage of the ability of the FDTD scheme to provide localized velocity inputs for applications such as simulating a piano hammer strike. The FDTD provides such velocity inputs for ``free'' while the DW requires the external integrator Eq. (E.37).
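As a minimal sketch of such an external integrator (not taken from the source), the running sum below implements $y(n) = y(n-1) + x(n)$, which is the difference equation of a first-order discrete-time integrator. The function name `integrate_velocity` and the optional scale factor `dt` are assumptions introduced for illustration; with `dt=1` the output is the plain sum over discrete time.

```python
def integrate_velocity(velocity, dt=1.0):
    """Running sum y(n) = y(n-1) + dt * v(n): a first-order discrete-time integrator."""
    displacement = []
    total = 0.0
    for v in velocity:
        total += dt * v
        displacement.append(total)
    return displacement


# A unit velocity impulse integrates to a displacement step.
step = integrate_velocity([1.0, 0.0, 0.0, 0.0])
```

The integrated signal can then be applied as an ordinary displacement input in either the DW or the FDTD model.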

Note, by the way, that these ``integrals'' (both that done internally by the FDTD and that done by Eq. (E.37)) are merely sums over discrete time, not true integrals. As a result, they are exact only at dc (and also trivially at $f_s/2$, where the output amplitude is zero). Discrete sums can also be considered exact integrators for impulse-train inputs, a point of view sometimes useful when interpreting simulation results. For normal bandlimited signals, discrete sums most accurately approximate integrals in a neighborhood of dc. The KW-converter filter $H(z)=1-z^{-2}$ has analogous properties.
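The claim that a discrete sum approximates an integral best near dc can be checked numerically. The sketch below compares only the gain of the running sum $1/(1-e^{-j\omega})$ with the gain $1/\omega$ of an ideal integrator, assuming a unit sampling interval; the function names are hypothetical.

```python
import cmath


def running_sum_gain(w):
    """Gain of the running sum 1/(1 - e^{-jw}) at radian frequency w (T = 1)."""
    return abs(1.0 / (1.0 - cmath.exp(-1j * w)))


def ideal_integrator_gain(w):
    """Gain of the continuous-time integrator 1/(jw)."""
    return 1.0 / w


# Ratio of the two gains at a few frequencies between dc and half the sampling rate.
ratios = {w: running_sum_gain(w) / ideal_integrator_gain(w)
          for w in (0.01, 0.1, 1.0, 3.0)}
```

The ratio equals $(\omega/2)/\sin(\omega/2)$, which tends to 1 as $\omega \to 0$ and grows steadily toward half the sampling rate.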

Input Locality

The DW state-space model is given in terms of the FDTD state-space model by Eq. (E.31). The similarity transformation matrix $\mathbf{T}$ is bidiagonal, so that $\mathbf{C}_K$ and $\mathbf{C}_W=\mathbf{C}_K\mathbf{T}$ are both approximately diagonal when the output is string displacement for all $m$. However, since $\mathbf{T}^{-1}$ given in Eq. (E.11) is upper triangular, the input matrix $\mathbf{B}_W=\mathbf{T}^{-1}\mathbf{B}_K$ can replace sparse input matrices $\mathbf{B}_K$ with only half-sparse $\mathbf{B}_W$, unless successive columns of $\mathbf{T}^{-1}$ are equally weighted, as discussed in §E.3. We can say that local K-variable excitations may correspond to non-local W-variable excitations. From Eq. (E.35) and Eq. (E.36), we see that displacement inputs are always local in both systems. Therefore, local FDTD and non-local DW excitations can only occur when a variable dual to displacement is being excited, such as velocity. If the external integrator Eq. (E.37) is used, all inputs are ultimately displacement inputs, and the distinction disappears.
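The locality argument can be illustrated on a small example. The sketch below assumes an $8\times 8$ cropped $\mathbf{T}$ with ones on the main diagonal and first superdiagonal, whose inverse is upper triangular with alternating signs; the helper names are hypothetical. It maps two K-variable input vectors through $\mathbf{T}^{-1}$: one exciting a single state variable, and one weighting two successive state variables equally.

```python
def make_T(n):
    """Cropped transformation: ones on the main diagonal and first superdiagonal."""
    return [[1 if j in (i, i + 1) else 0 for j in range(n)] for i in range(n)]


def make_T_inv(n):
    """Closed-form inverse: upper triangular with alternating signs."""
    return [[(-1) ** (j - i) if j >= i else 0 for j in range(n)] for i in range(n)]


def matvec(A, x):
    """Matrix-vector product for lists of lists."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


n = 8
T, T_inv = make_T(n), make_T_inv(n)

# Sanity check that T_inv really inverts T.
product = [[sum(T[i][k] * T_inv[k][j] for k in range(n)) for j in range(n)]
           for i in range(n)]

single = [0, 0, 0, 0, 1, 0, 0, 0]  # K input on one state variable
pair = [0, 0, 0, 0, 1, 1, 0, 0]    # equal weights on two successive state variables

b_w_single = matvec(T_inv, single)  # spreads over every earlier W state variable
b_w_pair = matvec(T_inv, pair)      # alternating terms cancel, leaving one entry
```

The single-variable input becomes an alternating pattern reaching back to the start of the state vector, while the equally weighted pair stays confined to one W-variable entry.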

Boundary Conditions

The relations of the previous section do not hold exactly when the string length is finite. A finite-length string forces consideration of boundary conditions. In this section, we will introduce boundary conditions as perturbations of the state transition matrix. In addition, we will use the DW-FDTD equivalence to obtain physically well behaved boundary conditions for the FDTD method.

Consider an ideal vibrating string with $M=8$ spatial samples. This is a sufficiently large number to make clear most of the repeating patterns in the general case. Introducing boundary conditions is most straightforward in the DW paradigm. We therefore begin with the order 8 DW model, for which the state vector (for the 0th subgrid) will be

![\begin{displaymath}

\underline{x}_W(n) =

\left[\!

\begin{array}{l}

y^{+}_{n,0}\...

...}_{n,4}\\

y^{+}_{n,6}\\

y^{-}_{n,6}\\

\end{array}\!\right].

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4678.png)

![\begin{displaymath}

\mathbf{C}_W=

\left[\!

\begin{array}{ccccccccccc}

1 & 1 & ...

...0 & 0 \\

0 & 0 & 0 & 0 & 0 & 0 & 1 & 1

\end{array}\!\right]

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4679.png)

![\begin{displaymath}

{\mathbf{B}_W}

=

\left[\!

\begin{array}{cc}

0 & 0 \\

0 & ...

...

0 & 0 \\

0 & 0 \\

0 & 0 \\

0 & 0

\end{array}\!\right]

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4681.png)

Resistive Terminations

Let's begin with simple ``resistive'' terminations at the string endpoints, resulting in the reflection coefficient $g$ at each end of the string, where $|g|\le 1$ corresponds to nonnegative (passive) termination resistances [447]. Inspection of Fig.E.2 makes it clear that terminating the left endpoint may be accomplished by setting

![$\displaystyle \tilde{\mathbf{A}}_W= \left[\! \begin{array}{ccccccccccc} 0 & g_l...

... 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & g_r & 0 \end{array} \!\right]$](http://www.dsprelated.com/josimages_new/pasp/img4684.png)

(E.38)

The simplest choice of state transformation matrix is

![\begin{displaymath}

\mathbf{T}\isdef

\left[\!

\begin{array}{ccccccccccc}

1 & 1...

... 1 & 1 \\

0 & 0 & 0 & 0 & 0 & 0 & 0 & 1

\end{array}\!\right]

\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4685.png)

![\begin{eqnarray*}

\tilde{\mathbf{A}}_K&\isdef & \mathbf{T}\tilde{\mathbf{A}}_W\m...

...r \\

0 & 0 & 0 & 0 & 0 & 0 & g_r & -g_r

\end{array}\!\right],

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img4687.png)

where $g_{l+}\triangleq 1+g_l$ and $g_{r+}\triangleq 1+g_r$. We see that the left FDTD termination is non-local for $g_l\neq -1$, while the right termination is local (to two adjacent spatial samples) for all $g_r$. This can be viewed as a consequence of having ordered the FDTD state variables as $[y_{n,m},y_{n-1,m+1},\ldots]$ instead of $[y_{n-1,m},y_{n,m+1},\ldots]$. Choosing the other ordering interchanges the endpoint behavior. Call these orderings Type I and Type II, respectively. Then $\mathbf{T}_{II}=\mathbf{T}_{I}^T$; that is, the similarity transformation matrix $\mathbf{T}$ is transposed when converting from Type I to Type II or vice versa. By anechoically coupling a Type I FDTD simulation on the right with a Type II simulation on the left, general resistive terminations may be obtained on both ends which are localized to two spatial samples.
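The locality of the two terminations can be checked numerically. The sketch below is an illustration under stated assumptions, not the source's own derivation: the $8\times 8$ DW transition matrix is rebuilt from the visible first and last rows of Eq. (E.38) plus the usual one-sample shift along each rail, $\mathbf{T}$ is taken as the cropped bidiagonal matrix of ones, and all function names are hypothetical.

```python
def make_T(n):
    """Cropped transformation: ones on the main diagonal and first superdiagonal."""
    return [[1 if j in (i, i + 1) else 0 for j in range(n)] for i in range(n)]


def make_T_inv(n):
    """Closed-form inverse: upper triangular with alternating signs."""
    return [[(-1) ** (j - i) if j >= i else 0 for j in range(n)] for i in range(n)]


def matmul(A, B):
    """Matrix product for lists of lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def make_A_W(g_l, g_r, n=8):
    """DW transition matrix for the state [y+_0, y-_0, y+_2, y-_2, ...] (assumed layout)."""
    A = [[0] * n for _ in range(n)]
    A[0][1] = g_l                  # left end: y+ is the reflected y-
    A[n - 1][n - 2] = g_r          # right end: y- is the reflected y+
    for i in range(2, n, 2):       # right-going rail shifts one subgrid sample right
        A[i][i - 2] = 1
    for i in range(1, n - 1, 2):   # left-going rail shifts one subgrid sample left
        A[i][i + 2] = 1
    return A


def make_A_K(g_l, g_r, n=8):
    """FDTD transition matrix obtained by the similarity transformation T A_W T^{-1}."""
    return matmul(matmul(make_T(n), make_A_W(g_l, g_r, n)), make_T_inv(n))


clamped_left = make_A_K(-1, 0.5)   # rigid left end, resistive right end
general_left = make_A_K(0.5, 0.5)  # resistive terminations on both ends
```

With `g_l = -1` the first row of the FDTD matrix touches only two adjacent entries, whereas for any other `g_l` it fills the rest of the row with alternating values of `1 + g_l`. The last row is `[0, ..., 0, g_r, -g_r]` in both cases.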

In nearly all musical sound synthesis applications, at least one of the string endpoints is modeled as rigidly clamped at the ``nut''. Therefore, since the FDTD, as defined here, most naturally provides a clamped endpoint on the left, with more general localized terminations possible on the right, we will proceed with this case for simplicity in what follows. Thus, we set $g_l=-1$ and obtain

![\begin{eqnarray*}

\mbox{$\stackrel{{\scriptscriptstyle \vdash}}{\mathbf{A}}$}_K&...

..._r \\

0 & 0 & 0 & 0 & 0 & 0 & g_r & -g_r

\end{array}\!\right]

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img4695.png)

Boundary Conditions as Perturbations

To study the effect of boundary conditions on the state transition matrices $\mathbf{A}_W$ and $\mathbf{A}_K$, it is convenient to write the terminated transition matrix as the sum of the ``left-clamped'' case $\stackrel{\vdash}{\mathbf{A}}_W$ (for which $g_l=-1$) plus a series of one or more rank-one perturbations. For example, introducing a right termination with reflectance $g_r$ can be written

$$\tilde{\mathbf{A}}_W = \stackrel{\vdash}{\mathbf{A}}_W + g_r\,{\bm \delta}_{8,7}$$

(E.39)

where ${\bm \delta}_{ij}$ is the $M\times M$ matrix containing a 1 in its $(i,j)$th entry and zero elsewhere, with rows and columns numbered from 1.

In general, when $i+j$ is odd, adding ${\bm \delta}_{ij}$ to $\stackrel{\vdash}{\mathbf{A}}_W$ corresponds to a connection from left-going waves to right-going waves, or vice versa (see Fig.E.2). When $j$ is odd and $i$ is even, the connection flows from the right-going to the left-going signal path, thus providing a termination (or partial termination) on the right. Left terminations flow from the bottom to the top rail in Fig.E.2, and in such connections $j$ is even and $i$ is odd. The spatial sample numbers involved in the connection are $2\lfloor (i-1)/2\rfloor$ and $2\lfloor (j-1)/2\rfloor$, where $\lfloor x\rfloor$ denotes the greatest integer less than or equal to $x$.
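The index bookkeeping can be made concrete with a small helper. This sketch assumes the order-8 state vector $[y^{+}_{n,0}, y^{-}_{n,0}, y^{+}_{n,2}, y^{-}_{n,2}, \ldots]$, so that odd 1-based indices lie on the right-going rail and even ones on the left-going rail. Row index `i` is taken as the destination of the connection and column index `j` as its source; the function name is hypothetical.

```python
def describe_connection(i, j):
    """Interpret a unit entry at row i, column j (1-based) of the DW transition matrix."""
    source_rail = "right-going" if j % 2 == 1 else "left-going"
    dest_rail = "right-going" if i % 2 == 1 else "left-going"
    source_sample = 2 * ((j - 1) // 2)  # spatial sample feeding the connection
    dest_sample = 2 * ((i - 1) // 2)    # spatial sample receiving it
    if source_rail == dest_rail:
        kind = "propagation along one rail"
    elif source_rail == "right-going":
        kind = "right-type termination"
    else:
        kind = "left-type termination"
    return kind, source_sample, dest_sample


right_end = describe_connection(8, 7)  # entry used for the right reflectance g_r
left_end = describe_connection(1, 2)   # entry used for the left reflectance g_l
shift = describe_connection(3, 1)      # ordinary propagation of y+ from sample 0 to 2
```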

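The rank-one perturbation of the DW transition matrix in Eq. (E.39) corresponds to the following rank-one perturbation of the FDTD transition matrix $\stackrel{\vdash}{\mathbf{A}}_K$:

$$\tilde{\mathbf{A}}_K = \stackrel{\vdash}{\mathbf{A}}_K + g_r\,\mathbf{T}{\bm \delta}_{8,7}\mathbf{T}^{-1}$$

In general, a perturbation ${\bm \delta}_{ij}$ of the DW transition matrix maps to $\mathbf{T}{\bm \delta}_{ij}\mathbf{T}^{-1}$ in the FDTD scheme.

Thus, the general rule is that ${\bm \delta}_{ij}$ transforms to a matrix which is zero in all but two rows (or all but one row when $i=1$). The nonzero rows are rows $i-1$ and $i$; they are identical, each being zero in columns $1$ through $j-1$ and containing the alternating sequence $[1,-1,1,-1,\ldots]$ from column $j$ onward.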
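The transformation of a single-entry perturbation can be verified directly. The sketch below assumes the cropped $8\times 8$ bidiagonal $\mathbf{T}$ of ones and its alternating-sign upper-triangular inverse, and computes $\mathbf{T}{\bm \delta}_{ij}\mathbf{T}^{-1}$ for 1-based indices; all helper names are hypothetical.

```python
def make_T(n):
    """Cropped transformation: ones on the main diagonal and first superdiagonal."""
    return [[1 if j in (i, i + 1) else 0 for j in range(n)] for i in range(n)]


def make_T_inv(n):
    """Closed-form inverse: upper triangular with alternating signs."""
    return [[(-1) ** (j - i) if j >= i else 0 for j in range(n)] for i in range(n)]


def matmul(A, B):
    """Matrix product for lists of lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def delta(i, j, n):
    """n x n matrix with a single 1 at row i, column j (1-based)."""
    D = [[0] * n for _ in range(n)]
    D[i - 1][j - 1] = 1
    return D


def transformed_delta(i, j, n=8):
    """FDTD counterpart T * delta_ij * T^{-1} of a DW single-entry perturbation."""
    return matmul(matmul(make_T(n), delta(i, j, n)), make_T_inv(n))


D87 = transformed_delta(8, 7)  # right termination
D12 = transformed_delta(1, 2)  # left termination
```

For `(8, 7)` the result has two identical nonzero rows (rows 7 and 8), each equal to row 7 of the inverse. For `(1, 2)` only row 1 is nonzero, and it runs across the remaining columns with alternating signs, which is the non-local left termination seen earlier.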

Reactive Terminations

In typical string models for virtual musical instruments, the ``nut end'' of the string is rigidly clamped while the ``bridge end'' is terminated in a passive reflectance $S(z)$. The condition for passivity of the reflectance is simply that its gain be bounded by 1 at all frequencies [447]:

$$\left|S(e^{j\omega T})\right| \le 1, \quad \forall\,\omega T\in[-\pi,\pi).$$

A very simple case, used, for example, in the Karplus-Strong plucked-string algorithm, is the two-point-average filter:

![\begin{eqnarray*}

\mbox{$\stackrel{{\scriptscriptstyle \vdash\!\!\dashv}}{\mathb...

... \\

0 & 0 & 0 & 0 & -1/2 & 1/2 & -1 & -1

\end{array}\!\right].

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img4722.png)

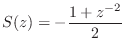

This gives the desired filter in a half-rate, staggered grid case. In the full-rate case, the termination filter is really

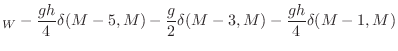

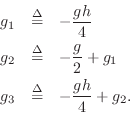
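The difference between the half-rate and full-rate interpretations can be examined numerically. The sketch below assumes the two-point average is applied with an inverting sign, that one subgrid sample corresponds to two full-rate samples (so the one-sample tap spacing becomes two samples), and that frequency is normalized to the sampling rate of whichever grid is being considered. The function name is hypothetical.

```python
import cmath
import math


def fir_response(taps, delays, w):
    """Frequency response sum_k taps[k] * exp(-j*w*delays[k]) at radian frequency w."""
    return sum(t * cmath.exp(-1j * w * d) for t, d in zip(taps, delays))


taps = [-0.5, -0.5]  # two-point average; the sign is an assumption
grid = [math.pi * k / 256 for k in range(257)]  # dc up to half the sampling rate

# Taps one sample apart on the half-rate subgrid.
half_rate_gain = [abs(fir_response(taps, [0, 1], w)) for w in grid]
# The same taps seen at the full rate are two samples apart.
full_rate_gain = [abs(fir_response(taps, [0, 2], w)) for w in grid]

passive = max(half_rate_gain + full_rate_gain) <= 1.0 + 1e-12
```

Both versions satisfy the passivity bound, but the full-rate gain has a notch at one quarter of the sampling rate and returns to 1 at half the sampling rate, whereas the half-rate gain falls monotonically to zero.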

Another often-used string termination filter in digital waveguide models is specified by [447]

![\begin{eqnarray*}

s(n) &=& -g\left[\frac{h}{4}, \frac{1}{2}, \frac{h}{4}\right]\...

...{j\omega T})&=&

-e^{-j\omega T}g\frac{1 + h \cos(\omega T)}{2},

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img4724.png)

where $g$ is an overall gain factor that affects the decay rate of all frequencies equally, while $h$ controls the relative decay rate of low frequencies and high frequencies. An advantage of this termination filter is that the delay is always one sample, for all frequencies and for all parameter settings; as a result, the tuning of the string is invariant with respect to termination filtering. In this case, the perturbation is

![\begin{eqnarray*}

\mbox{$\stackrel{{\scriptscriptstyle \vdash\!\!\dashv}}{\mathb...

...d g_2 & \quad -g_2 & \quad g_3 & \quad -g_3

\end{array}\!\right]

\end{eqnarray*}](http://www.dsprelated.com/josimages_new/pasp/img4728.png)

where

The filtered termination examples of this section generalize immediately to arbitrary finite-impulse response (FIR) termination filters $S(z)$. Denote the impulse response of the termination filter by $s(n)$.
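The three-tap termination filter given earlier in this section can be checked against its stated frequency response. The sketch below evaluates the taps $-g\,[h/4,\ 1/2,\ h/4]$ directly and compares the result with the closed form $-e^{-j\omega T}g\,(1+h\cos\omega T)/2$, using $T=1$. The parameter values and function names are illustrative assumptions.

```python
import cmath
import math


def three_tap_response(g, h, w):
    """Direct evaluation of the FIR filter with taps -g*[h/4, 1/2, h/4]."""
    taps = [-g * h / 4, -g / 2, -g * h / 4]
    return sum(t * cmath.exp(-1j * w * k) for k, t in enumerate(taps))


def closed_form(g, h, w):
    """Stated response: one sample of delay times a real, frequency-dependent gain."""
    return -cmath.exp(-1j * w) * g * (1 + h * math.cos(w)) / 2


g, h = 0.99, 0.5  # illustrative values only
grid = [math.pi * k / 128 for k in range(129)]

max_mismatch = max(abs(three_tap_response(g, h, w) - closed_form(g, h, w)) for w in grid)
peak_gain = max(abs(three_tap_response(g, h, w)) for w in grid)
```

Because the taps are symmetric about the middle one, the phase is exactly that of a one-sample delay at every frequency, which is why the string tuning does not change with `g` or `h`. For these values the gain peaks at dc, at $g(1+h)/2$.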

Interior Scattering Junctions

A so-called Kelly-Lochbaum scattering junction [297,447] can be introduced into the string at the fourth sample by the following perturbation

A single time-varying scattering junction provides a reasonable model for plucking, striking, or bowing a string at a point. Several adjacent scattering junctions can model a distributed interaction, such as a piano hammer, finger, or finite-width bow spanning several string samples.

Note that scattering junctions separated by one spatial sample (as typical in ``digital waveguide filters'' [447]) will couple the formerly independent subgrids. If scattering junctions are confined to one subgrid, they are separated by two samples of delay instead of one, resulting in round-trip transfer functions of the form $H(z^2)$ (as occurs in the digital waveguide mesh). In the context of a half-rate staggered-grid scheme, they can provide general IIR filtering in the form of a ladder digital filter [297,447].

Lossy Vibration

The DW and FDTD state-space models are equivalent with respect to lossy traveling-wave simulation. Figure E.4 shows the flow diagram for the case of simple attenuation by $g$ per sample of wave propagation, where $|g|\le 1$ for a passive string.

The DW state update can be written in this case as

State Space Summary

We have seen that the DW and FDTD schemes correspond to state-space models which are related to each other by a simple change of coordinates (similarity transformation). It is well known that such systems exhibit the same transfer functions, have the same modes, and so on. In short, they are the same linear dynamic system. Differences may exist with respect to spatial locality of input signals, initial conditions, and boundary conditions.

State-space analysis was used to translate initial conditions and boundary conditions from one case to the other. Passive terminations in the DW paradigm were translated to passive terminations for the FDTD scheme, and FDTD excitations were translated to the DW case in order to interpret them physically.

Computational Complexity

The DW model is more efficient in one dimension because it can make use of delay lines to obtain an $\mathcal{O}(1)$ computation per time sample [437], whereas the FDTD scheme is $\mathcal{O}(M)$ per sample ($M$ being the number of spatial samples along the string). There is apparently no known way to achieve $\mathcal{O}(1)$ complexity for the FDTD scheme. In higher dimensions, i.e., when simulating membranes and volumes, the delay-line advantage disappears, and the FDTD scheme has the lower operation count (and memory storage requirements).
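The cost difference can be seen in a small side-by-side sketch. It assumes rigid terminations (reflectance $-1$) at both ends, the standard leapfrog recursion $y(n+1,m) = y(n,m+1) + y(n,m-1) - y(n-1,m)$ for the FDTD, and a circular-buffer delay line for the DW. The class and function names, the initial wave shapes, and the pickup position are all hypothetical.

```python
class DelayLine:
    """Circular buffer: a shift costs O(1) regardless of the line length."""

    def __init__(self, samples):
        self.buf = list(samples)
        self.start = 0  # index in buf of logical position 0

    def __getitem__(self, m):
        return self.buf[(self.start + m) % len(self.buf)]

    def shift_right(self, new_first):
        # Logical sample m moves to m+1; the old last sample is overwritten.
        self.start = (self.start - 1) % len(self.buf)
        self.buf[self.start] = new_first

    def shift_left(self, new_last):
        # Logical sample m moves to m-1; the old first sample is overwritten.
        self.buf[self.start] = new_last
        self.start = (self.start + 1) % len(self.buf)


def dw_step(yp, ym):
    """One DW time step with inverting reflections at both ends: O(1) work."""
    M = len(yp.buf)
    into_left_end = ym[1]        # left-going sample arriving at m = 0
    into_right_end = yp[M - 2]   # right-going sample arriving at m = M-1
    yp.shift_right(-into_left_end)
    ym.shift_left(-into_right_end)


def fdtd_step(y_now, y_prev):
    """One leapfrog time step with fixed ends: O(M) work."""
    M = len(y_now)
    y_next = [0.0] * M
    for m in range(1, M - 1):
        y_next[m] = y_now[m + 1] + y_now[m - 1] - y_prev[m]
    return y_next


M, pickup, steps = 8, 3, 40
yp = DelayLine([0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 0.0])  # right-going components
ym = DelayLine([0.0, 0.0, 0.0, 0.5, 1.0, 0.5, 0.0, 0.0])  # left-going components


def snapshot():
    """Physical displacement: sum of the two traveling-wave components."""
    return [yp[m] + ym[m] for m in range(M)]


# The FDTD needs two successive displacement snapshots as its initial state.
y_prev = snapshot()
dw_step(yp, ym)
y_now = snapshot()

dw_output, max_error = [], 0.0
for _ in range(steps):
    dw_step(yp, ym)
    y_now, y_prev = fdtd_step(y_now, y_prev), y_now
    dw_output.append(yp[pickup] + ym[pickup])
    max_error = max(max_error, abs(y_now[pickup] - dw_output[-1]))
```

Both simulations produce the same displacement at the pickup position, but each DW step touches a fixed number of samples while each FDTD step loops over the whole string. Reading the full displacement profile from the DW would of course also cost $\mathcal{O}(M)$.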

Summary

An explicit linear transformation was derived for converting state variables of the finite-difference time-domain (FDTD) scheme to those of the digital waveguide (DW) scheme. The equivalence of the FDTD and DW state transitions was reviewed, and the proof of state-space equivalence was completed. Since the DW scheme is exact within its bandwidth (being a sampled traveling-wave scheme instead of a finite difference scheme), it can be put forth as the proper physical interpretation of the FDTD scheme, and consequently be used to provide physically accurate initial conditions and excitations for the FDTD method. For its part, the FDTD method provides lower cost relative to the DW method in dimensions higher than one (for simulating membranes, volumes, and so on), and can be preferred in highly distributed nonlinear string simulation applications.

Future Work

The simple state translation formulas derived here for the one-dimensional case do not extend simply to higher dimensions. While straightforward extensions to higher dimensions are presumed to exist, a simple and intuitive result such as found here for the 1D case could be more useful for initializing and driving FDTD mesh simulations from a physical point of view. In particular, spatially localized initial conditions and boundary conditions in the DW framework should map to localized counterparts in the FDTD scheme. A generalization of the Toeplitz operator $\mathbf{T}$ having a known closed-form inverse could be useful in higher dimensions.

Acknowledgments

The author wishes to thank Stefan Bilbao, Georg Essl, and Patty Huang for fruitful discussions on topics addressed in this appendix.


![\includegraphics[width=\twidth]{eps/fidealCopy}](http://www.dsprelated.com/josimages_new/pasp/img4517.png)

![$\displaystyle \left[\! \begin{array}{c} \vdots \\ y_{n,m-1}\\ y_{n-1,m}\\ y_{n,...

...n,m+3}\\ y^{+}_{n,m+5}\\ y^{-}_{n,m+5}\\ \vdots \end{array} \!\right]. \protect$](http://www.dsprelated.com/josimages_new/pasp/img4535.png)

![$\displaystyle \left[\! \begin{array}{c} \vdots \\ y^{+}_{n,m-1}\\ y^{-}_{n,m-1}...

... y_{n,m+3}\\ y_{n-1,m+4}\\ y_{n,m+5}\\ \vdots \\ \end{array} \!\right] \protect$](http://www.dsprelated.com/josimages_new/pasp/img4542.png)

![\begin{displaymath}\begin{array}{c}

\left[1, -1, 1, -1, 1\right]\\

\vspace{0.5i...

...y_{n-1,m+1}\\

y_{n,m+2}\\

y_{n-1,m+3}\\

\end{array}\!\right]\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4622.png)

![\begin{displaymath}+ \left[\underline{\beta}_m^T \quad (\underline{\beta}_{m-1}+...

...+2)\\

\underline{\upsilon}(n+1)

\end{array}\!\right].

\protect\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4623.png)

![$\displaystyle \underbrace{\left[\! \begin{array}{l} \qquad\vdots \\ y^{+}_{n+2,...

...{-}_{n,m+4} \\ \quad\vdots \end{array} \!\right]}_{\underline{x}_W(n)} \protect$](http://www.dsprelated.com/josimages_new/pasp/img4642.png)

![$\displaystyle \left({\mathbf{B}_W}\right)_m = \left[\! \begin{array}{cc} (\unde...

...ma}^{-}_m)^T & (\underline{\gamma}^{-}_{m+1})^T \end{array} \!\right]. \protect$](http://www.dsprelated.com/josimages_new/pasp/img4653.png)

![$\displaystyle \mathbf{B}_K= \left[\! \begin{array}{cc} \vdots & \vdots\\ \gamma...

...ma _{m+1}+\gamma _{m+3} \\ [5pt] \vdots & \vdots \end{array} \!\right] \protect$](http://www.dsprelated.com/josimages_new/pasp/img4664.png)

![\begin{displaymath}\mathbf{T}{\bm \delta}_{8,7}\mathbf{T}^{-1}

=

\left[\!

\begin...

...

0 & 0 & 0 & 0 & 0 & 0 & 1 & -1

\end{array}\!\right].

\protect\end{displaymath}](http://www.dsprelated.com/josimages_new/pasp/img4713.png)